Different Types of Machine Learning Models

Machine Learning involves finding connections or correlations between input and output data. If you were to ask about the various types of machine learning algorithms, you would receive a list of answers, including Classification and Regression, Supervised and Unsupervised, Probabilistic and Non-probabilistic, and many more. The critical question is: why is machine learning classified into so many categories? To understand this, we need to know the general machine learning pipeline used to solve a given problem.

General Machine Learning Pipeline

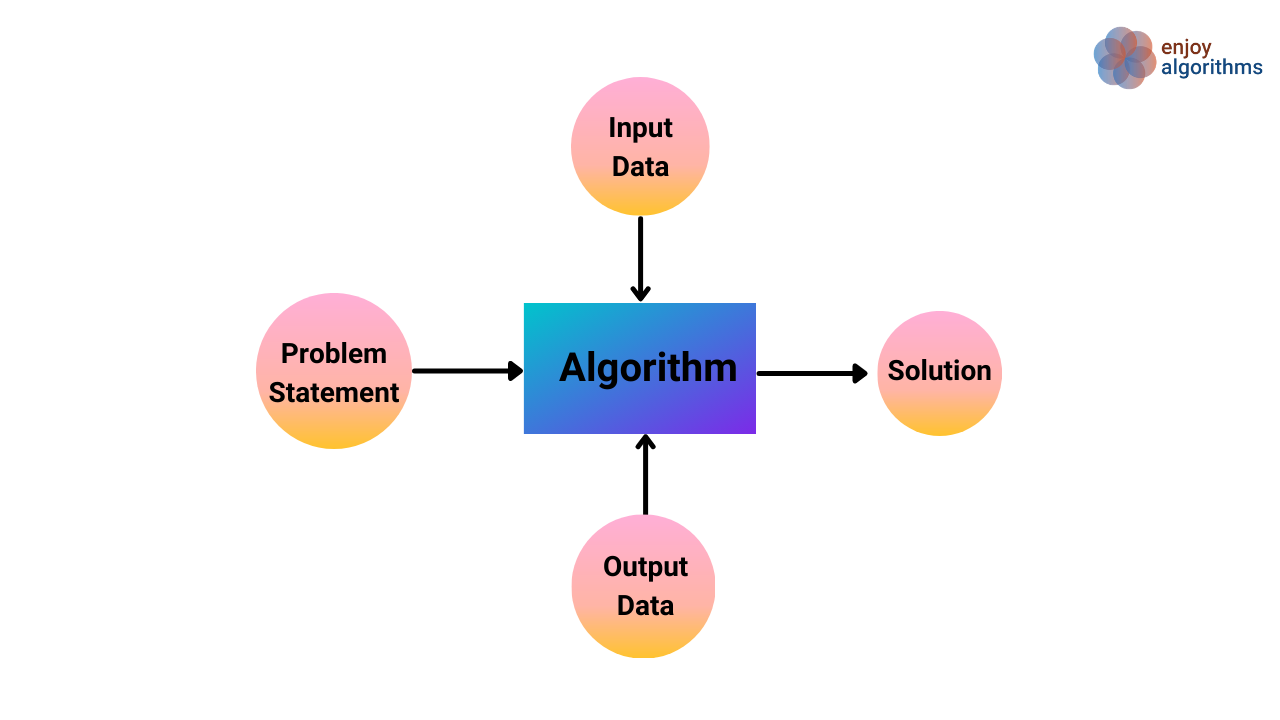

In Machine Learning, we first define a clear problem statement that we want to solve using ML. Once it is defined, we find or create a dataset for that problem statement. The data consists of input variables and true target variables, and the machine tries to find the mapping function between them. After finalizing the dataset, we pick an appropriate Machine Learning algorithm to solve the problem. There are a tremendous number of ML algorithms these days, and their suitability and performance vary depending on the available data and the nature of the problem statement. Finally, the selected ML algorithm produces the desired output as a solution.

Overall, five key components play a critical role in the machine learning pipeline, and they form the bases for classifying ML algorithms:

- Based on the nature of the input data

- Based on the nature of the problem statement

- Based on the nature of the algorithm

- Based on the nature of the solution

- Based on the nature of the output data

Let's discuss each of these categories in detail.

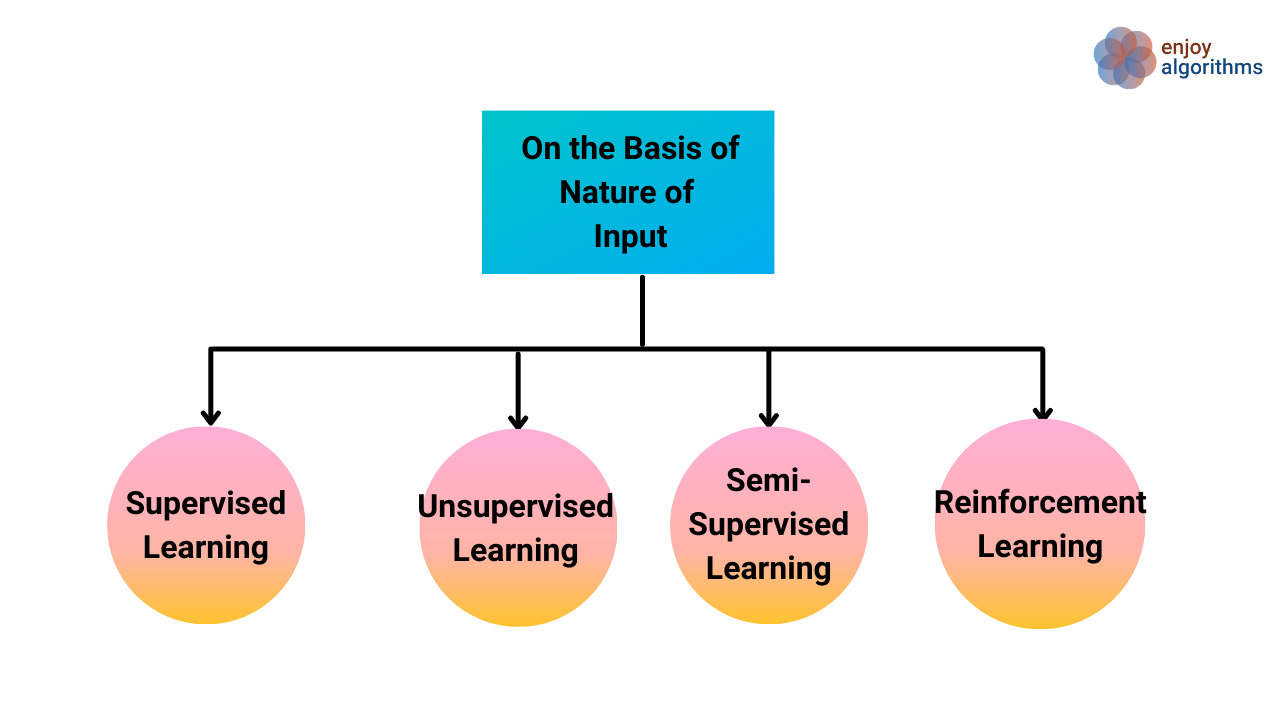

Types of machine learning models based on the nature of input data

Machine learning problems can be divided into four categories based on the input data type used to train the algorithms.

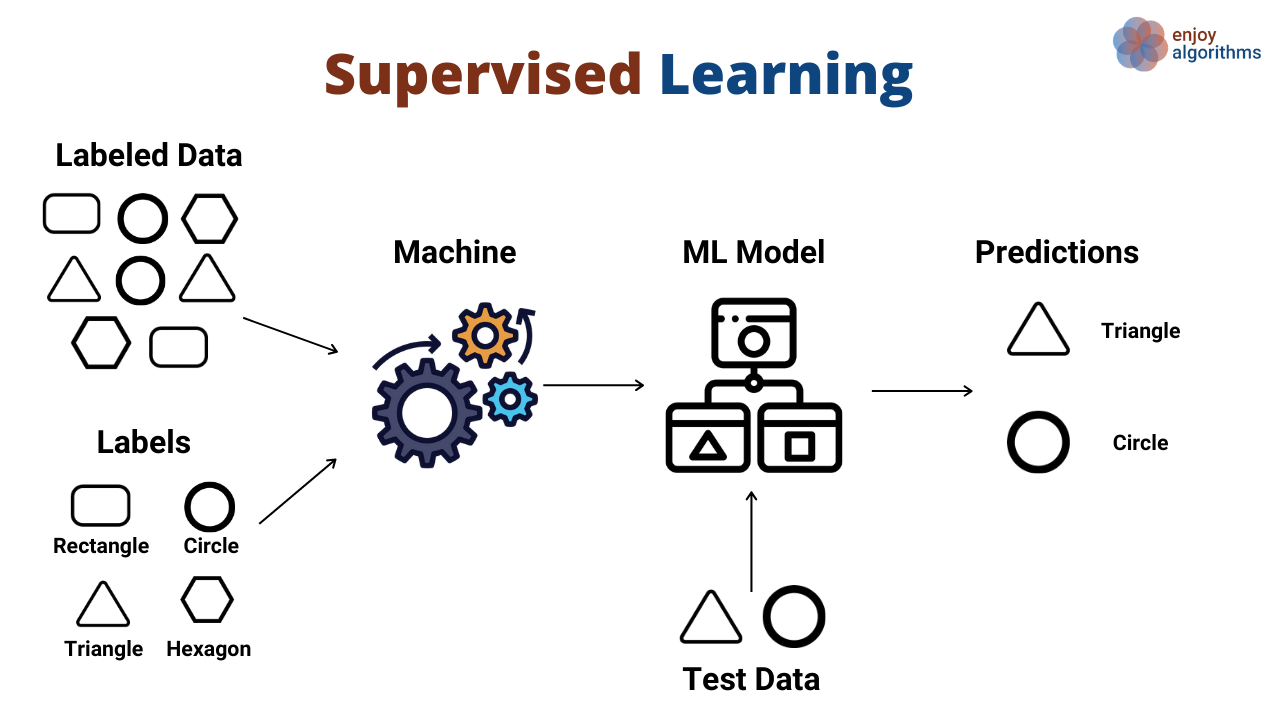

Supervised Learning

Supervised Learning involves having input variables (X) and an output variable (Y). We use a machine learning algorithm to learn the relationship between the input and output samples. It is called Supervised Learning because the training data acts like a teacher who guides the learning process. The figure below demonstrates this, where we provide explicit information as labels for the input data.

Some examples of supervised learning algorithms are Linear Regression, Logistic Regression, Decision Trees, Random Forest, Support Vector Machines (SVM), Naive Bayes Classifier, k-Nearest Neighbors (k-NN), Neural Networks (Multi-Layer Perceptron, Convolutional Neural Networks, Recurrent Neural Networks), etc.
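As a minimal sketch of learning from labelled (X, Y) pairs, the snippet below implements a k-nearest-neighbours classifier in plain Python. The two features (roundness and number of corners), the toy samples, and the helper name `knn_predict` are illustrative assumptions, not part of any library.

```python
from collections import Counter
import math

# Toy labelled training data: (feature vector X, label Y).
# Hypothetical features: (roundness, number of corners).
training_data = [
    ((0.95, 0.0), "circle"),
    ((0.90, 0.0), "circle"),
    ((0.92, 0.0), "circle"),
    ((0.20, 3.0), "triangle"),
    ((0.25, 3.0), "triangle"),
    ((0.15, 3.0), "triangle"),
]

def knn_predict(x, data, k=3):
    """Predict a label for x by majority vote among its k nearest labelled samples."""
    # Sort the labelled samples by Euclidean distance to the query point.
    neighbours = sorted(data, key=lambda sample: math.dist(x, sample[0]))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

print(knn_predict((0.88, 0.0), training_data))  # circle
print(knn_predict((0.22, 3.0), training_data))  # triangle
```

The labels in `training_data` play the role of the "teacher": without them, there would be nothing to vote on.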

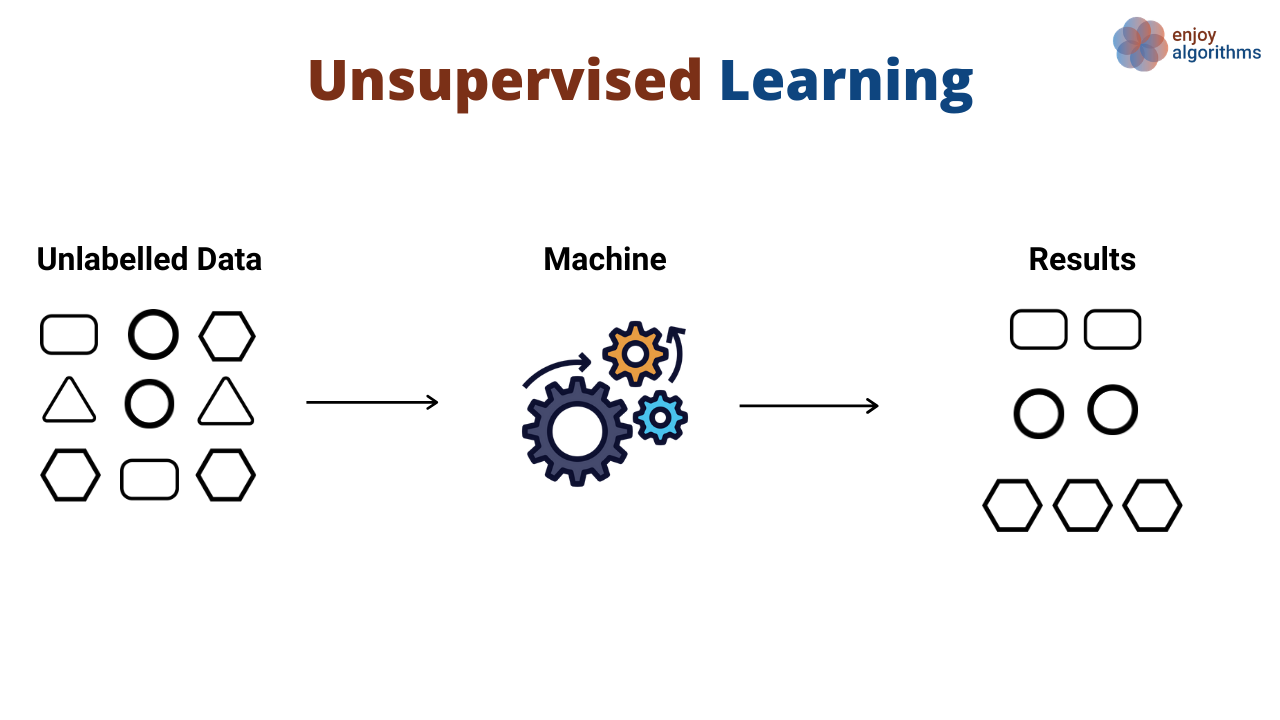

Unsupervised Learning

In Unsupervised Learning, only input data (X) is available, and no corresponding output label is present. Since no explicit output data guides the learning process, the machine has to find its own way to learn the mapping function. Internally, this is achieved by creating a pseudo output, such as the similarities between data samples. Here, the machine tries to identify the underlying characteristics shared among input samples and uses them as its output.

The unsupervised learning approach is mainly used to dive deeper into the data and discover its hidden structure. If we compare the above image with the image below, we don't have any information about the shape labels of the input data, yet our model can still segregate the samples based on their shape and size. This kind of segregation, performed by the machine without labels, is what we call Unsupervised Learning.

Some examples of unsupervised learning algorithms are K-Means Clustering, Hierarchical Clustering, Density-Based Clustering (DBSCAN), the Apriori algorithm for association rule mining, Non-Negative Matrix Factorization (NMF), and Principal Component Analysis (PCA).
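To illustrate how a model can group samples without any labels, here is a plain-Python sketch of K-Means (Lloyd's algorithm). The data points are made up, and to keep the result deterministic it uses a simple farthest-first initialisation, which is an assumption of this sketch; common library implementations typically use random or k-means++ initialisation instead.

```python
import math

def kmeans(points, k, iterations=20):
    """Plain K-Means: only the inputs are used, never any labels."""
    # Farthest-first initialisation: start from the first point, then repeatedly
    # pick the point farthest from all centroids chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        for i, cluster in enumerate(clusters):
            if cluster:  # keep the old centroid if a cluster ends up empty
                centroids[i] = tuple(sum(col) / len(cluster) for col in zip(*cluster))
    return centroids, clusters

# Hypothetical unlabelled samples forming two well-separated groups.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (8.0, 8.0), (8.2, 7.9), (7.9, 8.1)]
centroids, clusters = kmeans(points, k=2)
print(centroids)
print(clusters)
```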

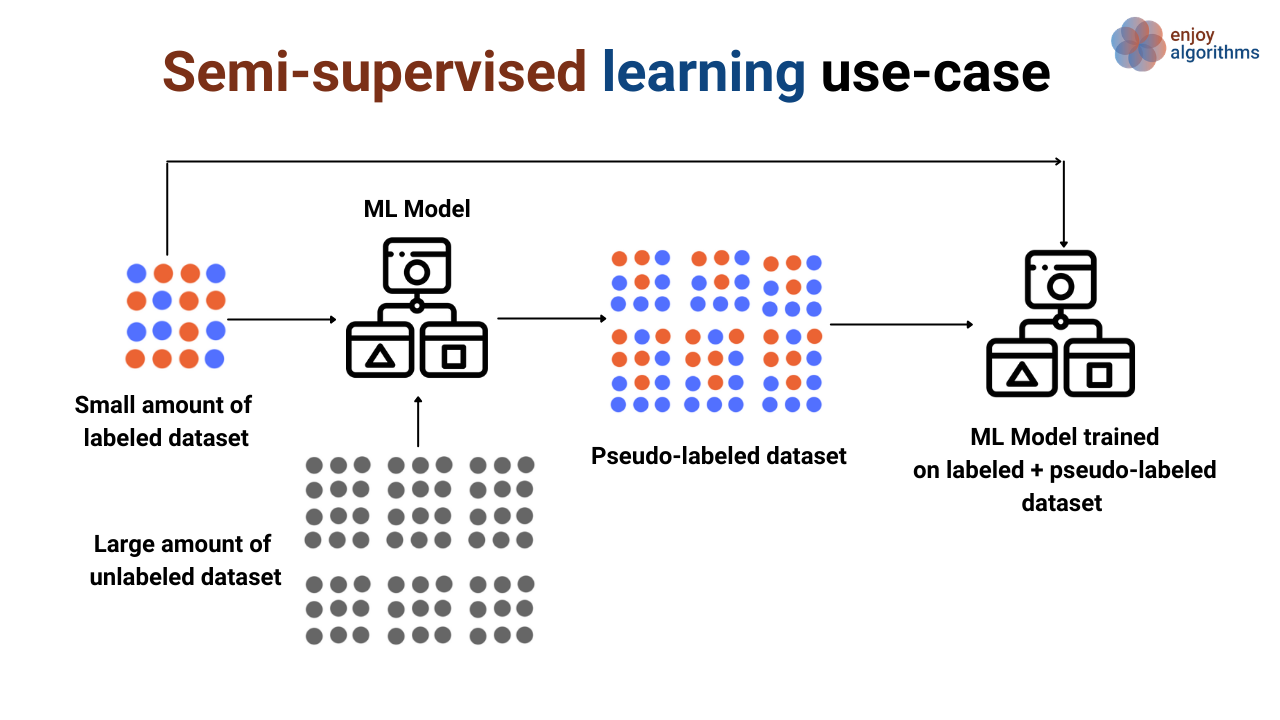

Semi-Supervised Learning

Semi-Supervised Learning involves problems where a large amount of input data (X) is available, but only a portion is labelled (Y). For example, a dataset of images where some are labelled and some are not.

In the previous example, this scenario falls under Semi-Supervised Learning if the input data has explicit labels for the circle and triangle but not for the rectangle and hexagon. Most real-world data lie in this category, as labelling data is time-consuming and requires expert human resources.

Examples of Semi-Supervised Learning algorithms are Self-training, Co-training, Multi-view learning, Transductive SVM, and Graph-based semi-supervised Learning.
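Self-training, the first technique listed, can be sketched in a few lines: train on the labelled portion, pseudo-label the unlabelled sample the model is most confident about, and repeat. The nearest-centroid base classifier, the distance-based confidence measure, and the toy data are simplifying assumptions; in practice, self-training wraps whatever classifier suits the problem.

```python
import math

def class_centroids(labelled):
    """Mean feature vector of each class in the labelled set."""
    groups = {}
    for x, y in labelled:
        groups.setdefault(y, []).append(x)
    return {y: tuple(sum(col) / len(xs) for col in zip(*xs)) for y, xs in groups.items()}

def nearest_class(x, centroids):
    """Predict the class whose centroid is closest to x."""
    return min(centroids, key=lambda y: math.dist(x, centroids[y]))

def self_train(labelled, unlabelled):
    """Repeatedly pseudo-label the unlabelled point the current model is most confident about."""
    labelled, unlabelled = list(labelled), list(unlabelled)
    while unlabelled:
        centroids = class_centroids(labelled)  # (re)train the nearest-centroid model
        # Confidence proxy: a smaller distance to the predicted class centroid = more confident.
        x = min(unlabelled, key=lambda p: math.dist(p, centroids[nearest_class(p, centroids)]))
        labelled.append((x, nearest_class(x, centroids)))  # accept the pseudo-label
        unlabelled.remove(x)
    return labelled

# Hypothetical data: only one labelled sample per class, four unlabelled samples.
labelled_data = [((0.0, 0.0), "circle"), ((10.0, 10.0), "triangle")]
unlabelled_data = [(1.0, 0.5), (2.0, 1.5), (9.0, 9.5), (8.0, 8.0)]
result = dict(self_train(labelled_data, unlabelled_data))
print(result)
```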

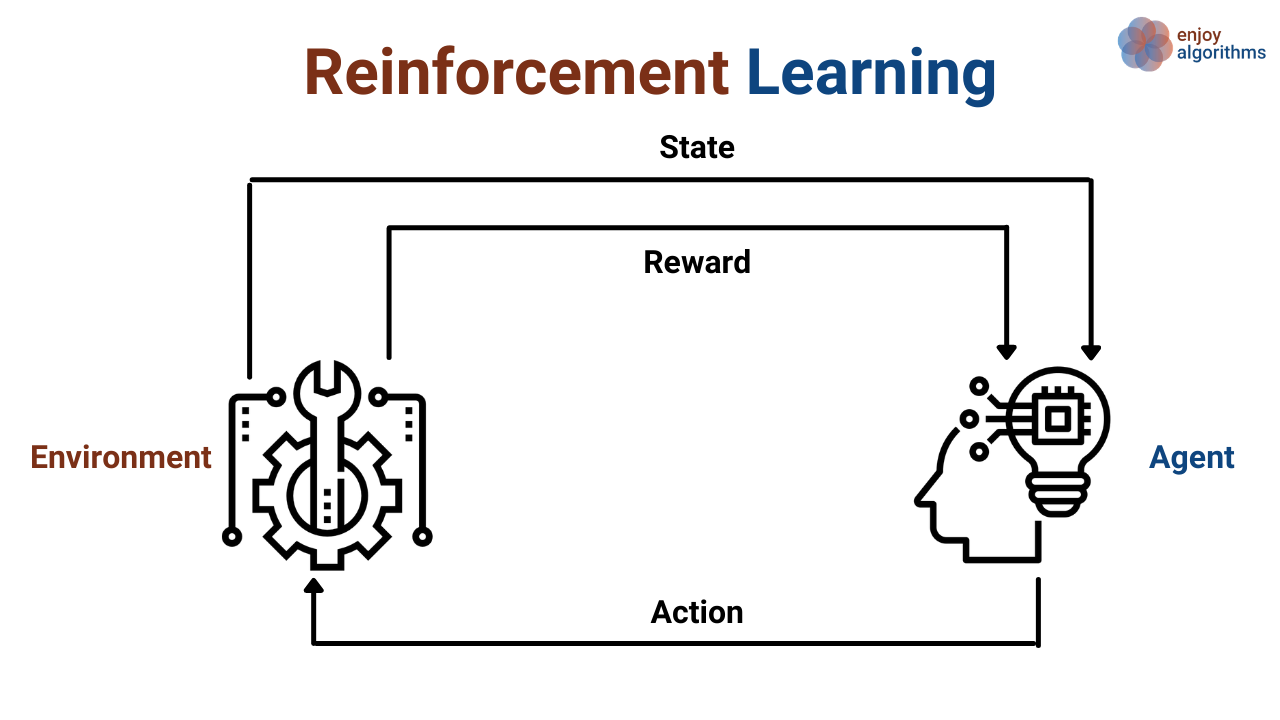

Reinforcement Learning (RL)

In Supervised Learning, we provide the learning algorithm with input-output pairs to train the model. In contrast, Reinforcement Learning involves training an agent to perform actions within an environment (often a simulated one) to achieve a certain goal. For example, if we train a machine to play chess, the chessboard is the environment, and the machine is the agent.

The rules of the environment determine the possible actions the agent can take at any given stage; in chess, these are the legal moves. The agent selects an action based on the current state of the environment and receives a reward or a penalty based on its choice. The algorithm aims to maximize the total reward (and thus minimize penalties) by making the best possible moves, and the agent improves its performance through repeated interactions with the environment.

Some other examples of Reinforcement Learning are:

- Training autonomous robots to navigate and perform tasks

- Optimizing advertisement placement and recommendation systems

- Controlling autonomous vehicles, such as self-driving cars

- Optimizing energy consumption in smart homes

Some popular reinforcement learning algorithms are Q-Learning, Deep Q-Networks (DQN), etc.
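The reward-driven loop described above can be sketched with tabular Q-Learning on a made-up five-cell corridor, where the agent starts in cell 0 and the goal is cell 4. The environment, its rewards (+1 at the goal, -0.01 for every other move), and the hyperparameters are illustrative assumptions; DQN replaces the table `q` with a neural network but keeps the same kind of update target.

```python
import random

N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # move left or move right

def step(state, action):
    """Hypothetical corridor environment: +1 for reaching the goal, -0.01 for any other move."""
    nxt = min(max(state + action, 0), N_STATES - 1)  # walls at both ends
    reward = 1.0 if nxt == GOAL else -0.01
    return nxt, reward, nxt == GOAL

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore sometimes, otherwise take the best-known action.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward, done = step(state, action)
            # Move Q(s, a) towards the reward plus the discounted best future value.
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(policy)  # [1, 1, 1, 1]: the agent learns to always move right
```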

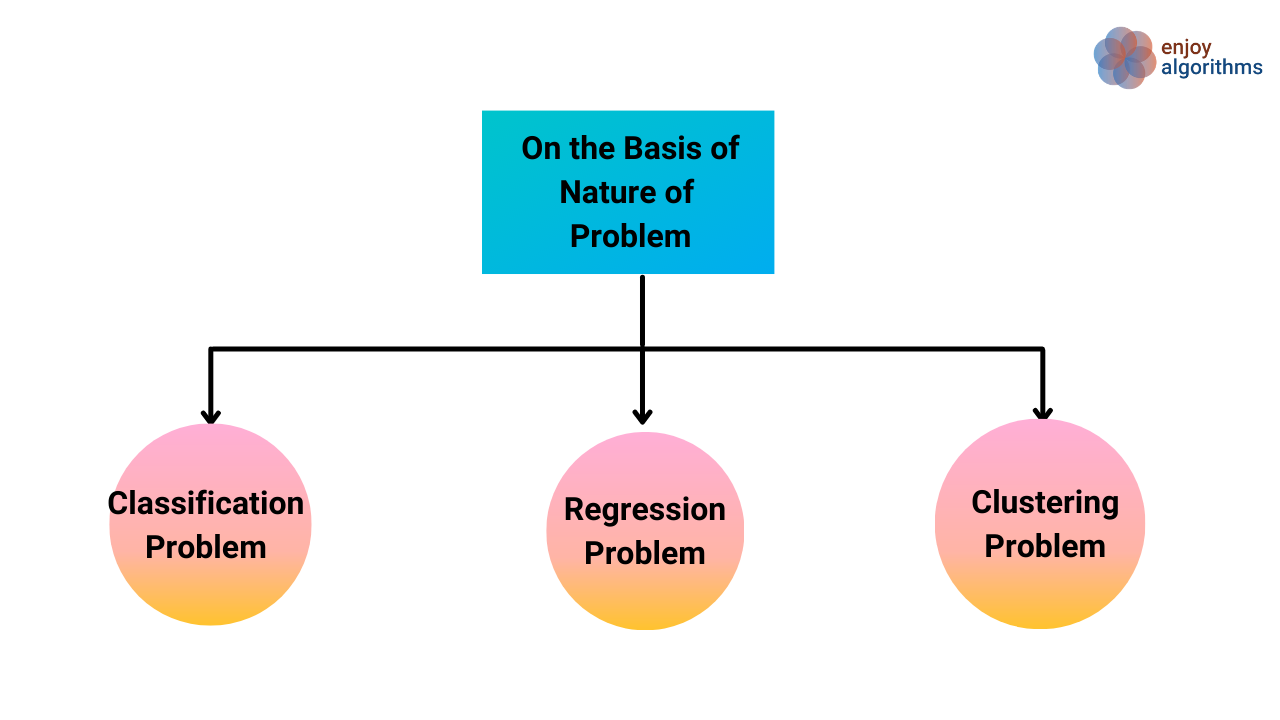

Types of machine learning models based on the nature of the problem

Machine learning problems can be classified into three categories based on the problem being solved.

Classification Problem

Classification is a machine learning problem that involves assigning class labels to input samples in a given problem domain. For example, classifying images into two categories, such as "Dog" and "Not a Dog", is a simple classification problem. The goal is to teach algorithms to assign the appropriate class label accurately based on the input data. Other examples of classification problems are spam email classification and fraud detection in financial transactions.
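A minimal sketch of a binary classifier: logistic regression trained with batch gradient descent on a single hypothetical feature (the number of suspicious words in an email). The data, learning rate, and number of epochs are illustrative assumptions; the point is that the trained model maps each input to one of two class labels.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(xs, ys, lr=0.1, epochs=2000):
    """Fit p(spam | x) = sigmoid(w * x + b) by batch gradient descent on the log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the average log-loss with respect to w and b.
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(sigmoid(w * x + b) - y for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical data: suspicious-word count per email; 1 = spam, 0 = not spam.
xs = [0, 1, 1, 2, 6, 7, 8, 9]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train_logistic(xs, ys)

def classify(x):
    """Assign a class label by thresholding the predicted probability at 0.5."""
    return "spam" if sigmoid(w * x + b) >= 0.5 else "not spam"

print(classify(1), classify(8))
```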

Regression Problem

Regression is a type of machine learning problem involving predicting continuous variables. A simple example of this would be predicting the temperature of a city, where the temperature can have any numerical value ranging from -50 to 50 degrees Celsius. Some other examples of Regression Problems are:

- Predicting housing prices based on location, square footage, and other factors.

- Forecasting stock prices based on past performance and economic indicators.

- Estimating the relationship between car performance and engine specifications.

- Determining the relationship between the weather and crop yields.
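For regression, the output is a continuous number rather than a class. The sketch below fits a straight line with ordinary least squares, using hypothetical house sizes (square feet) and prices (in thousands); real housing models would use many more features.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept with one input feature."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # slope = covariance(x, y) / variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data: house size (square feet) vs price (thousands).
areas = [1000, 1500, 2000, 2500]
prices = [250, 350, 450, 550]
slope, intercept = fit_line(areas, prices)
predicted = slope * 1800 + intercept  # a continuous value, not a class label
print(slope, intercept, predicted)
```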

Clustering Problem

Clustering is a problem that involves using machine learning algorithms to divide a set of data samples into groups of similar samples. A straightforward example would be grouping lemons based on their size. While clustering resembles classification, the critical difference between the two is that classification is a supervised learning problem (class labels are known during training), whereas clustering is an unsupervised learning problem (no labels are available).

Some examples of clustering problems are:

- Cluster customers into distinct groups based on the similarities of customer data.

- Divide the image into multiple segments, each corresponding to a different object or region in the image.

- Separate clusters of normal behaviour from data points that do not fit into any cluster (anomalies).

- Cluster stocks into groups based on their behaviour and performance.

Types of machine learning models based on the nature of the algorithm

Machine learning can be categorized into three types based on the nature of the algorithm used in the machine learning process.

Classical Machine Learning

Algorithms that use statistical and mathematical equations to analyze the relationships between input and output data fall under Classical (or Statistical) Machine Learning. Many of these algorithms have the advantage of being explainable, i.e., they can provide reasons for the predictions they make for a given input.

Some examples of Classical Machine Learning algorithms include K-Means, Decision Trees, Random Forests, Support Vector Machines (SVM), and Linear Regression.
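The explainability of classical models is easiest to see in a one-level decision tree (a "decision stump"): the learned model is a single human-readable rule. The transaction amounts and fraud labels below are hypothetical, and `fit_stump` is an illustrative helper, not a library API.

```python
def fit_stump(xs, ys):
    """Find the threshold t that best separates the labels with the rule 'x > t -> 1'."""
    best_t, best_errors = None, len(xs) + 1
    for t in sorted(set(xs)):
        # Count training samples that the rule 'x > t' would misclassify.
        errors = sum((x > t) != bool(y) for x, y in zip(xs, ys))
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t, best_errors

# Hypothetical transactions: amount and whether it was fraudulent (1) or not (0).
amounts = [20, 35, 50, 60, 900, 1200, 1500]
is_fraud = [0, 0, 0, 0, 1, 1, 1]
threshold, errors = fit_stump(amounts, is_fraud)
print(f"Rule: flag as fraud if amount > {threshold} (training errors: {errors})")
# Rule: flag as fraud if amount > 60 (training errors: 0)
```

The printed rule is the whole model, which is exactly why such models are easy to explain.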

Neural Networks

Neural Network algorithms are inspired by the structure and function of the human brain. These algorithms use a complex mathematical model with many trainable parameters, known as weight and bias matrices, which are learned from training data. Neural networks seem quite promising, but they have limitations: as the complexity of the problem increases, simple neural networks find it difficult to capture complex dependencies such as temporal or spatial dependencies.
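To make "weight and bias matrices" concrete, here is a forward pass through a tiny fully connected network with two inputs, three hidden units, and one output. The weights are hand-picked placeholders (training would adjust them, typically with gradient descent and backpropagation), and the sketch also counts the trainable parameters.

```python
def dense(x, weights, bias):
    """One fully connected layer: output_j = sum_i x_i * W[i][j] + b_j."""
    return [sum(xi * weights[i][j] for i, xi in enumerate(x)) + bias[j]
            for j in range(len(bias))]

def relu(v):
    return [max(0.0, a) for a in v]

# Hypothetical 2-input, 3-hidden-unit, 1-output network with hand-picked weights.
W1 = [[0.5, -0.2, 0.1],
      [0.3, 0.8, -0.5]]       # weight matrix, shape 2 x 3
b1 = [0.0, 0.1, 0.2]          # one bias per hidden unit
W2 = [[1.0], [-1.0], [0.5]]   # weight matrix, shape 3 x 1
b2 = [0.05]

def forward(x):
    hidden = relu(dense(x, W1, b1))
    return dense(hidden, W2, b2)

n_params = sum(len(row) for row in W1) + len(b1) + sum(len(row) for row in W2) + len(b2)
print(forward([1.0, 2.0]), n_params)  # approximately [-0.35] and 13
```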

Temporal dependencies refer to the dependence of the input sample at one time step (t1) on the input sample at another time step (t2). In contrast, spatial dependencies refer to the dependence of an input value at one location on the input values at nearby locations, such as neighbouring pixels in an image.
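One simple way to quantify temporal dependence is the lag-1 autocorrelation, which measures how strongly the value at time t is related to the value at time t - 1. The two series below are made-up examples: a steadily rising signal and one that flips sign at every step.

```python
def lag_autocorrelation(series, lag=1):
    """Sample autocorrelation between x[t] and x[t - lag]."""
    n = len(series)
    mean = sum(series) / n
    var = sum((x - mean) ** 2 for x in series)
    cov = sum((series[t] - mean) * (series[t - lag] - mean) for t in range(lag, n))
    return cov / var

rising = [20, 21, 22, 23, 24, 25]      # hypothetical hourly temperatures
alternating = [1, -1, 1, -1, 1, -1]    # each value reverses the previous one
r_rising = lag_autocorrelation(rising)
r_alternating = lag_autocorrelation(alternating)
print(r_rising, r_alternating)  # 0.5 and about -0.833
```

A value far from zero means the current sample depends strongly on the previous one, which is the kind of dependency sequence models are designed to capture.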

Deep Learning

The basic principle of Deep Learning is similar to that of neural networks, but with improved, deeper architectures that overcome the limitations of simple neural networks. Deep learning algorithms can learn spatial and temporal relationships within the training data. However, the main disadvantage of these algorithms is that they are not easily explainable.

Some examples of deep learning algorithms include Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Long Short-Term Memory networks (LSTMs).
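The spatial relationships that CNNs capture come from the convolution operation: each output value is computed from a small neighbourhood of input pixels, and the same kernel weights are reused at every position. In the sketch below, the 4x4 image and the edge-detecting kernel are hand-picked assumptions; in a real CNN, the kernel weights are learned.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation (what CNN 'convolution' layers compute), no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    # Each output cell combines only the kh x kw neighbourhood under the kernel.
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

# Hypothetical 4x4 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
# Hand-picked vertical-edge kernel; a CNN would learn such weights from data.
kernel = [[-1, 1],
          [-1, 1]]
print(conv2d(image, kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```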

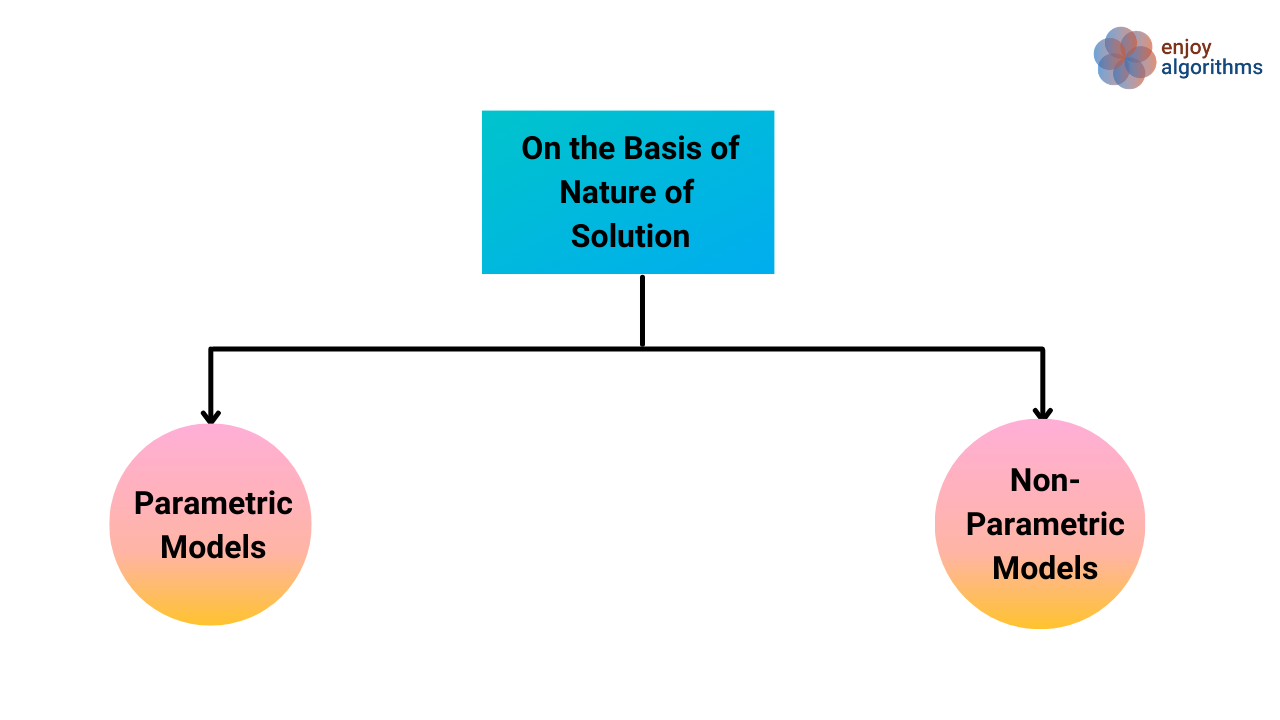

Types of machine learning models based on the nature of the solution

We can classify machine learning algorithms into two categories based on the nature of the solution.

ML algorithms are designed to learn from historical input data, make inferences from that data, and predict the output for future inputs. To predict the output, the model can take two forms:

Parametric Models

Parametric models need only the new input and their learned parameters to make a prediction; once training is finished, the training data itself is no longer needed. These models rely on patterns observed in the training data and assume the same patterns will apply to new, unseen data. Examples of parametric models include linear regression and neural networks.

Parametric models have a fixed number of parameters that does not change with the number of training samples. For example, when learning a straight-line function, the parameters to be learned are the "slope" and the "intercept", which are always two in number. The values of these parameters may change, but the number of parameters remains constant.

Non-Parametric Models

In non-parametric models, the output prediction depends on both the input features and the stored training data. These models derive the predicted output value from the output values of similar samples in the training data. Examples of non-parametric algorithms include K-Nearest Neighbors (KNN) and Decision Trees. KNN remembers all the samples in the training dataset, so the amount of information it must store (its effective parameters) grows as the number of training samples grows.

It is a common misconception that non-parametric models have no parameters. This is not the case: non-parametric models do have parameters, but their number depends on the number of training samples. If the number of samples increases or decreases, the number of parameters that need to be learned changes as well.
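The difference can be sketched by comparing a straight-line model with a KNN regressor and counting the numbers each model must keep after training. Both classes are hypothetical illustrations written for this comparison, and treating stored training samples as "parameters" follows the framing used above.

```python
class LineModel:
    """Parametric: always stores exactly two numbers (slope and intercept)."""
    def fit(self, xs, ys):
        n = len(xs)
        mx, my = sum(xs) / n, sum(ys) / n
        self.slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
                      / sum((x - mx) ** 2 for x in xs))
        self.intercept = my - self.slope * mx
        return self

    def stored_values(self):
        return 2

class KNNRegressor:
    """Non-parametric: keeps every training sample and averages the k nearest outputs."""
    def __init__(self, k=2):
        self.k = k

    def fit(self, xs, ys):
        self.samples = list(zip(xs, ys))
        return self

    def predict(self, x):
        nearest = sorted(self.samples, key=lambda s: abs(s[0] - x))[: self.k]
        return sum(y for _, y in nearest) / len(nearest)

    def stored_values(self):
        return 2 * len(self.samples)  # one (x, y) pair per training sample

for n in (10, 100, 1000):
    xs = list(range(n))
    ys = [2 * x + 1 for x in xs]
    print(n, LineModel().fit(xs, ys).stored_values(), KNNRegressor().fit(xs, ys).stored_values())
# 10 2 20
# 100 2 200
# 1000 2 2000
```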

Types of machine learning models based on the nature of output data

Machine learning can be classified into two categories based on the nature of the output.

Probabilistic Models

Probabilistic models produce their outputs as probabilities, indicating their confidence in the prediction. For example, in a classification problem, the algorithm predicts a label with a certain degree of confidence: a model might look at an image and report a 60% chance that a dog is present. CNNs with a softmax output layer are one example of a probabilistic model.

In probabilistic models, we set a decision threshold. If the model's confidence is greater than this threshold, such as 60%, we consider that the model has predicted that class; otherwise, it has not. The value of this threshold can be adjusted according to the user's needs.
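The sketch below shows how a softmax layer can turn raw class scores into probabilities and how a decision threshold then turns a probability into a yes/no prediction. The scores and threshold values are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_with_threshold(prob, threshold=0.6):
    """Accept the class only if the model's confidence exceeds the threshold."""
    return prob > threshold

probs = softmax([2.0, 1.0])  # hypothetical scores for ["dog", "not a dog"]
print([round(p, 3) for p in probs])  # [0.731, 0.269]
print(predict_with_threshold(probs[0], 0.6), predict_with_threshold(probs[0], 0.8))  # True False
```

Raising the threshold makes the model predict "dog" less often but with higher confidence, which is the trade-off the threshold lets users tune.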

Non-Probabilistic Models

This category of models predicts the output but does not provide a measure of confidence in the prediction. External methods can, however, measure the error between the predicted and actual values. Decision Trees and Support Vector Machines (SVM) are examples of models that fall under this category.

In regression problems, the model directly provides the desired output in continuous form but does not indicate its confidence level in the prediction. For example, if the model predicts that tomorrow's temperature will be 24.7 degrees Celsius, it does not specify its confidence in that prediction.
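Mean absolute error (MAE) and root mean squared error (RMSE) are two common external measures of how far a non-probabilistic model's predictions are from the actual values. The temperatures below are made-up numbers for illustration.

```python
import math

def mae(actual, predicted):
    """Mean absolute error: the average size of the prediction errors."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Root mean squared error: penalises large errors more heavily than MAE."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

actual_temps = [24.0, 22.5, 26.0, 25.0]      # hypothetical observed temperatures (°C)
predicted_temps = [24.7, 22.0, 27.0, 25.0]   # hypothetical model outputs (°C)
print(mae(actual_temps, predicted_temps), rmse(actual_temps, predicted_temps))
```

These scores describe the model's error over a whole test set; they still say nothing about confidence in any single prediction, such as the 24.7 °C forecast.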

Critical questions to explore

- How can the same model be classified as both a supervised model and a classification model?

- What distinguishes supervised Learning from unsupervised Learning?

- Can you explain the difference between classification and clustering?

- How does Reinforcement Learning differ from supervised, unsupervised, and semi-supervised Learning?

- How are Deep Learning and Neural Networks different?

Conclusion

Having a clear understanding of the different types of machine learning algorithms is crucial for the success of a machine learning project. Each algorithm has its strengths, weaknesses, and areas of applicability. Understanding these differences helps to select the most appropriate algorithm for a given problem and avoid common mistakes.


Enjoy Machine Learning, Enjoy Algorithms!