Top Historical Facts in Machine Learning

To learn a new subject, it helps to know how it started. Every field of computer science has its own history, shaped by the challenges that earlier researchers faced, and their work is what makes our journey easier today. This article discusses 10 of the most interesting historical events that are considered turning points in the history of AI and Machine Learning.

Creation of a machine learning algorithm to play checkers

In 1952, Arthur Samuel, working at IBM’s Poughkeepsie Laboratory, began developing one of the first Machine Learning programs: a program that played checkers. In 1955, he completed a version that combined heuristic search with a memory of previously seen positions, so that it could learn from past experience. By the mid-1970s, advanced versions of the program were strong enough to challenge skilled human players.

Invention of the perceptron

In the late 1950s, an American psychologist, Frank Rosenblatt, introduced the perceptron, one of the first neural networks. Its hardware implementation, the Mark I Perceptron, stored its weights in potentiometers and was built for visual recognition tasks. The event created a lot of public hype, and The New York Times wrote: “the Navy [has] revealed the embryo of an electronic computer today that it expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence.” But people soon noticed its limitations: a single-layer perceptron can only separate classes with a straight line, so it cannot learn even the simple XOR function.

Mark I Perceptron displayed at the Smithsonian museum.
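The XOR limitation mentioned above can be illustrated with a small experiment. The following is a minimal sketch in plain Python, not Rosenblatt's original procedure: the function name `train_perceptron`, the learning rate, and the epoch limit are illustrative assumptions. It applies the classic perceptron update rule to the AND function (linearly separable) and to XOR (not linearly separable).

```python
def train_perceptron(samples, epochs=100, lr=1.0):
    """Train a single-layer perceptron with a step activation.

    Returns (weights, bias, converged). `converged` is True only if a full
    pass over the data produced no classification mistakes.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for (x1, x2), target in samples:
            # Step activation: output 1 if the weighted sum is positive.
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            err = target - pred
            if err != 0:
                # Perceptron rule: nudge the weights toward the target.
                w[0] += lr * err * x1
                w[1] += lr * err * x2
                b += lr * err
                mistakes += 1
        if mistakes == 0:
            return w, b, True
    return w, b, False


AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_w, and_b, and_ok = train_perceptron(AND_DATA)
xor_w, xor_b, xor_ok = train_perceptron(XOR_DATA)

print("AND learned:", and_ok)
print("XOR learned:", xor_ok)
```

On AND, the loop stops once every point is classified correctly. On XOR, no straight line separates the two classes, so the weights keep changing until the epoch limit is reached, however large that limit is.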

Book on fundamental limits of the perceptron

In 1969, an American computer scientist, Marvin Lee Minsky, co-founder of MIT’s AI laboratory, published a book named Perceptrons together with Seymour Papert. The book described the fundamental limits of perceptrons, that is, networks with only an input layer and an output layer. It dampened the hope that researchers could easily overcome these limitations and build more advanced algorithms on top of perceptrons. For the field, this was a real setback.

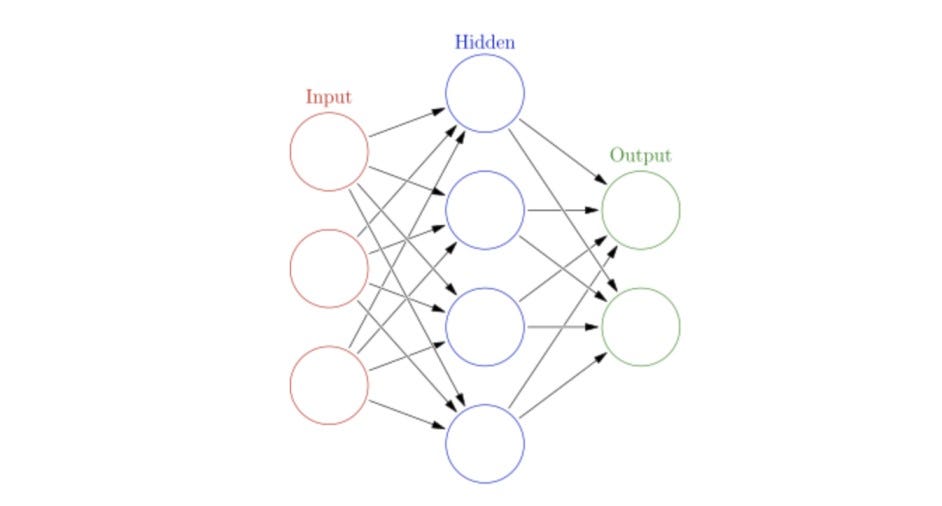

Rediscovery of backpropagation

Early perceptrons were unable to learn even simple non-linear functions. In the 1980s, several researchers independently rediscovered backpropagation, with varying levels of mathematical rigor. The technique made it practical to train networks with one or more hidden layers between the input and output layers, which made Neural Networks much more powerful. But this power came at the cost of computational complexity: computing power was limited, so people could not add many hidden layers. Still, this was the point at which people started to believe that Neural Networks could be used in commercial applications.
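To make the idea concrete, here is a minimal sketch of backpropagation in plain Python, training a network with one hidden layer on XOR. Everything specific in it is an assumption chosen for illustration: three sigmoid hidden units, a squared-error loss, full-batch gradient descent, the learning rate, and helper names such as `init_params`, `forward`, and `train`. It is not the formulation from any particular 1980s paper.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


XOR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
            ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def init_params(n_hidden, rng):
    # Small random weights break the symmetry between hidden units.
    return {
        "w1": [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "w2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(p, x):
    # Hidden activations, then a single sigmoid output.
    h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b) for w, b in zip(p["w1"], p["b1"])]
    y = sigmoid(sum(w * hj for w, hj in zip(p["w2"], h)) + p["b2"])
    return h, y


def mean_squared_error(p, data):
    return sum((forward(p, x)[1] - t) ** 2 for x, t in data) / len(data)


def train(p, data, epochs=5000, lr=0.5):
    n_hidden = len(p["w2"])
    for _ in range(epochs):
        g_w1 = [[0.0, 0.0] for _ in range(n_hidden)]
        g_b1 = [0.0] * n_hidden
        g_w2 = [0.0] * n_hidden
        g_b2 = 0.0
        for x, t in data:
            h, y = forward(p, x)
            # Output error signal for loss 0.5 * (y - t)^2 with a sigmoid output.
            delta_out = (y - t) * y * (1.0 - y)
            g_b2 += delta_out
            for j in range(n_hidden):
                g_w2[j] += delta_out * h[j]
                # Propagate the error backward through hidden unit j.
                delta_h = delta_out * p["w2"][j] * h[j] * (1.0 - h[j])
                g_w1[j][0] += delta_h * x[0]
                g_w1[j][1] += delta_h * x[1]
                g_b1[j] += delta_h
        # Gradient descent step using the gradients summed over the batch.
        for j in range(n_hidden):
            p["w2"][j] -= lr * g_w2[j]
            p["w1"][j][0] -= lr * g_w1[j][0]
            p["w1"][j][1] -= lr * g_w1[j][1]
            p["b1"][j] -= lr * g_b1[j]
        p["b2"] -= lr * g_b2
    return p


rng = random.Random(0)
params = init_params(3, rng)
initial_loss = mean_squared_error(params, XOR_DATA)
train(params, XOR_DATA)
final_loss = mean_squared_error(params, XOR_DATA)

print("loss before training:", initial_loss)
print("loss after training:", final_loss)
for x, t in XOR_DATA:
    print(x, "target", t, "output", round(forward(params, x)[1], 3))
```

How closely the outputs match the XOR targets depends on the random initialization and the number of epochs, so treat the printed values as something to inspect rather than a guaranteed result. The nested loops also hint at the computational cost the paragraph above mentions: every extra hidden unit or layer adds more gradients to compute on every pass.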

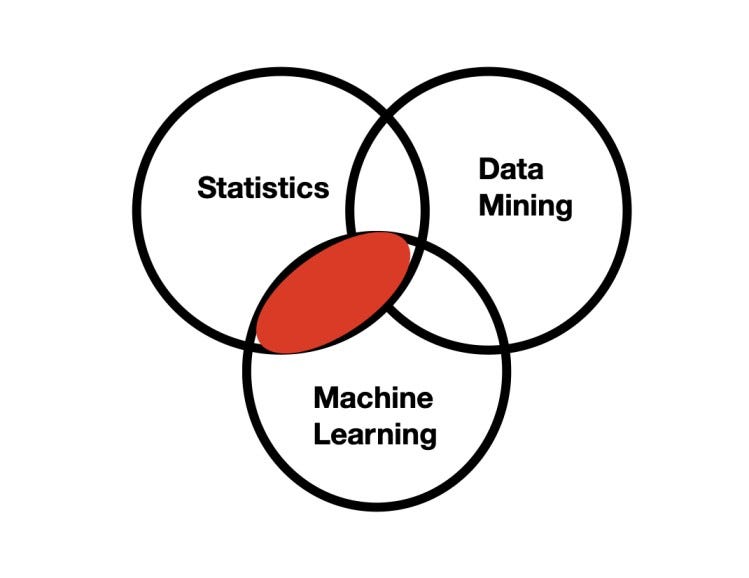

Statistical approaches in machine learning

Because Neural Networks were hard to interpret, had little supporting theory, and demanded a lot of computational power, research on them slowed down at the start of the 1990s, and they started losing their charm (again).

Meanwhile, statistical learning algorithms such as Support Vector Machines (SVMs) came into the picture, and with them programmers could often achieve better performance.

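Deep Blue defeats the world chess champion

Deep Blue was a chess-playing computer created by IBM. It relied mainly on large-scale search and a hand-tuned evaluation function rather than on learning from data. In 1997, Deep Blue defeated the reigning world chess champion, Garry Kasparov, in a six-game match.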

ImageNet classification and computer vision

In 2009, Fei-Fei Li, a computer science professor at Stanford University, and her collaborators created ImageNet, a large visual dataset that reflects the real world. It did a great deal to popularise ML in the computer science community, and many landmark research works were based on it. In 2012, Alex Krizhevsky and his co-authors published a very influential research paper in which they designed a Convolutional Neural Network (CNN), famously known as AlexNet, which drastically reduced the error rate in image recognition. This paper is often considered the beginning of the modern deep learning era. Many new network architectures were later built on this work.

DeepFace algorithm by Facebook

In 2014, a research team at Facebook created the DeepFace algorithm, which beat the previous records for face recognition by computer algorithms. Its accuracy was approximately 97.35% on the Labeled Faces in the Wild benchmark, which reduced the error of the earlier best methods by more than 27%. This accuracy is close to human-level performance.

AlphaGo by Google DeepMind

Researchers at DeepMind, a Google subsidiary, created an ML system named AlphaGo to play Go (an ancient board game that originated in China). In 2016, it played a five-game match against Lee Sedol, one of the world’s strongest players, and won 4 of the 5 games.

Waymo fully autonomous taxi in Phoenix

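In 2017, Waymo, the self-driving company that grew out of Google’s self-driving car project, began offering rides in fully autonomous vehicles in the Phoenix area. Its vehicles rely on ML algorithms at many stages of driving, such as perceiving the surroundings and predicting how other road users will behave. Waymo made history by building a complete product around the concepts of machine learning and deep learning.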

We hope you enjoyed the article. If you have any queries, doubts, or feedback, please share them in the message below or write to us at contact@enjoyalgorithms.com. Enjoy learning, Enjoy algorithms!