Introduction to Distributed System Concepts

What is a distributed system?

A distributed system is a network of independent software components or machines that function as a unified system for the end user. Here several computers (nodes) communicate with one another and share resources to complete tasks that can be complex or time-consuming for a single machine to handle.

Because of their decentralized nature, distributed systems can handle a high volume of requests and serve millions of users. Their components operate in parallel, so even if one component fails, the rest of the system can keep working, although capacity may drop until the failed component is replaced.

For example, a traditional database stored on a single machine may struggle with read and write operations as the amount of data grows. One way to resolve this issue is to split the database across multiple machines. If read traffic is much higher than write traffic, we can also implement master-slave replication, where write requests are processed by the master and read requests are served by one or more slave machines that hold copies of the data.
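The read/write split described above can be sketched in a few lines. This is a minimal in-memory illustration, not a real database driver: the `ReplicatedDatabase` class and its methods are hypothetical names, and replication is done synchronously for simplicity, whereas real systems often replicate asynchronously.

```python
import itertools


class ReplicatedDatabase:
    """Toy read/write router: one master for writes, replicas for reads."""

    def __init__(self, replica_count):
        self.primary = {}
        self.replicas = [{} for _ in range(replica_count)]
        # Cycle through replica indexes so reads are spread evenly.
        self._next_replica = itertools.cycle(range(replica_count))

    def write(self, key, value):
        # All writes go to the master first ...
        self.primary[key] = value
        # ... and are then copied to every replica.
        for replica in self.replicas:
            replica[key] = value

    def read(self, key):
        # Reads never touch the master; they rotate across replicas.
        index = next(self._next_replica)
        return index, self.replicas[index].get(key)


db = ReplicatedDatabase(replica_count=2)
db.write("user:1", "Alice")
print(db.read("user:1"))  # (0, 'Alice')
print(db.read("user:1"))  # (1, 'Alice')
```

Each read is answered by a different replica, so adding replicas increases read capacity without adding load to the machine that handles writes.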

Overall, scalability, performance, reliability, and availability are four key characteristics for designing distributed systems. Let's move forward to discuss each one of them!

Scalability

Distributed systems can continuously evolve to support growing workloads, such as a large number of requests or database transactions. So one of the key goals of a distributed system is to achieve high scalability without a decrease in performance.

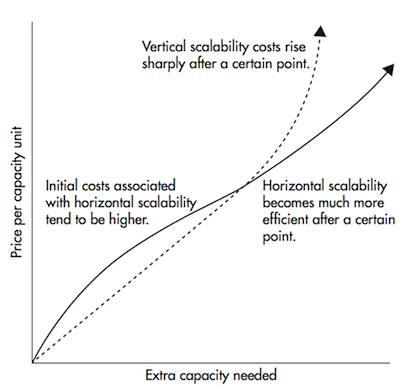

Traditionally, we scale using vertical scaling. Here we increase system capacity by adding more hardware resources (CPU, RAM, etc.) to an existing server. The idea is simple, but it has drawbacks: as scale increases it becomes expensive, the server remains a single point of failure, capacity is limited by what a single machine can hold, and scaling beyond that capacity often requires downtime.

On the other hand, we can increase the capacity of an existing system by using horizontal scaling. Here the idea is to add more servers to the pool. So, if performance degrades due to a large number of requests, we can add more machines to share the load. Horizontal scaling is a common approach for distributed systems because capacity can grow incrementally, which is usually cheaper at large scale than continuing to upgrade a single server.
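As a rough sketch of horizontal scaling, the snippet below estimates how many identical servers a given load needs and shows a pool that grows while it is routing requests. The names `servers_needed` and `ServerPool`, and all the numbers, are assumptions made for illustration.

```python
import math


def servers_needed(total_rps, rps_per_server):
    """Smallest number of identical servers that can absorb total_rps."""
    return math.ceil(total_rps / rps_per_server)


class ServerPool:
    """Round-robin pool that can grow while it is serving requests."""

    def __init__(self, servers):
        self.servers = list(servers)
        self._cursor = 0

    def add_server(self, name):
        # Horizontal scaling: capacity grows by adding a machine.
        self.servers.append(name)

    def route(self):
        # Pick servers in turn so the load is spread across the pool.
        server = self.servers[self._cursor % len(self.servers)]
        self._cursor += 1
        return server


print(servers_needed(total_rps=2500, rps_per_server=400))  # 7

pool = ServerPool(["server-1", "server-2"])
print([pool.route() for _ in range(4)])
# ['server-1', 'server-2', 'server-1', 'server-2']

pool.add_server("server-3")  # scale out under load
print([pool.route() for _ in range(3)])
# ['server-2', 'server-3', 'server-1']
```

With vertical scaling the only option would be to replace the machine with a bigger one. Here the new server starts taking requests as soon as it joins the pool.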

Performance

There are two standard parameters to measure the performance of a distributed system:

- Latency or response time: Delay in obtaining the response to a request.

- Throughput: Number of requests served in a given time.

Both depend on the number of requests that nodes handle and the size of the responses. Performance also depends on factors like network load and the architecture of software and hardware components.
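To make the two measures concrete, here is a small sketch that computes throughput and latency statistics from a list of response times. The sample data and the 2-second window are made up for illustration, and `percentile` is a simple nearest-rank helper rather than a library function.

```python
import math


def percentile(sorted_values, p):
    """Nearest-rank percentile of an already sorted list (0 < p <= 100)."""
    rank = math.ceil(p * len(sorted_values) / 100)
    return sorted_values[rank - 1]


# Hypothetical response times in milliseconds, observed over 2 seconds.
latencies_ms = [12, 15, 11, 240, 14, 13, 16, 12, 18, 15]
window_seconds = 2

ordered = sorted(latencies_ms)
throughput = len(latencies_ms) / window_seconds
mean_latency = sum(latencies_ms) / len(latencies_ms)

print(f"throughput: {throughput} requests/sec")      # 5.0
print(f"mean latency: {mean_latency} ms")            # 36.6
print(f"p50 latency: {percentile(ordered, 50)} ms")  # 14
print(f"p99 latency: {percentile(ordered, 99)} ms")  # 240
```

A single slow request pulls the mean up to 36.6 ms even though a typical request takes about 14 ms, which is why latency is often reported as percentiles rather than as an average.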

Many scalable services are read-heavy, and a large volume of reads can slow the system down. One solution is replication, which spreads reads across multiple copies of the data. To further increase performance, distributed systems can use sharding: the data held by a central database server is divided across several smaller servers called shards, so each server handles only part of the load.
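One common way to decide which shard holds a key is to hash the key and take the result modulo the number of shards. The sketch below assumes this hash-based scheme. The article does not prescribe a particular sharding strategy, and `shard_for` and `ShardedStore` are hypothetical names.

```python
import hashlib


def shard_for(key, shard_count):
    """Map a key to a shard index with a stable hash.

    hashlib is used instead of the built-in hash() because hash() of a
    string can differ between Python processes.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


class ShardedStore:
    """In-memory stand-in for a database split across several shards."""

    def __init__(self, shard_count):
        self.shards = [{} for _ in range(shard_count)]

    def put(self, key, value):
        self.shards[shard_for(key, len(self.shards))][key] = value

    def get(self, key):
        return self.shards[shard_for(key, len(self.shards))].get(key)


store = ShardedStore(shard_count=4)
for user_id in range(1000):
    store.put(f"user:{user_id}", {"id": user_id})

print(store.get("user:42"))                    # {'id': 42}
print([len(shard) for shard in store.shards])  # roughly even sizes
```

Each shard ends up with only a fraction of the keys, so no single server has to handle the full load. One limitation of plain modulo hashing is that changing the number of shards moves most keys to a different shard.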

Scalability vs Performance

Scalability and performance are related, but not the same thing. Performance measures how quickly a system can process a request, while scalability measures how much a system can grow or shrink. For example, consider a system with 200 concurrent users, each sending a request every 5 seconds (on average). In this situation, the system needs to handle a throughput of 200 / 5 = 40 requests/sec. Performance determines how quickly the system responds while serving 40 requests/sec, while scalability determines how many additional users the system can handle and how many more requests it can serve without degrading the user experience.
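The arithmetic in this example, and the difference between the two questions, can be written out as a short sketch. The load-test numbers and the 200 ms latency budget below are invented for illustration.

```python
def required_throughput(users, seconds_between_requests):
    """Average requests/sec generated by `users` concurrent users."""
    return users / seconds_between_requests


# Hypothetical load-test results: concurrent users -> measured latency (ms).
load_test = {200: 120, 400: 130, 800: 180, 1600: 950}
latency_budget_ms = 200

print(required_throughput(200, 5))  # 40.0 requests/sec, as in the text

# Performance question: how fast is the system at today's load?
print(load_test[200])  # 120

# Scalability question: how far can the load grow within the budget?
max_users = max(u for u, ms in load_test.items() if ms <= latency_budget_ms)
print(max_users, required_throughput(max_users, 5))  # 800 160.0
```

In this made-up data set the system performs well at 200 users and scales to 800 users before latency exceeds the budget.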

Reliability

A distributed system is reliable if it can continue delivering its services even when one or more components fail. In such systems, a healthy machine can replace a failing one. For example, an e-commerce store needs to ensure that user transactions are never cancelled due to a machine failure. If a user adds an item to their shopping cart, the system must not lose that data. Here reliability can be achieved through redundancy, i.e., if the server hosting the user's shopping cart fails, another server with an exact replica takes its place. Redundancy means running extra machines, so there is a cost associated with highly reliable systems.
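The shopping-cart example can be sketched as follows. This is a simplified in-memory model with hypothetical class names (`CartServer`, `ReplicatedCartStore`). It assumes every write is copied to all healthy servers and it does not handle resynchronizing a server that comes back after a failure.

```python
class CartServer:
    """One in-memory server holding shopping carts (hypothetical)."""

    def __init__(self, name):
        self.name = name
        self.alive = True
        self.carts = {}


class ReplicatedCartStore:
    """Keeps a full copy of every cart on each healthy server."""

    def __init__(self, servers):
        self.servers = servers

    def add_item(self, user, item):
        # Write the item to every healthy server (redundancy).
        for server in self.servers:
            if server.alive:
                server.carts.setdefault(user, []).append(item)

    def get_cart(self, user):
        # Serve the read from the first healthy server (failover).
        for server in self.servers:
            if server.alive:
                return server.name, list(server.carts.get(user, []))
        raise RuntimeError("no healthy server available")


primary, backup = CartServer("primary"), CartServer("backup")
store = ReplicatedCartStore([primary, backup])
store.add_item("alice", "book")

primary.alive = False  # simulate a machine failure
print(store.get_cart("alice"))  # ('backup', ['book'])
```

The cart survives the failure because the backup already held an exact replica. The price is that every write is performed twice and a second machine has to be kept running.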

How important is reliability?

Bugs or outages in critical applications can lead to lost productivity and significant costs in terms of lost revenue. Even in noncritical applications, businesses have a responsibility towards their users. Suppose a customer stores important data in an application. After some time, the database is suddenly corrupted and there is no mechanism to restore it from a backup. What would happen? This would likely result in a loss of trust. So it is important to ensure the reliability of the application to avoid these issues.

Availability

Availability refers to the percentage of time that a system or service is operational under normal conditions. It is a measure of how often a system is available for use. In other words, an application that can continue to function for several months without experiencing downtime can be considered highly available.

Service targets are often stated at a high percentile rather than as an average. For example, Amazon has described response time requirements for its internal services in terms of the 99.9th percentile, meaning that 99.9% of requests are expected to be served within the target. Although the remaining requests are only 1 in 1,000, it is important to prioritize them because the slowest requests often come from the company's most valuable customers, who have made many purchases and have a lot of data on their accounts.
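To make availability percentages concrete, the sketch below converts a percentage into the downtime it allows per year. The helper name `downtime_hours_per_year` is an assumption, and a year is taken as 365 days.

```python
HOURS_PER_YEAR = 365 * 24  # ignoring leap years


def downtime_hours_per_year(availability_percent):
    """Hours per year a system may be down at the given availability."""
    return (1 - availability_percent / 100) * HOURS_PER_YEAR


for percent in (99.0, 99.9, 99.99):
    hours = downtime_hours_per_year(percent)
    print(f"{percent}% available -> {hours:.2f} hours of downtime per year")
# 99.0% available -> 87.60 hours of downtime per year
# 99.9% available -> 8.76 hours of downtime per year
# 99.99% available -> 0.88 hours of downtime per year
```

Each additional "nine" cuts the allowed downtime by a factor of ten, which is why very high availability targets are expensive to meet.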

Reliability vs Availability

If a system is reliable, it is available. However, if it is available, it is not necessarily reliable. In other words, high reliability contributes to high availability. Still, it is possible to achieve high availability even with an unreliable system by minimising repair and maintenance time and ensuring that replacement machines are available when needed.

For example, suppose a system had 99.99% availability for the first two years after its launch. Unfortunately, the system was launched without any security testing. Customers were happy, but they never realized that the system was vulnerable to security risks. In the third year, the system experienced a series of security problems that resulted in low availability for long periods, which may have caused financial loss to customers. The system was highly available for two years, but it was never reliable.
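The claim that an unreliable system can still be highly available if repairs are fast can be checked with the standard steady-state formula: availability = mean uptime / (mean uptime + mean repair time). The two systems below are hypothetical and the numbers are chosen only to illustrate the point.

```python
def availability(mean_uptime_hours, mean_repair_hours):
    """Steady-state availability: fraction of time the system is up."""
    return mean_uptime_hours / (mean_uptime_hours + mean_repair_hours)


# Fails every 10 hours on average, but recovers in about 36 seconds.
flaky_but_fast_repair = availability(10, 0.01)

# Fails only every 1,000 hours, but each repair takes 10 hours.
robust_but_slow_repair = availability(1000, 10)

print(f"{flaky_but_fast_repair:.4%}")   # 99.9001%
print(f"{robust_but_slow_repair:.4%}")  # 99.0099%
```

The first system fails a hundred times more often, yet it is more available because each failure is repaired so quickly. It is still the less reliable of the two.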

Other key characteristics of a distributed system

- Manageability: How easy it is to operate and maintain the system. If it takes a lot of time to fix system failures, availability will decrease. So early detection of faults can reduce or prevent downtime.

- Concurrency: Ability of multiple components to access and update shared resources concurrently without interference. Concurrency reduces latency and increases the throughput.

- Transparency: Allows users to view a distributed system as a single, logical device, without needing to be aware of the system architecture. This is an abstraction where a distributed system consisting of multiple components appears as a single system to the end user.

- Openness: Ability to update and scale a distributed system independently. This is about how easy it is to integrate new components or replace existing ones without affecting the overall environment.

- Security: It is crucial because users often send requests to access sensitive data managed by servers. For example, doctors may request records from hospitals, and users may purchase items through an e-commerce website. To protect this data, distributed systems must use secure authentication processes, and they must also be designed to withstand attacks such as denial of service.

- Heterogeneity: Distributed system components may have a variety of differences in terms of networks, hardware, operating systems, programming languages, and implementations by different developers.
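To illustrate the concurrency item above, here is a minimal sketch in which several threads update a shared resource without interfering with each other. It is a single-process analogy that uses a lock. Across machines, the same guarantee would need some form of distributed coordination, which is outside the scope of this sketch. The `Inventory` class is a hypothetical example.

```python
import threading


class Inventory:
    """Shared resource updated by many buyers at once."""

    def __init__(self, stock):
        self.stock = stock
        self._lock = threading.Lock()

    def reserve(self):
        # The lock makes "check stock, then decrement" one atomic step,
        # so two buyers can never reserve the same last item.
        with self._lock:
            if self.stock > 0:
                self.stock -= 1
                return True
            return False


inventory = Inventory(stock=100)
results = []


def buyer():
    for _ in range(50):
        results.append(inventory.reserve())


threads = [threading.Thread(target=buyer) for _ in range(4)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()

print(inventory.stock, results.count(True))  # 0 100
```

Four buyers make 200 attempts in total, exactly 100 succeed, and the stock never goes negative.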

Types of Distributed Systems

One way to classify distributed systems is based on their architecture, which describes the way the components are organized and interact with each other. Some of the common architectures are:

Client-Server Architecture: The most traditional and widely used architecture. In this model, clients send requests to a central server, which processes them and returns the requested information. An example is an email system, where the client is email software such as the Gmail app and the server is Google's mail server.

Peer-to-Peer (P2P) Architecture: In P2P, there are no centralized machines. Each component acts as an independent server and carries out its assigned tasks. Responsibilities are shared among multiple machines called peers, which collaborate to achieve a common goal. An example is file-sharing networks like BitTorrent, where every user acts as both a client and a server. Users can upload files to and download files from each other's computers directly, without the need for a central server.
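The idea that every peer is both a client and a server can be sketched with an in-memory model. This is a toy illustration, not the BitTorrent protocol: the `Peer` class, its methods, and the way the file is split into chunks are all assumptions.

```python
class Peer:
    """A peer that can both serve chunks and download them."""

    def __init__(self, name, chunks=None):
        self.name = name
        self.chunks = dict(chunks or {})  # chunk index -> data
        self.neighbors = []

    def serve_chunk(self, index):
        # Server role: hand out a chunk if this peer has it.
        return self.chunks.get(index)

    def download(self, total_chunks):
        # Client role: ask neighbors for every chunk that is missing.
        for index in range(total_chunks):
            if index in self.chunks:
                continue
            for peer in self.neighbors:
                data = peer.serve_chunk(index)
                if data is not None:
                    self.chunks[index] = data
                    break
        return len(self.chunks) == total_chunks

    def assemble(self):
        return "".join(self.chunks[i] for i in sorted(self.chunks))


a = Peer("a", {0: "dis", 1: "tri"})
b = Peer("b", {2: "but", 3: "ed"})
c = Peer("c")

c.neighbors = [a, b]
print(c.download(total_chunks=4), c.assemble())  # True distributed

a.neighbors = [c]  # c now serves the chunks it just downloaded
print(a.download(total_chunks=4), a.assemble())  # True distributed
```

No central server holds the whole file. Peer `c` first acts as a client and later as a server for peer `a`.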

Advantages of distributed systems

- Reliability: They remain available most of the time even when an individual server fails, because the other servers keep the service operational.

- Scalability: With horizontal scaling, we can add servers independently as the load grows.

- Low latency: We can use replication and place servers close to the user location to reduce the latency.

- Cost-effective: A distributed system is made up of several machines instead of one. Although such systems have high implementation costs, they are usually more cost-effective than a single-machine system at large scale.

- Efficiency: Distributed systems can be efficient because work is divided across multiple machines, each of which can work on part of a problem independently.

Disadvantages of Distributed Systems

- Complexity: Distributed systems are highly complex. Using a large number of machines makes the system scalable, but it also increases the system’s complexity: there are more messages, network calls, devices, user requests, etc.

- Network failure: Distributed systems depend heavily on network calls for communication and data transfer. When the network fails, messages can be lost, mismatched, or delivered out of order, which leads to communication failures and eventually degrades the application’s overall performance.

- Consistency: Because state is spread across many machines, it becomes challenging to synchronise application state and maintain data integrity across the service.

- Management: Many functions, such as load balancing, monitoring, logging, and increased intelligence, need to be added to prevent failures in distributed systems.
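The network failure item mentions messages arriving in the wrong order. One common way to cope with this is to attach a sequence number to each message and buffer anything that arrives early. The sketch below assumes this approach. `ReorderBuffer` is a hypothetical name and the example ignores lost messages.

```python
class ReorderBuffer:
    """Delivers messages in sequence order even if they arrive shuffled."""

    def __init__(self):
        self.next_seq = 0   # sequence number we are waiting for
        self.pending = {}   # early arrivals: seq -> payload

    def receive(self, seq, payload):
        """Accept one message and return payloads now deliverable in order."""
        if seq < self.next_seq or seq in self.pending:
            return []  # duplicate, already seen
        self.pending[seq] = payload
        ready = []
        while self.next_seq in self.pending:
            ready.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return ready


buffer = ReorderBuffer()
print(buffer.receive(1, "world"))  # []  (still waiting for message 0)
print(buffer.receive(0, "hello"))  # ['hello', 'world']
print(buffer.receive(0, "hello"))  # []  (duplicate is ignored)
print(buffer.receive(2, "!"))      # ['!']
```

This kind of bookkeeping is part of the extra complexity that a single-machine system does not need.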

Conclusion

Distributed systems are a necessity of the modern world: new machines need to be added and applications need to scale to keep up with technological advancements and deliver better services. They enable modern systems to offer highly scalable, reliable, and fast services.

If you have any queries or feedback, please write us at contact@enjoyalgorithms.com. Enjoy learning, Enjoy system design!