Machine Learning Interview

- Learn fundamentals of machine learning

- Learn to build machine learning projects

- Learn critical guidance for ML interviews

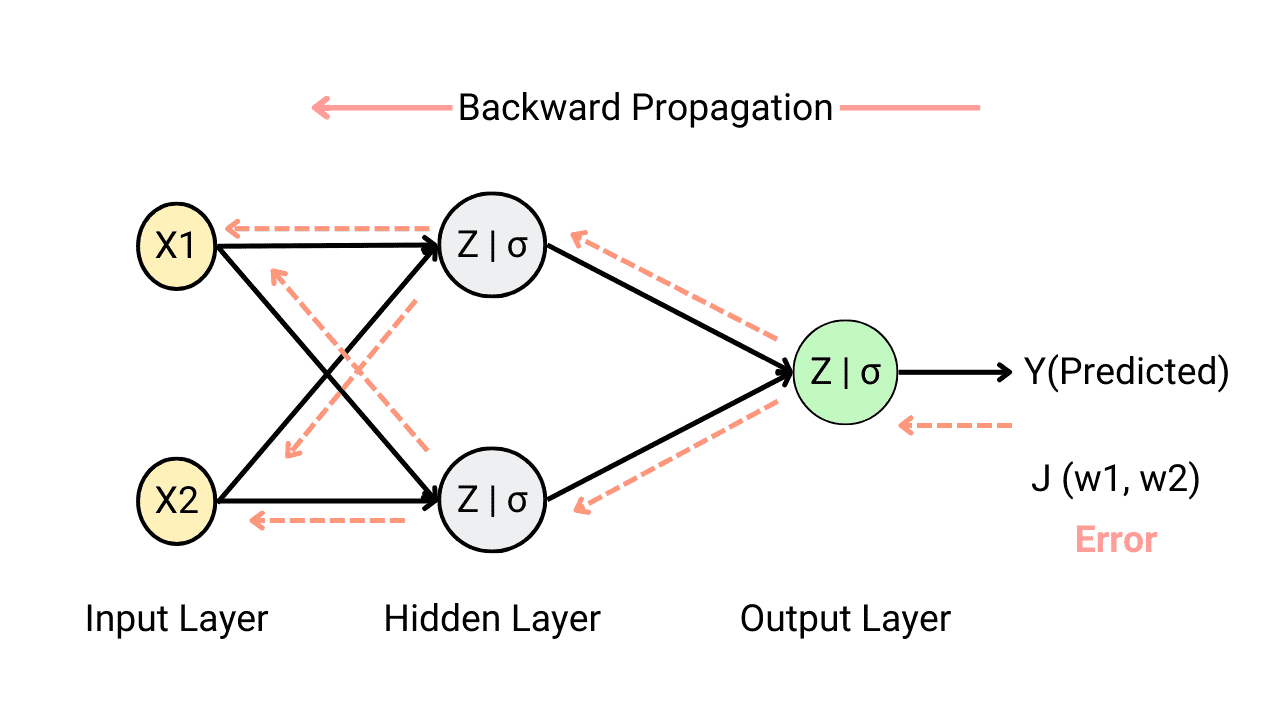

What is backpropagation in neural networks and why do we need it?

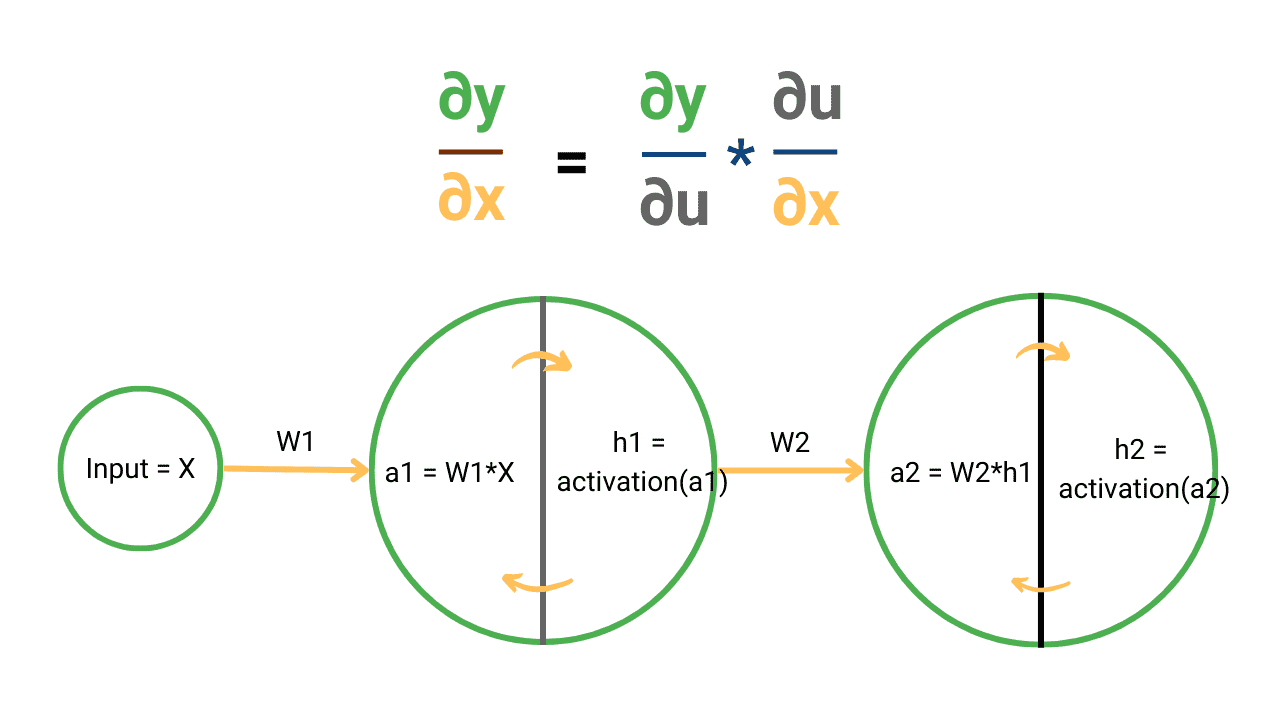

Calculus Chain Rule for Neural Networks and Deep Learning

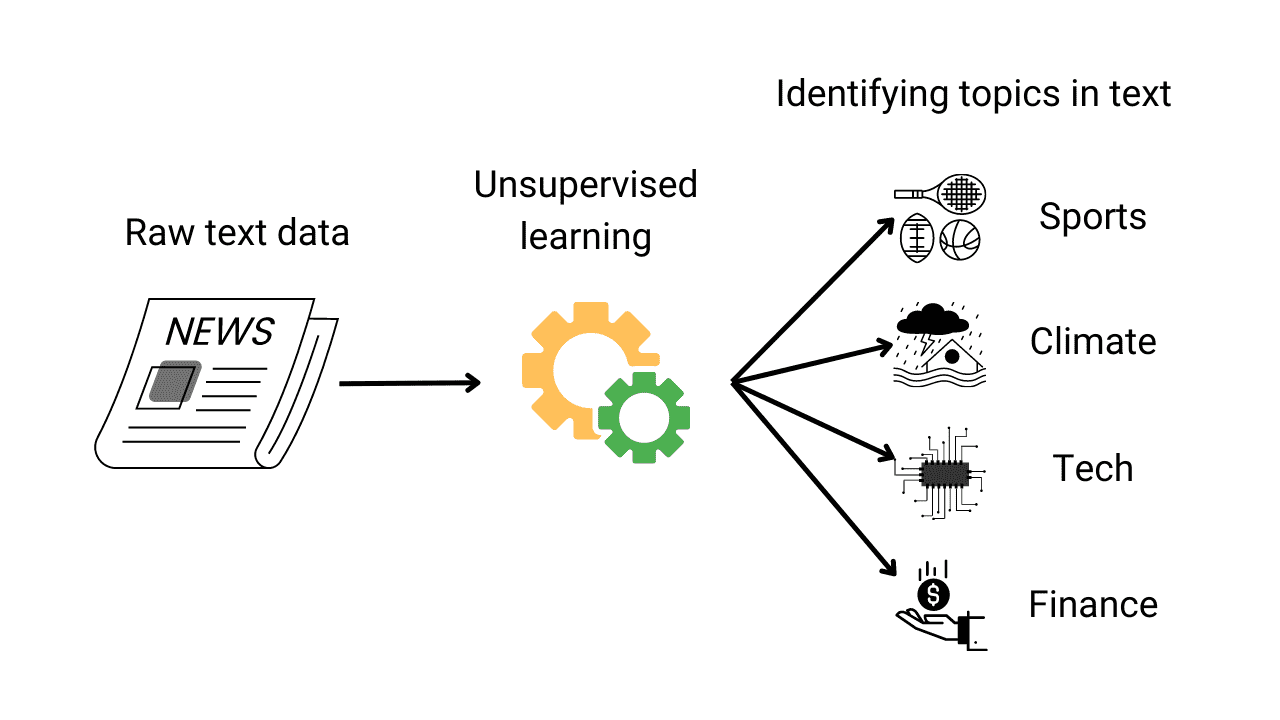

Topic Modelling using LDA and LSA with Python Implementation

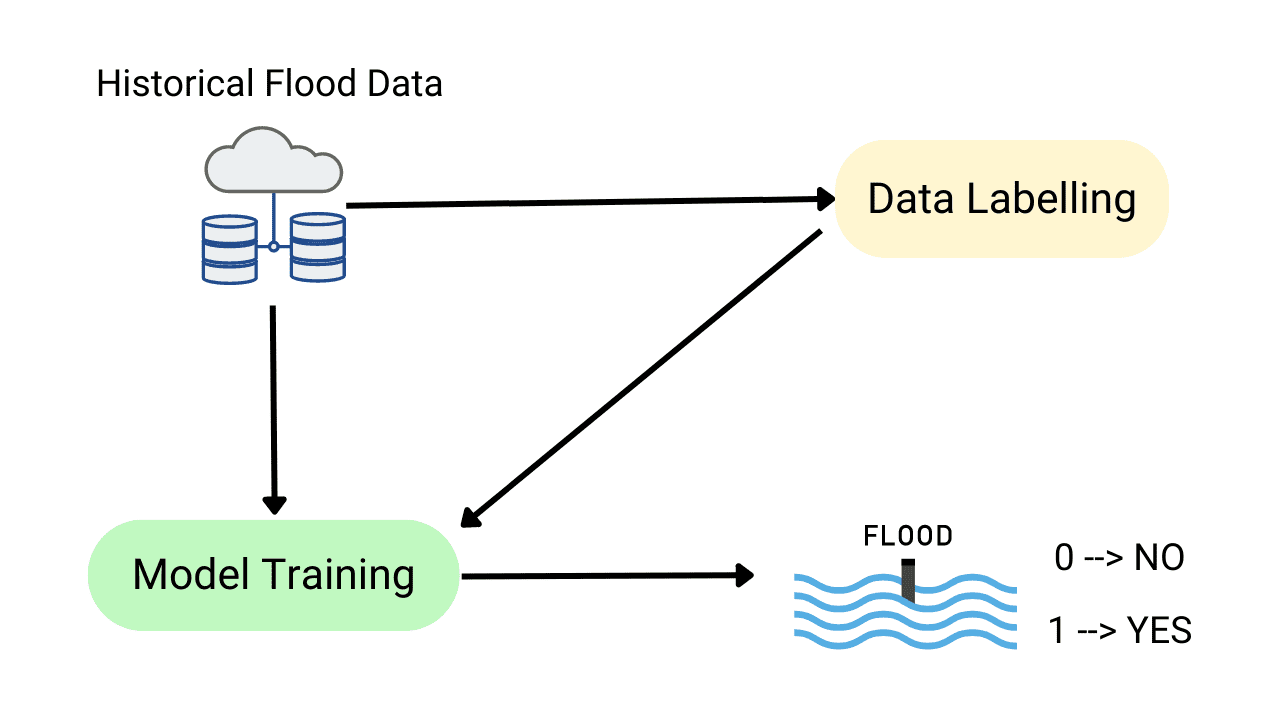

Flood Prediction using Machine Learning Models

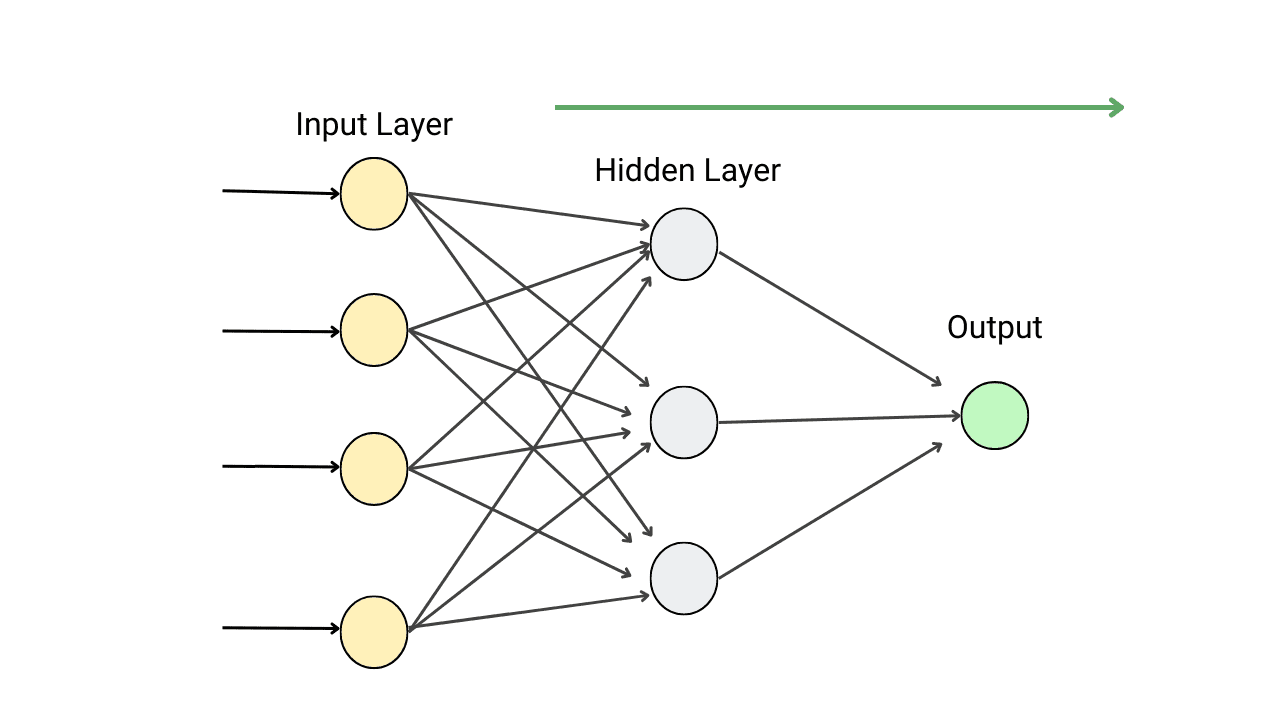

How Does Forward Propagation Work in Neural Networks?

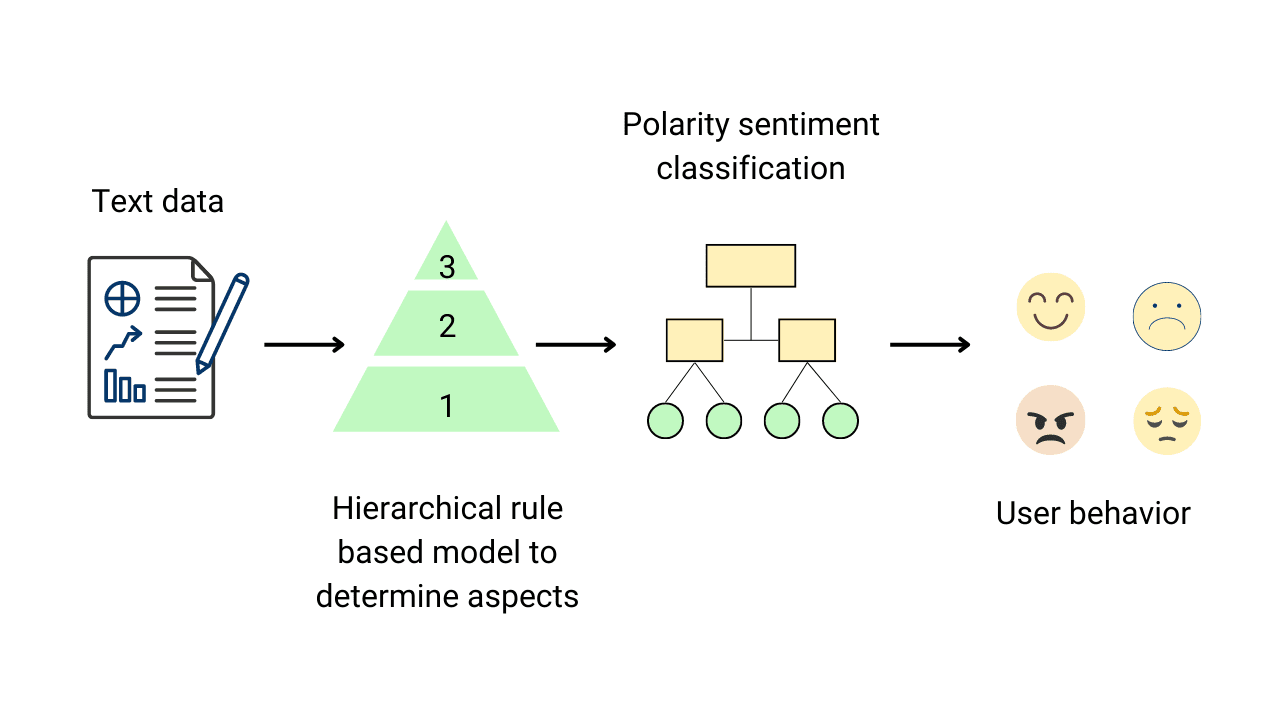

Aspect Based Sentiment Analysis (ABSA) in Python