Caching Strategy: Cache-Aside Pattern

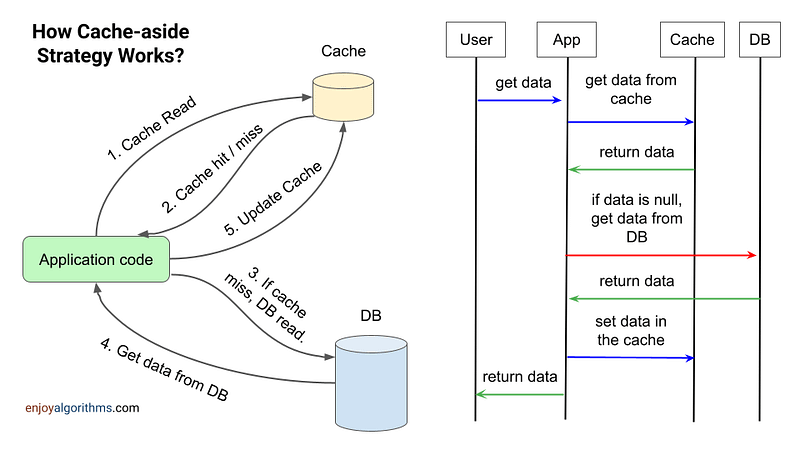

Cache-aside is one of the most commonly used caching strategies. The cache and the database are independent, and the application code is responsible for managing both to maintain data consistency. Let’s understand this from another perspective!

Some applications use read-through or write-through patterns, where the cache system itself defines the logic for updating or invalidating the cache and acts as a transparent interface to the application. If the cache system does not provide these features, the application code is responsible for managing cache lookups, cache updates on writes, and database queries on cache misses.

This is where the cache-aside pattern comes into the picture: the application interacts with both the cache and the database, and the cache doesn’t interact with the database at all. So the cache is “kept aside” as a scalable in-memory data store.

Read operation in cache-aside pattern

To read data, the application first checks the cache. If the data is available (cache hit), the cached data is returned directly to the application. If the data is not available (cache miss), the application code retrieves it from the database, stores it in the cache, and returns it to the user.
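The read flow described above can be sketched as follows. This is a minimal illustration, not a production implementation: the plain dictionaries `cache` and `database`, the `stats` counter, and the function names `query_database` and `read` are all assumptions made for this example.

```python
from typing import Any, Dict, Optional

# Hypothetical stand-ins: plain dicts play the role of the cache and the database.
cache: Dict[str, Any] = {}
database: Dict[str, Any] = {"user:1": {"name": "Asha"}}
stats = {"db_reads": 0}  # counts database lookups so the effect of caching is visible


def query_database(key: str) -> Optional[Any]:
    """Simulate a (slow) database lookup."""
    stats["db_reads"] += 1
    return database.get(key)


def read(key: str) -> Optional[Any]:
    """Cache-aside read: check the cache first, fall back to the database on a miss."""
    if key in cache:
        return cache[key]          # cache hit
    value = query_database(key)    # cache miss: go to the database
    if value is not None:
        cache[key] = value         # populate the cache for later reads
    return value
```

With this sketch, the first `read("user:1")` goes to the database and the second one is served from the cache, so `stats["db_reads"]` stays at 1 after both calls.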

Write operation in cache-aside pattern

Now, the critical question is: How do we perform write operations? For writing data (add, modify, or delete), we can think about these two approaches.

Writing to the database and invalidating the cache (Similar to write-around)

One approach is to update the database and then invalidate (evict) the corresponding entry in the cache, if it exists. This ensures that the next read retrieves the updated data from the database: the read results in a cache miss, and the application fetches the data from the database and adds it to the cache.

Here is one observation: after updating the database, the data is not stored in the cache until the next read request for it. So this strategy loads data into the cache on demand (lazy loading). It makes no assumptions about which data the application will require in advance.

- As highlighted above, the data is not immediately updated in the cache, so the next read results in a cache miss and some additional latency. But subsequent read requests for the same data can be served efficiently from the cache. In simple words, this strategy is beneficial if the read-to-write ratio is very high or the application re-reads the same data frequently after a database write.

- There can be a chance of increased load on the database. For example, if multiple requests trigger cache misses simultaneously, it could lead to a spike in database queries.

There is a critical question: can we first invalidate the cache and then write to the database? If we invalidate the cache first, there is a small window of time in which a user might fetch the data before the database is updated. This results in a cache miss (because the data was removed from the cache), so the earlier version of the data is fetched from the database and added to the cache. Once the database write completes, the cache still holds the old value, which results in stale cache data.
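Here is a minimal sketch of the write-then-invalidate approach, with the ordering discussed above (database first, cache second). The dictionaries `cache` and `database` and the function names are hypothetical stand-ins chosen for this example, not a real cache or database client.

```python
from typing import Any, Dict, Optional

# Hypothetical stand-ins for a real cache and database.
cache: Dict[str, Any] = {"user:1": "old"}
database: Dict[str, Any] = {"user:1": "old"}


def write_and_invalidate(key: str, value: Any) -> None:
    """Update the database first, then evict the cached entry (if any)."""
    database[key] = value   # 1) the database is the source of truth
    cache.pop(key, None)    # 2) evict, so the next read reloads the fresh value


def read(key: str) -> Optional[Any]:
    """Cache-aside read that lazily reloads evicted entries."""
    if key in cache:
        return cache[key]
    value = database.get(key)
    if value is not None:
        cache[key] = value
    return value
```

After `write_and_invalidate("user:1", "new")`, the key is absent from the cache, and the next `read("user:1")` takes a miss, fetches `"new"` from the database, and caches it again.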

Writing to the database and updating the cache immediately (Similar to write-through)

This idea is similar to the write-through strategy: after updating the database, the application also updates the cache with the latest data. This improves subsequent read performance and reduces the likelihood of stale data, which helps keep the cache and the database consistent.

- However, this increases write latency because data needs to be written to both the cache and the database. Nonetheless, this overhead can be acceptable in systems with read-heavy workloads.

- Since cache is updated with the latest data after database update, subsequent read requests are more likely to hit the cache, thereby reducing the number of read requests to the database.

- This approach is commonly used in systems that prioritize data integrity.
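The write-and-update approach can be sketched as below. The `Database` class is a hypothetical in-memory stand-in (its `available` flag only exists to simulate an outage), and a plain dictionary plays the role of the cache. One design choice in this sketch is an assumption on our side: the database is written first, so a failed database write never leaves a value in the cache that the database does not have.

```python
from typing import Any, Dict


class Database:
    """Hypothetical in-memory stand-in for a real database client."""

    def __init__(self) -> None:
        self.rows: Dict[str, Any] = {}
        self.available = True  # flip to False to simulate an outage

    def write(self, key: str, value: Any) -> None:
        if not self.available:
            raise ConnectionError("database unavailable")
        self.rows[key] = value


def write_and_update(key: str, value: Any, database: Database, cache: Dict[str, Any]) -> None:
    """Write to the database, then refresh the cache with the same value."""
    database.write(key, value)  # if this raises, the cache is left untouched
    cache[key] = value          # later reads hit the cache with fresh data
```

A usage example: after `write_and_update("user:1", "v1", db, cache)` both stores hold `"v1"`. If `db.available` is then set to `False`, a second call raises `ConnectionError` and the cache keeps `"v1"`, matching the database.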

Advantages of cache-aside pattern

- Provides flexibility and control to the application code. Due to this, application developers can optimize caching based on the specific needs of the application and decide what data is cached and for how long.

- Cache-aside pattern is resilient to the failure of the cache layer. If the cache layer fails, application can still access data from the database.

- As the application grows and more resources are needed to handle increased traffic, we can add more caching servers to distribute the load. In other words, in the cache-aside pattern, we can easily scale the cache layer independently of the database.

- Due to separation of cache and database, we can use different data models in cache and database.

- With the write-around approach, the cache-aside pattern loads data into the cache on demand. Due to this, the cache contains only data that the application actually requests, which helps make optimal use of cache capacity.

- With the write-through approach, the cache-aside pattern keeps the data in the cache as up to date as possible. In other words, this hybrid strategy reduces the risk of serving outdated or inconsistent data from the cache.

- The execution flow of the code is easy to understand. In addition, the pattern requires very little from the cache provider, so it is easy to migrate from one cache provider to another.

- This can be a good option for scenarios where data access patterns are not easily predictable.
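The resilience point in the list above (the application can still reach the database when the cache layer fails) can be sketched as follows. `UnreliableCache` is a hypothetical class written only for this illustration; a real cache client would raise its own error types, so treat the `ConnectionError` handling here as an assumption.

```python
from typing import Any, Dict, Optional


class UnreliableCache:
    """Hypothetical cache client that can be switched off to simulate an outage."""

    def __init__(self) -> None:
        self.store: Dict[str, Any] = {}
        self.available = True

    def get(self, key: str) -> Optional[Any]:
        if not self.available:
            raise ConnectionError("cache unavailable")
        return self.store.get(key)

    def set(self, key: str, value: Any) -> None:
        if not self.available:
            raise ConnectionError("cache unavailable")
        self.store[key] = value


def read(key: str, cache: UnreliableCache, database: Dict[str, Any]) -> Optional[Any]:
    """Cache-aside read that treats a cache outage like a cache miss."""
    try:
        cached = cache.get(key)
        if cached is not None:
            return cached
    except ConnectionError:
        pass  # cache is down: fall through to the database
    value = database.get(key)
    if value is not None:
        try:
            cache.set(key, value)
        except ConnectionError:
            pass  # could not repopulate the cache; still return the data
    return value
```

The trade-off is that during a cache outage every read goes to the database, so the database must be able to absorb that extra load.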

Limitations of cache-aside pattern

- The responsibility of managing the cache in application code can bring some challenges. Developers need to ensure proper cache handling, including cache invalidation, eviction policies, and handling concurrent requests. This can add complexity and increase the risk of bugs or errors.

- When using lazy loading, data is loaded into the cache only after a cache miss. So if the database is updated frequently, each update invalidates the cached entry and there will be frequent cache misses.

- When using cache-aside with write-through, write latency is higher because data is updated in both the cache and the database. So this strategy is inefficient for write-heavy workloads. In addition, because every write goes to both places, the cache can fill up with data that is never read. This may lead to a high cache miss rate, inefficient use of the cache, or eviction of frequently used data. How to handle this scenario? Explore and think!

In the cache-aside pattern, applications can keep the cached data as up to date as possible. But in a practical scenario, cached data can still be inconsistent with the data in the database. For example, an item in the database can be changed at any time by some external process, and this change will not be reflected in the cache until the cached entry is invalidated or expires and the item is loaded again. This can be a major issue in systems that replicate data across data stores and synchronize frequently.

So application developers should use proper cache invalidation schemes or expiration policies to handle data inconsistency. Here is another example: suppose the cache is updated successfully but the database update fails. The code then needs to implement retries. In the worst case, if the retries also fail, the cache contains a value that the database doesn’t.

Cache-aside as a Local (in-memory) Cache

If an application repeatedly accesses the same data, cache-aside can be used with a local (in-memory) cache. Here the local cache is private to each application instance. Here is a challenge: if multiple instances deal with the same data, each instance might store its own copy in its local cache. Due to this, cached data across instances can become inconsistent. For example, if one instance updates the shared database, the other instances’ caches won’t be automatically updated, resulting in stale data being used.
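The staleness problem described above can be reproduced with a small sketch. `AppInstance` is a hypothetical class for this illustration: each instance shares one database (a plain dictionary here) but keeps its own private local cache.

```python
from typing import Any, Dict


class AppInstance:
    """Hypothetical application instance with a private local cache."""

    def __init__(self, database: Dict[str, Any]) -> None:
        self.database = database               # shared by all instances
        self.local_cache: Dict[str, Any] = {}  # private to this instance

    def read(self, key: str) -> Any:
        if key not in self.local_cache:
            self.local_cache[key] = self.database[key]
        return self.local_cache[key]

    def write(self, key: str, value: Any) -> None:
        self.database[key] = value
        self.local_cache.pop(key, None)  # only this instance's cache is invalidated


shared_db = {"price": 100}
instance_a = AppInstance(shared_db)
instance_b = AppInstance(shared_db)

instance_a.read("price")
instance_b.read("price")          # both instances now cache 100
instance_a.write("price", 120)    # A updates the database and its own cache

fresh = instance_a.read("price")  # A reloads the new value from the database
stale = instance_b.read("price")  # B still serves its old local copy
```

Here `fresh` is 120 while `stale` is still 100, because nothing told instance B to drop its cached copy.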

To address this limitation of local caching, we recommend exploring distributed caching. Here the system maintains a shared cache accessible to all instances of the application, so all instances read and update the same cached data (data consistency). If required, we can also spread the cache across multiple nodes (which improves scalability and fault tolerance).

Lifetime of cached data and cache expiration policy

What will happen when cached data is not accessed for a specified period? As discussed above, there is a chance that the data becomes stale. One solution to this inconsistency problem is to implement an expiration policy in the application code.

In a cache expiration policy, each item in the cache is associated with a time-to-live (TTL) value, which specifies how long the item can remain in the cache before it is considered expired. When an item expires, the application is forced to fetch the latest data from the main data source and update the cache with it. Because this happens at regular intervals, the application can limit how stale the cached data becomes.

But this may result in unnecessary evictions if the data remains valid for longer than the TTL. So the question is: what is the best value for the TTL? The expiration policy should match the access pattern of the data in the application.

- If TTL is too short, there will be a lot of cache misses and applications will frequently need to retrieve data from the database. In other words, low TTL can reduce chances of inconsistencies but can result in high latencies and reduced performance.

- If TTL is too long, then cached data is likely to become stale. In other words, a large TTL improves system performance but increases the chances of inconsistencies.

Two ideas are important in determining an appropriate TTL value: 1) understanding the rate of change of the underlying data, and 2) evaluating the risk of outdated data being returned to your application. For example, we can cache static (rarely updated) data with a longer TTL and dynamic (frequently changing) data with a shorter TTL. This lowers the risk of returning outdated data while still providing a buffer to offload database requests.
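A per-item TTL can be sketched as below. `TTLCache` is a hypothetical class written for this example; it checks expiry lazily on `get`, and the `clock` parameter is an assumption added so that time can be controlled in a test. Many real cache servers offer per-key expiration natively, in which case this logic would live in the cache rather than in the application.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Minimal in-memory cache where every item carries its own expiry time."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._items: Dict[str, Tuple[Any, float]] = {}  # key -> (value, expires_at)

    def set(self, key: str, value: Any, ttl_seconds: float) -> None:
        self._items[key] = (value, self._clock() + ttl_seconds)

    def get(self, key: str) -> Optional[Any]:
        entry = self._items.get(key)
        if entry is None:
            return None                  # never cached
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._items[key]         # expired: drop it and report a miss
            return None
        return value


# Usage with a fake clock: static data gets a long TTL, fast-changing data a short one.
now = [0.0]
ttl_cache = TTLCache(clock=lambda: now[0])
ttl_cache.set("country_list", ["IN", "US"], ttl_seconds=3600)
ttl_cache.set("stock_level", 7, ttl_seconds=5)
now[0] = 10.0  # ten seconds later
```

At that point `ttl_cache.get("country_list")` still returns the list, while `ttl_cache.get("stock_level")` returns `None`, which forces the application to reload the value from the database.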

TTL with Jitter

When caching multiple items, if all items have the same fixed TTL, items cached at around the same time will also expire at around the same time. This can lead to a “cache stampede” or “thundering herd,” where all the expired items are requested from the database at once. Such a sudden surge in requests can put a high load on the database.

To mitigate this problem, the TTL-with-jitter technique (TTL = initial TTL value + jitter) introduces randomness into the TTL values of individual cached items. Instead of having the same fixed TTL for all items, a small random delta or offset is added to each item’s TTL. This effectively spreads out the expiration times of the cached items, so they will not expire simultaneously.
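The formula above (TTL = initial TTL value + jitter) can be sketched in a few lines. The function name `ttl_with_jitter`, the uniform distribution, and the example values (a 300-second base with up to 60 seconds of jitter) are assumptions for illustration; the right jitter range depends on the workload.

```python
import random
from typing import Optional

_default_rng = random.Random()


def ttl_with_jitter(base_ttl: float, max_jitter: float,
                    rng: Optional[random.Random] = None) -> float:
    """Return base_ttl plus a random offset between 0 and max_jitter seconds."""
    generator = rng if rng is not None else _default_rng
    return base_ttl + generator.uniform(0, max_jitter)


# Usage: 100 items cached at the same moment get spread-out expiry times.
seeded = random.Random(42)  # seeded only to make the example repeatable
ttls = [ttl_with_jitter(300, 60, rng=seeded) for _ in range(100)]
```

Every value in `ttls` lies between 300 and 360 seconds, so the items expire gradually over a one-minute window instead of all at once.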

Critical ideas to think about!

- What are various use cases of cache control headers?

- How can we determine the optimal TTL value for different types of data in cache?

- How can we evaluate the effectiveness of expiration policy by monitoring cache hit and miss rates?

- How do you determine the appropriate range for TTL jitter?

- How do you handle the scenario where an item’s TTL expires, but the application doesn’t immediately refresh the cache with fresh data from the main data source?

- How do you address scenarios where cached items have different rates of change in the database?

- How do you handle cases where the main data source experiences significant delays or downtime, and the application is unable to fetch fresh data to update the cache?

Cache Replacement Policy

What will happen when the cache is full? We should implement a cache replacement policy in the application code to handle this situation. The goal is to evict some existing cache entries to make space for new ones. There are two common cache replacement policies:

- LRU (Least Recently Used) Eviction: Least recently accessed items are removed from the cache when it reaches its capacity. If an item hasn’t been accessed for a specified period, it will likely be among the least recently used items (a candidate for eviction).

- LFU (Least Frequently Used) Eviction: Least frequently accessed items are removed from the cache. If an item has not been accessed for a specified period, its frequency of access will be low (a candidate for eviction).
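Of the two policies above, LRU is the simpler one to sketch. The `LRUCache` class below is a hypothetical minimal version built on `collections.OrderedDict` from the Python standard library, which keeps keys in insertion order and lets us move a key to the end when it is used. Many cache servers and libraries provide such eviction policies out of the box, so hand-written code like this is mainly useful for a local in-memory cache.

```python
from collections import OrderedDict
from typing import Any, Optional


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items: "OrderedDict[str, Any]" = OrderedDict()

    def get(self, key: str) -> Optional[Any]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: str, value: Any) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry


# Usage: with capacity 2, touching "a" makes "b" the eviction candidate.
lru = LRUCache(capacity=2)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")
lru.put("c", 3)  # cache is full, so "b" is evicted
```

After these calls, `"a"` and `"c"` are still cached and `lru.get("b")` returns `None`.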

Priming the cache

Instead of waiting for actual data requests from the application to trigger caching, some applications proactively load the cache with relevant data beforehand. This is the idea of cache priming: populating the cache, during startup or initialization, with data that the application is expected to require.

One of the primary reasons for priming the cache is to warm up the system quickly. The reason is simple: when actual requests arrive, there is a higher chance that the requested data is already in the cache. So in scenarios with heavy read traffic, this reduces initial read latency and the initial load on the database.

The effectiveness of cache priming depends on understanding the data access patterns and choosing the right data to preload into the cache. Over-priming or preloading unnecessary data can lead to wasted cache space and reduced overall cache performance.
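Cache priming at startup can be sketched as follows. The function `prime_cache`, the `hot_keys` list, and the dictionaries standing in for the cache and the database are all hypothetical; in practice the list of keys to preload would come from knowledge of the application's access patterns.

```python
from typing import Any, Dict, Iterable


def prime_cache(cache: Dict[str, Any], database: Dict[str, Any],
                hot_keys: Iterable[str]) -> int:
    """Preload the given keys from the database into the cache.

    Returns the number of entries actually loaded. Keys that do not exist
    in the database are skipped rather than cached as empty values.
    """
    loaded = 0
    for key in hot_keys:
        value = database.get(key)
        if value is not None:
            cache[key] = value
            loaded += 1
    return loaded


# Usage: preload only the keys we expect to be read right after startup.
database = {"home_page": "<html>...</html>", "top_products": [1, 2, 3], "archive": "old"}
cache: Dict[str, Any] = {}
loaded_count = prime_cache(cache, database, hot_keys=["home_page", "top_products", "unknown"])
```

Here `loaded_count` is 2: the two known hot keys are cached, the unknown key is skipped, and `"archive"` is left out of the cache because it was not listed as hot.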



©2023 Code Algorithms Pvt. Ltd.

All rights reserved.