API Rate Limiter System Design

What is a rate limiter?

A rate limiter restricts the number of events that a certain user or device can perform within a given time range. In other words, a rate limiter limits the number of requests a sender can send in a particular period. So it is implemented as a protective mechanism to prevent excessive use of services. Once the upper limit is reached, the rate limiter blocks further requests.

In this blog, we will discuss components and algorithms related to the design of a rate limiter.

Why rate limiter?

- Ensure that servers are not overburdened. So using a rate restriction per user ensures fair and reasonable use of resources like CPU, memory, network bandwidth, database connections, etc.

- Helps a system to maintain a consistent performance and remain responsive even during peak loads.

- Avoid resource starvation due to a Denial of Service (DoS) attack.

- In cloud-based systems or services where usage is billed based on resource consumption, rate limiting can help us control costs by preventing excessive usage.

Requirements

It’s critical to ask questions and clarify needs and restrictions with the interviewer during a system-design interview. Here are a few examples:

- Is the rate limiter configured to throttle API requests based on IP, user ID, or other parameters?

- What is the overall size of the system?

- Is the rate limiter on the client or the server-side API?

High-level design

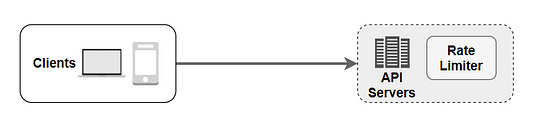

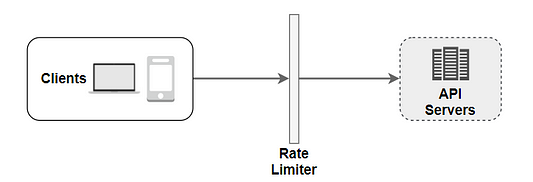

Let’s keep things simple and communicate using a basic client-server approach. Here is a critical question: where should the rate limiter be placed? One can implement a rate limiter on either the client or server side.

- Client-side implementation: Hostile actors can quickly falsify client requests. So the client is an unstable venue to impose rate restrictions. Furthermore, we may not have complete control over client implementation.

- Server-side implementation: On the server side, a rate restriction is shown in the diagram below.

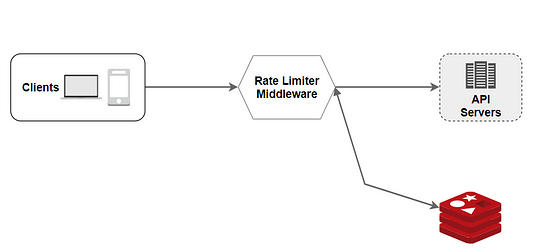

There is an alternative to the client and server-side implementations. We construct a rate limiter middleware, which throttles requests to your APIs, rather than establishing a rate limiter on the API servers.

The underlying concept of rate-limiting algorithms is simple. We need a counter at the highest level to track how many requests are submitted from the same user, IP address, etc. The request is rejected if the counter exceeds the limit.

Where should we keep the counters? Due to the slowness of disc access, using the database is not a smart option. So we can use an in-memory cache to make it quick and support a time-based expiration technique. For example, Redis is a popular choice for rate-limiting, where “INCR” and “EXPIRE” are two commands that can be used to access in-memory storage.

- INCR: Used to increase the stored counter by 1.

- EXPIRE: Used to set a timeout for the counter. The counter is automatically deleted when the timeout expires.

- A request is sent to rate limiting middleware by the client.

- The rate-limiting middleware retrieves the counter from the associated Redis bucket and determines whether or not the limit has been reached.

- The request is refused if the limit is reached.

- The request is forwarded to API servers if the limit is not reached.

- In the meantime, the system adds to the counter and saves it to Redis.

Rate limiting algorithms

Now, let’s explore several rate-limiting algorithms available for implementing API rate limiting. Suppose we need to create an API Rate Limiter that limits API calls based on the user, where one user can send a fixed number of requests in a fixed time range. Based on the use case, we can change the implementation according to our criteria.

Token Bucket Algorithm

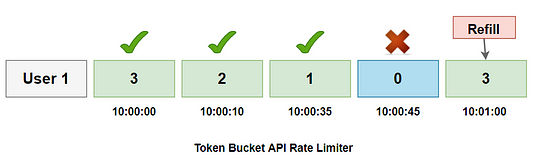

The idea of the token bucket algorithm works by maintaining a bucket with a fixed capacity of tokens. Here, tokens are added to the bucket at a constant rate, and each token represents permission to perform a certain action (such as making an API request).

When an API request comes in, the system checks if there are tokens available in the bucket. If there is at least one token available, the system removes one token from the bucket and processes the request. If there are no tokens available, the request is either rejected or delayed until tokens are available.

The token bucket gets refreshed or refilled after a specified time interval, which determines how quickly the bucket refills with tokens. For example, if a user is allocated 10 tokens per minute, they can make up to 10 requests per minute. Once the user uses up all their tokens within the time interval, they will be denied further requests until the bucket is refilled at the next interval.

- Suppose there is a limit of 3 requests per minute per user.

- The user makes the first request at 10:00:00, and the available token is 3. So the request is approved, and the available token count is reduced to 2.

- Now, the user’s 2nd request arrives at 10:00:10, and the available token is 2. So the request is permitted, and the token count is decremented to 1.

- The 3rd request arrives at 10:00:35, with a token count of 1 available, so the request is granted, and the token count is decremented to 0.

- The 4th request arrives at 10:00:45, and the available count is already 0. So the API access is denied.

- Now, at 10:01:00, the count will be refreshed with the available token count of 3.

Pros: Memory friendly and simple to use. Cons: A race condition counter may cause an issue in a distributed system due to concurrent requests from the same user.

You can explore the sample implementation for the token bucket using this link.

Leaky Bucket Algorithm

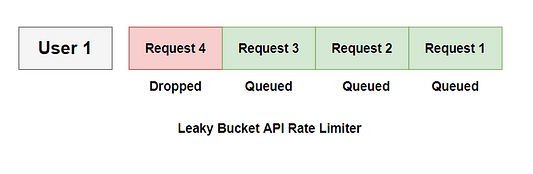

We can understand the leaky bucket algorithm idea using this analogy: Suppose there is a bucket with a hole in the bottom. Water (API requests) flows into the bucket (queue), but it can only drain out through the hole at a fixed rate. If water flows into the bucket faster than it drains out, eventually, it will overflow.

So, this algorithm uses a queue, which can be thought as a bucket that holds the requests. This queue is used to control that flow: requests are entered into the queue as they arrive (analogous to water being added to the bucket). These requests are then removed from the queue (first come, first served) at a fixed rate (comparable to water leaking from the bucket). If the queue is full (or leaked), any incoming request is rejected until there is space in the queue. Overall, this algorithm ensures that output is processed at a fixed rate.

If 3 requests/user is allowed and the queue size is 3, the API Rate Limiter will block the 4th Request.

Fixed Window Counter

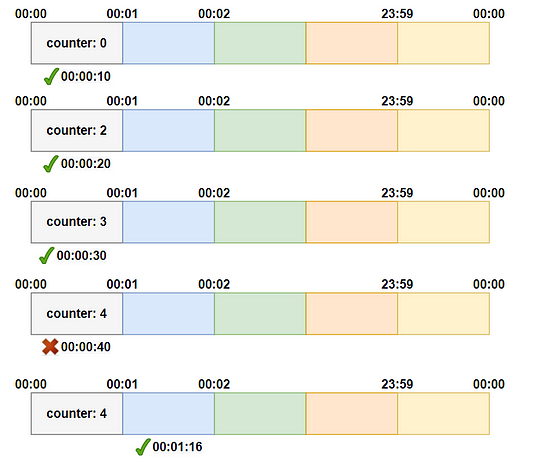

In this algorithm, we will keep a bucket per unit time window and a counter to track the number of requests made in that time window. What is the meaning of the time window here? Suppose you have a fixed time window of 1 minute, it means that the rate limit applies within each minute interval.

Here we also set a threshold for the number of requests allowed within each time window. When a request is made, the algorithm increments the counter. Once the value of the counter reaches the value of the threshold, further requests are either delayed or rejected, until the start of the next time window when the counter resets.

In the above diagram, a new request is seen at 00:00:10. So we get the count from the current bucket 00:00-00:01 (which is 0) and check if processing one more request exceeds the permissible limit of 3. If not (which is the case here), the bucket's count is incremented by 1 and the request is allowed to be processed. When a request arrives at 00:00:40 and the window count is 3, no request is authorised and an exception is triggered.

Space complexity: O(1) for storing the count for the current window. Time complexity: O(1) for get and simple atomic increment operation.

Pros:

- Easy to put into action.

- Smaller memory footprint, because all that’s done is store the counts.

- Can leverage Redis-like technology with built-in concurrency.

Sliding Window Logs

The algorithm maintains a log of request timestamps. This log can be implemented using a data structure like a queue or a circular buffer. One of the best ideas is to store these timestamps in a cache for faster retrieval and manipulation of the data.

- When a new request arrives, algorithm checks the timestamps in the log. Any timestamps older than the start of the current time window (i.e., timestamps that fall outside the defined time interval) are outdated and are removed from the log. This ensures that the log only contains timestamps relevant to the current time window.

- Once outdated timestamps have been cleared, the timestamp of the new request is added to the log. This effectively updates the log with the latest event.

- After updating the log with the new timestamp, the algorithm checks the size of the log against a predefined threshold (number of allowed requests within the defined time window). If the log size is equal to or less than this allowed count, the incoming request is accepted. Otherwise, if the log size exceeds the allowed count, the new request is dropped or rejected.

For example, suppose the rate limiter allows 2 requests per minute, and the first request arrives at 1:00:00. Now, the second request arriving at 1:00:20 is allowed because the log has a size of 2, and it falls within the time range of 1:00:00 - 1:01:00. Log = [1:00:00, 1:00:20].

- The third request arriving at 1:00:45 is rejected because the log size is 3, exceeding the allowed size of 2. The timestamp of this rejected request is still maintained in the log. Log = [1:00:00, 1:00:20, 1:00:45].

- Subsequently, the 4th request arrives at 1:01:25. This request is allowed because outdated requests (1:00:00 and 1:00:20) are removed from the log, reducing its size to 2. Log = [1:00:45, 1:01:25].

- However, the 5th and 6th requests, arriving at 1:01:35 and 1:01:40 respectively, are rejected because the log size exceeds 2. Nevertheless, they are maintained in the log since they are within the 1-minute window. Log = [1:00:45, 1:01:25, 1:01:35, 1:01:40].

- The 7th request arrives at 1:02:30. Outdated requests (1:00:45 and 1:01:25) are removed, yet this request is rejected due to the log size being 3, still exceeding the limit of 2. However, like the previously rejected requests, it is maintained in the log as it falls within the 1-minute window. Log = [1:01:35, 1:01:40, 1:02:30].

Space complexity: O(Max requests seen in a window time). In a time window, all of the requests’ timestamps are saved.

Time complexity: O(Max requests seen in a window time) — A portion of timestamps is deleted.

Pros: It works flawlessly

Cons:

- Memory usage is high. To manage numerous users or huge window times, all of the request timestamps must be kept for a window time, which necessitates a lot of memory.

- Removing earlier timestamps has high time complexity.

Sliding Window Counters

The low processing cost of the fixed window algorithm is combined with the increased boundary constraints of the sliding log in this hybrid approach. To begin, we track a counter for each fixed window, similar to the fixed window technique. To reduce surges of traffic, we account for a weighted value of the previous window’s request rate based on the current date.

We keep track of each user’s request counts by using various fixed time windows, such as 1/60th the size of our rate limit’s time frame. If we have a minute rate limit, for example, we can record a count for each second and calculate the sum of all counters in the previous minute when we get a new request to determine the throttling limit.

- Remove any counters that are more than one minute old.

- If a request is received in the current bucket, the counter will be increased.

- If the current bucket has reached its throat limit, the request will be blocked.

Space Complexity: O(number of buckets).

Time Complexity: O(1).

Pros:

- Because only the counts are saved, there is no big memory footprint.

Cons:

- Only works for non-strict look-back window times, such as smaller unit times.

References

- Rate-limiting strategies and techniques — Google Cloud

- How we built rate-limiting capable of scaling to millions of domains

Thanks to Navtosh Kumar for his contribution to creating the first draft of content. Please write in the message below if you find anything incorrect, or if you want to share more insight. Enjoy learning, Enjoy algorithms!

Share Your Insights

More from EnjoyAlgorithms

Self-paced Courses and Blogs

Coding Interview

OOP Concepts

Our Newsletter

Subscribe to get well designed content on data structure and algorithms, machine learning, system design, object orientd programming and math.

©2023 Code Algorithms Pvt. Ltd.

All rights reserved.