Fundamentals of MapReduce

What is MapReduce?

MapReduce is a batch processing programming paradigm that enables massive scalability across a large number of servers in a Hadoop cluster. It was developed by Google in 2004 and is known for its ability to handle large amounts of data on commodity hardware.

- MapReduce processes large amounts of data in a parallel and distributed manner by breaking it down into smaller pieces that can be processed concurrently. The results of these individual processing tasks are then combined to produce the final result.

- So MapReduce consists of two main phases: the map phase and the reduce phase. In the map phase, the input data is split into smaller chunks and processed in parallel by different nodes in a cluster. The output of the map phase is a set of intermediate key/value pairs. In the reduce phase, these intermediate key/value pairs are processed and combined to produce the final output.

MapReduce Dataflow

By dividing the data processing workload into smaller pieces and distributing it across multiple nodes, MapReduce is able to handle large amounts of data efficiently and quickly. This approach is particularly useful for analyzing and processing data sets that are too large to be handled by a single machine.

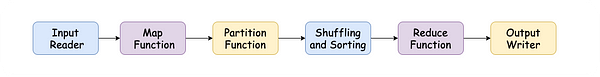

MapReduce consists of a series of steps that are performed to transform and analyze data. These steps include:

- Input reader: This component reads the incoming data, splits it into data blocks, and associates each data block with a Map function. It reads files stored in Hadoop Distributed File System (HDFS) and generates corresponding key-value pairs.

- Map function: This function takes a series of key/value pairs, processes each pair, and generates zero or more output key/value pairs. The input and output types may be different from each other.

- Partition function: This function assigns the output of each Map function to the appropriate reducer for sharding purposes and returns the reducer's index.

- Shuffling and sorting: The data produced by the Map function is shuffled between and within nodes, and then sorted using a comparison function.

- Reduce function: For each unique key stored in sorted order, the framework calls the Reduce function. The values associated with the keys can be iterated by the Reduce function and used to generate the corresponding output.

- Output writer: This component writes the output of the Reduce function to storage.

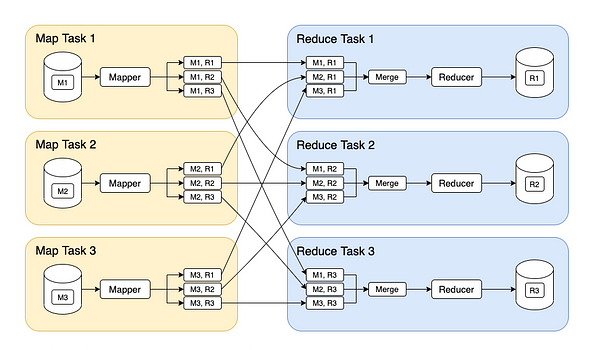

The above figure shows the data flow in a Hadoop MapReduce job. In a Hadoop MapReduce job, the input data is partitioned into separate files stored in a directory in HDFS. Each of these files is processed by a separate map task, labeled M1, M2, and M3 in the figure. The MapReduce scheduler attempts to run each mapper on a machine that has a replica of the input file, which can be hundreds of megabytes in size. The mapper reads the input file, one record at a time, and produces key-value pairs as output.

The number of map tasks is determined by the number of input file blocks, while the number of reduce tasks is decided by the job author. The MapReduce framework uses a hash of the key to ensure that all key-value pairs with the same key are sent to the same reducer. The key-value pairs must be sorted, and this is done in stages where each map task partitions its output based on the key's hash and writes it to a sorted file on the mapper's local disk.

When a mapper has completed its job, the MapReduce scheduler informs the reducers that they can begin fetching the output files from the mapper. The reducers connect to the mappers, retrieve the files, and merge them while preserving the sort order. The reducer is called with a key and an iterator that progressively reads all records with the same key. The reducer processes these records and generates output records that are written to a file on the distributed filesystem.

How does MapReduce Work?

MapReduce operates by dividing a job into two tasks, which are managed by two types of entities: the Jobtracker and multiple Task Trackers. The Jobtracker acts as a master and resides on the Namenode, while the Task Trackers act as slaves and reside on the Datanode.

A job is divided into multiple tasks, which are then run on multiple data nodes in a cluster. The Task Tracker is responsible for the execution of individual tasks and sends progress reports to the Jobtracker. It also periodically sends a heartbeat signal to the Jobtracker to update it on the current state of the system. If a task fails, the Jobtracker can reschedule it on a different Task Tracker.

Example

MapReduce can be used to count the number of words in a text file. It does this by reading the text file and counting the frequency of each word. The input and output for this process are both text files. Each mapper takes a line of the input file as input and breaks it into words. It produces a key/value pair for each word, with the word itself being the key. The reducer receives a list of all the key-value pairs for each word and sums the counts for each word. It then outputs a single key-value pair for each word, with the word itself as the key and the sum of its counts as the value.

The code for the mapper is stored in mapper.py

mapper.py

import sys

for line in sys.stdin:

line = line.strip() # remove whitespace(leading/trailing)

words = line.split() # split the line into words

for word in words:

print ('%s\t%s' % (word, 1))The code for the reducer is stored in reducer.py

reducer.py

import sys

cur_word = None

cur_count = 0

word = None

for line in sys.stdin:

line = line.strip() # remove whitespace(leading/trailing)

word, count = line.split('\t', 1) # parse the mapper.py

if cur_word == word:

cur_count += count

else:

if cur_word:

print ('%s\t%s' % (cur_word, cur_count))

cur_count = count

cur_word = word

if cur_word == word:

print ('%s\t%s' % (cur_word, cur_count))The above program can be run using cat word_count.txt | python mapper.py | sort -k1,1 | python reducer.py

Use cases of MapReduce

MapReduce is a powerful tool that is used in a variety of applications, including distributed pattern-based searching, distributed sorting, and web link-graph reversal. It is also used in many different computing environments, such as multi-core systems, desktop grids, multi-cluster systems, volunteer computing environments, dynamic cloud environments, mobile environments, and high-performance computing environments.

One notable use of MapReduce is in regenerating Google's index of the World Wide Web. It replaced the old programs that were used to update the index and run various analyses.

Conclusion

MapReduce is a powerful tool for processing large amounts of data in the big data paradigm. It allows businesses to efficiently process petabytes of data stored in HDFS, providing more accessible access to multiple data sources and data types. It also enables fast processing of massive amounts of data through parallel processing and minimal data movement.

In addition, MapReduce programming offers several other benefits. It is easy to use and allows developers to write code in a variety of languages, including Java, C++, and Python. This makes it a popular choice for many businesses and organizations.

Enjoy learning, Enjoy algorithms, Enjoy system design!

Share Your Insights

More from EnjoyAlgorithms

Self-paced Courses and Blogs

Coding Interview

OOP Concepts

Our Newsletter

Subscribe to get well designed content on data structure and algorithms, machine learning, system design, object orientd programming and math.

©2023 Code Algorithms Pvt. Ltd.

All rights reserved.