Process Management in Operating System (OS)

Process management is one of the critical concepts in operating systems. It covers the activities involved in creating, scheduling, and controlling processes. Before looking at how process management works, let's define a process. In simple words, a program in execution is called a process. It is an instance of a running program, i.e., an entity that can be assigned to and executed on a processor. A process has two essential elements: the program code and the data associated with that code.

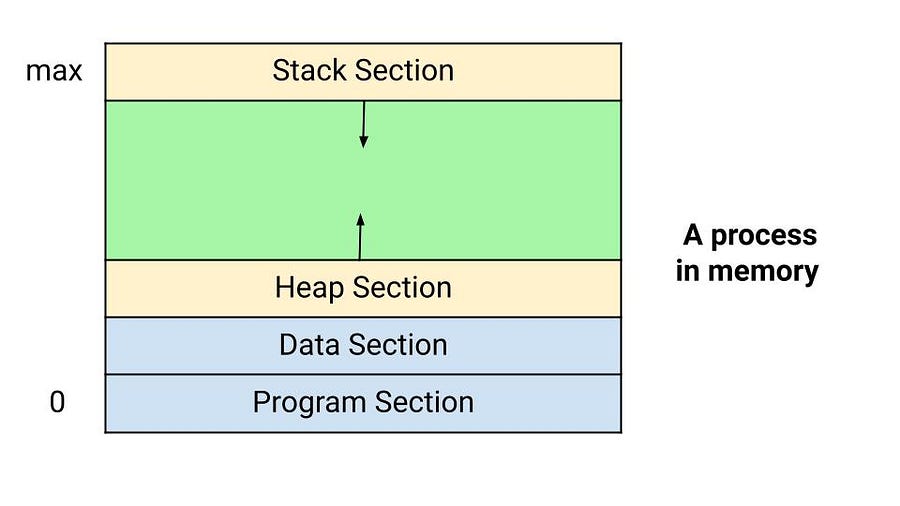

Process memory is divided into four sections:

- The text section (program memory) stores the compiled program code.

- The data section stores global and static variables.

- The heap section is used for dynamic memory allocation. It holds memory allocated at run time (e.g., via new or malloc) until that memory is released (e.g., via delete or free).

- The stack section stores local variables, along with function parameters and return addresses. Stack space for a local variable is created when it is declared and freed when it goes out of scope.

Note: The stack and the heap start at opposite ends of the process's free space and grow towards each other. If they meet, either a stack overflow error occurs or a new memory allocation fails because there is not enough memory left for the heap.

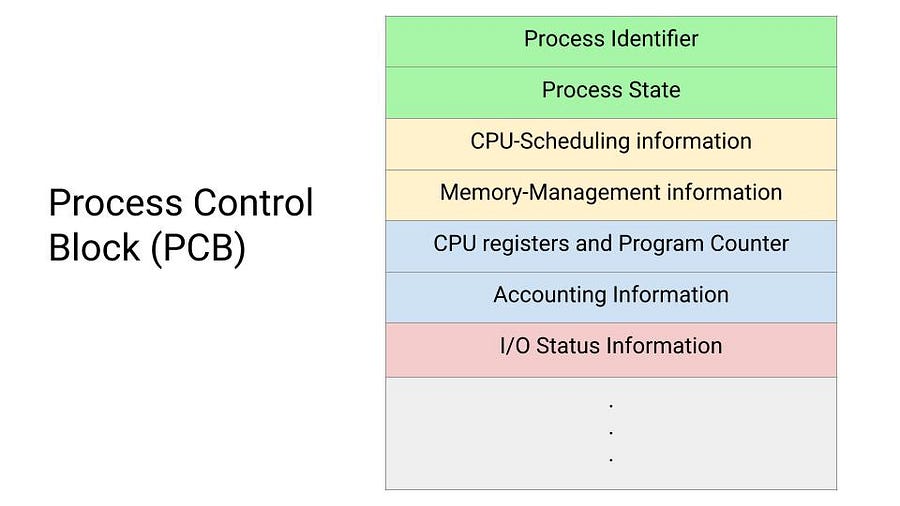

Process Control Block (PCB)

A program executing as a process is uniquely described by various parameters. These parameters are stored in a Process Control Block (PCB), a data structure that holds the following information:

- Process ID: Unique ID associated with every process.

- Process state: A process can be in a start, ready, running, wait or terminated state.

- CPU scheduling information: Related to priority level relative to the other processes and pointers to scheduling queues.

- CPU registers and program counter: Need to be saved and restored when swapping processes in and out of the CPU.

- I/O status information: Includes I/O requests, I/O devices (e.g., disk drives) assigned to the process, and a list of files used by the process.

- Memory management information: Page tables or segment tables.

- Accounting information: User and kernel CPU time consumed, account numbers, limits, etc.
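To make the PCB concrete, here is a minimal Python sketch of one as a dataclass. The field names and the `State` enum are hypothetical and only mirror the list above; a real kernel stores far more (Linux, for example, keeps this information in `struct task_struct`).

```python
from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    """The five process states used in this article."""
    START = "start"
    READY = "ready"
    RUNNING = "running"
    WAIT = "wait"
    TERMINATED = "terminated"


@dataclass
class PCB:
    """Hypothetical, simplified Process Control Block (field names are illustrative)."""
    pid: int                                         # unique process ID
    state: State = State.START                       # current process state
    priority: int = 0                                # CPU scheduling information
    program_counter: int = 0                         # address of the next instruction
    registers: dict = field(default_factory=dict)    # saved CPU registers
    open_files: list = field(default_factory=list)   # I/O status information
    page_table: dict = field(default_factory=dict)   # memory-management information
    cpu_time_used: float = 0.0                       # accounting information


pcb = PCB(pid=42, priority=3)
print(pcb.pid, pcb.state.name)  # 42 START
```

Using `default_factory` gives every PCB its own register, file, and page-table containers instead of sharing one mutable default.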

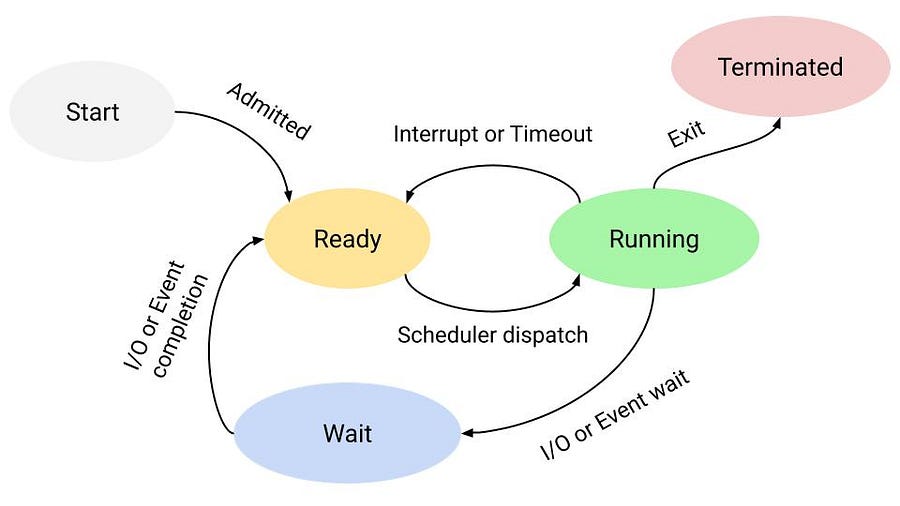

States of a Process in Operating System

We have already seen that one of the fields in the PCB is the process state. A process in the operating system can be in any of five states: start, ready, running, wait, and terminated.

Let's understand these states and how a process moves from one state to another:

- Null to Start: When a new process is created, it transitions from the Null state to the Start state.

- Start to Ready: The OS moves a process from the Start state to the Ready state when the OS is prepared to take on an additional process. At this stage, the process has all the resources it needs to run and is waiting to receive CPU time for its execution.

- Ready to Running: The OS chooses one of the processes in the Ready state using the process scheduler, and the dispatcher gives it the CPU. The CPU then starts executing the selected process, which is now in the Running state.

- Running to Terminated: The OS terminates the running process when it has completed its execution.

- Running to Ready: The scheduler sets a time limit (time slice) for executing any running process. If the current process uses up this time slice before finishing, it is moved back to the Ready state. The most common reason for this transition is that the process has reached its maximum permissible period of uninterrupted execution. Almost all multiprogramming operating systems enforce this time constraint.

- Running to Wait: If a process needs something for which it must wait, it is placed in the Wait state. The process cannot run because it is waiting for some resource to become available or for some event to occur. For example, the process may be waiting for keyboard input, a disk access request, inter-process messages, a timer to go off, or a child process to finish.

- Wait to Ready: A process in the Wait state is moved to the Ready state when the event it has been waiting for occurs.

Note: Some operating systems may have other states besides the ones listed here.
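The transitions above form a small state machine. The sketch below encodes them as a set of allowed (from, to) pairs; the `move` helper is hypothetical and simply rejects any transition the list does not describe (for example, Wait directly to Running).

```python
# Allowed transitions between the states described above.
# "NULL" stands for "the process does not exist yet".
TRANSITIONS = {
    ("NULL", "START"),          # process is created
    ("START", "READY"),         # OS admits the process
    ("READY", "RUNNING"),       # scheduler dispatches it
    ("RUNNING", "TERMINATED"),  # process finishes
    ("RUNNING", "READY"),       # time slice expires (preemption)
    ("RUNNING", "WAIT"),        # process waits for I/O or an event
    ("WAIT", "READY"),          # awaited event occurs
}


def move(current: str, new: str) -> str:
    """Return the new state, or raise ValueError if the transition is not allowed."""
    if (current, new) not in TRANSITIONS:
        raise ValueError(f"illegal transition {current} -> {new}")
    return new


# Walk one process through a typical life cycle.
state = "NULL"
for nxt in ["START", "READY", "RUNNING", "WAIT", "READY", "RUNNING", "TERMINATED"]:
    state = move(state, nxt)
print(state)  # TERMINATED
```

Note that a process leaving the Wait state goes back to Ready, not straight to Running: it must be selected by the scheduler again.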

Execution of a process in Operating System

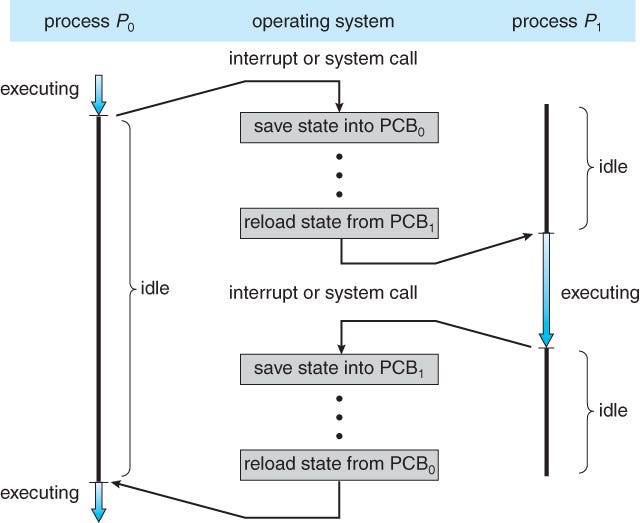

The operating system uses a process control block (PCB) to track the execution status of each process.

- To keep track of all processes, it assigns a process ID (PID) to each process to identify it uniquely. As discussed above, it also stores several other critical details in the PCB.

- The OS updates information in the PCB as the process makes a transition from one state to another.

- To access PCBs quickly, the operating system keeps a pointer to each PCB in a process table.
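The bookkeeping above can be sketched as a dictionary that maps each PID to its PCB. The `ProcessTable` class and its `create`, `set_state`, and `get` methods are hypothetical names for illustration, and the PCB here is trimmed to the two fields this sketch needs.

```python
from dataclasses import dataclass


@dataclass
class PCB:
    """Minimal stand-in for a PCB: just the fields this sketch needs."""
    pid: int
    state: str = "START"


class ProcessTable:
    """Hypothetical process table: maps each PID to its PCB for fast lookup."""

    def __init__(self) -> None:
        self._next_pid = 1
        self._table: dict[int, PCB] = {}

    def create(self) -> PCB:
        """Allocate a unique PID, build a PCB, and register it in the table."""
        pcb = PCB(pid=self._next_pid)
        self._table[pcb.pid] = pcb
        self._next_pid += 1
        return pcb

    def set_state(self, pid: int, state: str) -> None:
        """Update the PCB when the process changes state."""
        self._table[pid].state = state

    def get(self, pid: int) -> PCB:
        """Look up a PCB by PID in constant time on average."""
        return self._table[pid]


table = ProcessTable()
p = table.create()
table.set_state(p.pid, "READY")
print(table.get(p.pid))  # PCB(pid=1, state='READY')
```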

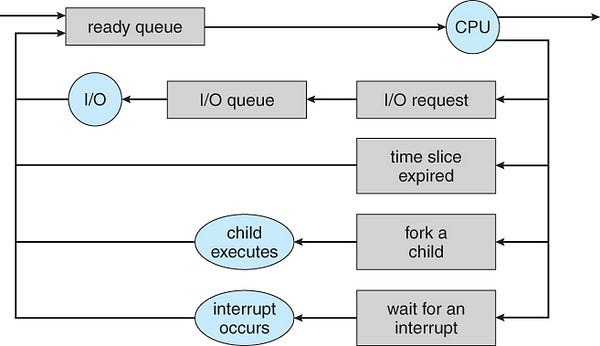

Process Schedulers in Operating System

Process scheduling is the activity of selecting which process runs on the CPU and removing the running process from it, based on a particular strategy. One of its key objectives is to keep the CPU busy while delivering "acceptable" response times for all programs. Note: Multiprogramming operating systems allow more than one process to be loaded into memory at a time, and the loaded processes share the CPU using time multiplexing.

The operating system has three types of process schedulers:

Long-Term or Job Scheduler: This scheduler brings new processes into the Ready state. It decides which processes are admitted into the system: it selects processes from the job queue and loads them into memory for execution. The primary objective of the job scheduler is to provide a balanced mix of jobs (I/O-bound and CPU-bound) and to control the degree of multiprogramming.

Short-Term or CPU Scheduler: It selects one process from the Ready state and schedules it to the Running state. It is often loosely called the dispatcher, although strictly speaking the dispatcher is the module that gives control of the CPU to the process the short-term scheduler has selected.

- The CPU scheduler is responsible for ensuring that processes with long burst times do not starve other processes.

- It runs very frequently, so it must be fast at moving processes between the Running and Ready states.

Medium-Term Scheduler: It is in charge of swapping processes out of and back into memory, for example while a process is performing an I/O operation. If a running process makes an I/O request, it may be suspended. The suspended process is moved to secondary storage to free memory for other processes and is brought back later. This is known as swapping.

CPU Scheduling in Operating System

Now let's understand the concept of CPU scheduling and why we need it.

- A typical process uses both CPU time and I/O time. In an old single-tasking operating system like MS-DOS, the time spent waiting for I/O is wasted because the CPU sits idle. In modern multiprogramming operating systems, one process can use the CPU while another waits for I/O.

- CPU scheduling determines which process gets the CPU while another process is paused (for example, waiting for I/O). The goal is that whenever the CPU becomes idle, the OS selects one of the processes in the ready queue to run.

Types of CPU Scheduling

There are two major types of CPU Scheduling:

- Preemptive Scheduling: In this scheduling, the operating system can interrupt a running process, for example when its time slice expires or when a higher-priority process becomes ready. The interrupted process is put back in the ready queue and later resumes from where it stopped. Preemptive scheduling is used in most modern general-purpose operating systems and in real-time operating systems, where responsiveness is critical.

- Non-Preemptive Scheduling: In this scheduling, a running process cannot be interrupted; it keeps the CPU until it completes its execution or voluntarily releases the CPU (e.g., by blocking for I/O). Non-preemptive scheduling is simpler to implement and usually has less overhead than preemptive scheduling, but it is a poor fit for systems where responsiveness or prioritization of tasks is crucial.

Differences between Preemptive and Non-Preemptive Scheduling

- In preemptive scheduling, the CPU is allocated to a process for a limited time. It may then be given to another process depending on the state of the current process or the priority of a newly arriving process. In non-preemptive scheduling, the CPU stays allocated to the same process until it completes or blocks.

- In preemptive scheduling, if high-priority processes keep arriving, a low-priority process can be postponed indefinitely (starvation). In non-preemptive scheduling, once a long process is running, it keeps running and does not let even a short process execute until it finishes.

- In preemptive scheduling, the OS must save the context (registers, program counter, etc.) of the interrupted process so that it can later continue from exactly that point. Non-preemptive scheduling switches processes only when the running process terminates or blocks, so context switches happen less often.

Different Scheduling Algorithms in Operating System

Now, we will look at the various scheduling algorithms involved in process management one by one.

First Come First Serve (FCFS) Scheduling Algorithm

It is the most basic CPU scheduling algorithm, and it uses a FIFO queue to manage scheduling. The idea is simple: the process that requests the CPU first is allocated the CPU first.

When a process enters the ready queue, its PCB is linked to the tail of the queue. Whenever the CPU becomes available, it is assigned to the process at the head of the queue. Some important points:

- It is non-preemptive: once a process gets the CPU, it keeps it until it terminates or blocks for I/O.

- Jobs are always executed on a first-come first-serve basis.

- Easy to use and implement.

- Performance is often poor, and the average waiting time can be quite high.

A simple example: Suppose we have five processes p1, p2, p3, p4, and p5, and the ready queue receives them at times t1, t2, t3, t4, and t5 such that t1 < t2 < t3 < t4 < t5. Since p1 arrived first in the ready queue, it will be executed first, followed by p2, p3, p4, and p5, in that order.
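The FCFS idea can be sketched in a few lines of Python. The arrival and burst times below are made-up numbers for illustration; the function serves processes strictly in arrival order and computes each one's start, finish, and waiting time (start minus arrival).

```python
def fcfs(processes):
    """Non-preemptive FCFS.

    processes: list of (name, arrival_time, burst_time).
    Returns {name: (start, finish, waiting)} in execution order.
    """
    # Serve processes strictly in order of arrival (ties keep input order,
    # because sorted() is stable).
    order = sorted(processes, key=lambda p: p[1])
    time = 0
    result = {}
    for name, arrival, burst in order:
        start = max(time, arrival)   # CPU may sit idle until the process arrives
        finish = start + burst       # runs to completion, no preemption
        result[name] = (start, finish, start - arrival)
        time = finish
    return result


# Hypothetical example data: (name, arrival, burst)
procs = [("p1", 0, 5), ("p2", 1, 3), ("p3", 2, 8), ("p4", 3, 2), ("p5", 4, 4)]
res = fcfs(procs)
for name, (start, finish, wait) in res.items():
    print(name, start, finish, wait)
avg_wait = sum(w for _, _, w in res.values()) / len(res)
print("average waiting time:", avg_wait)
```

Here the short process p4 waits 13 time units because it arrived after the long process p3, which already hints at the problem described next.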

Convoy Effect

In the First Come First Serve algorithm, if a process with a long CPU burst arrives before short processes, the short processes get stuck behind it. This is called the Convoy Effect.

Shortest Job First (SJF) Scheduling Algorithm
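A quick numeric illustration of the convoy effect, assuming (for simplicity) that all processes arrive at time 0 and using hypothetical burst times: the same four jobs give a very different average waiting time depending on whether the long job is queued first or last.

```python
def avg_wait_fcfs(bursts):
    """Average waiting time when all processes arrive at t=0 and run in the given order."""
    time, total_wait = 0, 0
    for burst in bursts:
        total_wait += time   # each process waits for all earlier ones to finish
        time += burst
    return total_wait / len(bursts)


# Hypothetical bursts: one long CPU-bound job and three short jobs.
long_first = [24, 3, 3, 3]
long_last = [3, 3, 3, 24]
print(avg_wait_fcfs(long_first))  # 20.25
print(avg_wait_fcfs(long_last))   # 4.5
```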

In its basic form, SJF is a non-preemptive scheduling algorithm. This policy gives priority to the waiting process with the shortest execution (CPU burst) time. For a given set of processes that are all available at once, SJF is provably optimal: it gives the minimum average waiting time. It first creates a pool of processes and then chooses the process with the shortest burst time. After that process completes, it again selects the process with the shortest burst time from the pool. Some important points:

- Short processes are handled very quickly.

- In the preemptive variant, when a new process arrives, the algorithm only has to compare it with the currently running process, ignoring the other waiting processes (none of them is shorter than the running process's remaining time).

- Long processes can be postponed indefinitely if short processes are added regularly.

SJF is further categorized into two types:

- Preemptive SJF (also called Shortest Remaining Time First): If a process arrives whose CPU burst is shorter than the remaining time of the running process, the running process is preempted and moved back to the ready queue, and the new process executes.

- Non-preemptive SJF: The running process is not disturbed by the arrival of a process with a shorter CPU burst; the new process waits until the CPU becomes free.

A simple example: Suppose we have five processes p1, p2, p3, p4, and p5, and the ready queue receives them at times t1, t2, t3, t4, and t5 such that t1 < t2 < t3 < t4 < t5. Now, think of the ready queue as a priority queue that orders incoming processes by CPU burst time. Therefore, the process with the least CPU burst time is executed first, and so on.
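The preemptive variant can be sketched as a simulation that advances one time unit at a time and always runs the process with the shortest remaining time. This assumes integer burst and arrival times and breaks ties by arrival time; both are simplifying choices for the sketch, not part of the algorithm's definition.

```python
def srtf(processes):
    """Preemptive SJF (Shortest Remaining Time First), simulated one time unit at a time.

    processes: list of (name, arrival_time, burst_time) with integer times.
    Returns {name: completion_time}.
    """
    remaining = {name: burst for name, _, burst in processes}
    arrival = {name: arr for name, arr, _ in processes}
    done = {}
    time = 0
    while len(done) < len(processes):
        # Processes that have arrived and still need CPU time.
        ready = [n for n in remaining if arrival[n] <= time and n not in done]
        if not ready:
            time += 1          # CPU idle: nobody has arrived yet
            continue
        # Pick the shortest remaining time; break ties by arrival time.
        current = min(ready, key=lambda n: (remaining[n], arrival[n]))
        remaining[current] -= 1
        time += 1
        if remaining[current] == 0:
            done[current] = time
    return done


# Hypothetical example data: (name, arrival, burst)
procs = [("p1", 0, 8), ("p2", 1, 4), ("p3", 2, 9), ("p4", 3, 5)]
print(srtf(procs))  # {'p2': 5, 'p4': 10, 'p1': 17, 'p3': 26}
```

p1 starts first, but it is preempted at time 1 when p2 arrives with a shorter burst (4 < 7 remaining).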
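The "ready queue as a priority queue" idea maps naturally onto Python's `heapq` min-heap. This sketch of non-preemptive SJF keys the heap on (burst, arrival) so that ties fall back to first come first served; the process data is hypothetical.

```python
import heapq


def sjf_nonpreemptive(processes):
    """Non-preemptive SJF.

    processes: list of (name, arrival_time, burst_time).
    Returns the execution order as a list of (name, start, finish).
    """
    pending = sorted(processes, key=lambda p: p[1])   # by arrival time
    ready = []                                        # min-heap keyed on burst time
    schedule, time, i = [], 0, 0
    while i < len(pending) or ready:
        # Move every process that has arrived by `time` into the ready heap.
        while i < len(pending) and pending[i][1] <= time:
            name, arrival, burst = pending[i]
            heapq.heappush(ready, (burst, arrival, name))
            i += 1
        if not ready:                 # CPU idle until the next arrival
            time = pending[i][1]
            continue
        burst, arrival, name = heapq.heappop(ready)
        schedule.append((name, time, time + burst))
        time += burst                 # runs to completion: no preemption
    return schedule


# Hypothetical example data: (name, arrival, burst)
procs = [("p1", 0, 7), ("p2", 2, 4), ("p3", 4, 1), ("p4", 5, 4)]
for entry in sjf_nonpreemptive(procs):
    print(entry)
```

p1 runs to completion because it is alone at time 0; afterwards the shortest waiting job, p3, goes next, and p2 beats p4 on the tie because it arrived earlier.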

Longest Job First Scheduling Algorithm

Longest Job First (LJF) is a non-preemptive scheduling algorithm. Whenever the CPU becomes free, it looks at the burst times of all processes that have arrived so far and assigns the processor to the process with the longest burst time. Once a process begins to run, it cannot be halted in the middle of its execution.

It sorts processes in ascending order of their arrival time. Then, out of all processes that have arrived up to that point, it chooses the one with the longest burst time and runs it for its entire burst. While this process executes, LJF keeps track of any new processes that arrive, so they can be considered at the next scheduling decision.

Some important points:

- The average waiting time and turnaround time for a given set of processes tend to be high.

- A process with a short burst time may never get executed while the system continues to run large processes.

- Short jobs wait behind long ones, which lowers throughput and overall system efficiency.

A simple example: Similar to the above example, you can think of the ready queue as prioritizing processes with larger CPU burst times, i.e., out of those five processes, the one with the largest CPU burst time is executed first.
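LJF is the mirror image of non-preemptive SJF. Since `heapq` only provides a min-heap, this sketch stores the negated burst time so that the longest job pops first. The process data is hypothetical and chosen to show a short job being pushed back.

```python
import heapq


def ljf(processes):
    """Non-preemptive Longest Job First.

    processes: list of (name, arrival_time, burst_time).
    Returns the execution order as a list of (name, start, finish).
    """
    pending = sorted(processes, key=lambda p: p[1])   # ascending arrival time
    ready = []          # heapq is a min-heap, so store -burst to pop the LONGEST job
    schedule, time, i = [], 0, 0
    while i < len(pending) or ready:
        # Add every process that has arrived by `time` to the pool.
        while i < len(pending) and pending[i][1] <= time:
            name, arrival, burst = pending[i]
            heapq.heappush(ready, (-burst, arrival, name))
            i += 1
        if not ready:                 # CPU idle until the next arrival
            time = pending[i][1]
            continue
        neg_burst, _, name = heapq.heappop(ready)
        schedule.append((name, time, time - neg_burst))
        time -= neg_burst             # the chosen job runs to completion
    return schedule


# Hypothetical example data: (name, arrival, burst)
procs = [("p1", 0, 2), ("p2", 1, 4), ("p3", 2, 6), ("p4", 3, 8)]
print(ljf(procs))
```

p2 arrives at time 1 but does not start until time 16, because longer jobs keep being chosen ahead of it.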

Round Robin scheduling Algorithm

In Round Robin scheduling, each process is cyclically assigned a fixed time slot (a time quantum). It looks similar to FCFS scheduling, except that it adds preemption, which allows the system to switch between processes. The round robin strategy was designed with time-sharing systems in mind.

Each process runs for at most one time quantum at a time; once that period has passed, the process is preempted and the next process in the ready queue takes its place. As a result, all processes get an equal share of CPU time. This algorithm generally gives good average response time, which makes it well suited to interactive systems.

Some important points:

- Round robin is a preemptive algorithm: essentially FCFS with preemption added.

- The CPU is switched to the next process after a fixed time interval (the time quantum).

- It is widely used in traditional time-sharing operating systems.

A simple example: Suppose there are five processes p1, p2, p3, p4, and p5, with total execution times of t1, t2, t3, t4, and t5, and a time quantum t that ensures equal CPU time sharing among them. The first process p1 arrives and runs for t time units. If it has not finished (t1 > t), p1 is preempted and moved to the tail of the ready queue with (t1 - t) time remaining, and the CPU is released for the next process, p2. If a process instead issues an I/O request before its quantum expires, it moves to the wait state and rejoins the ready queue once the I/O operation completes. The same goes for all the processes.
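A minimal Round Robin sketch using `collections.deque` as the ready queue. To keep it short, it assumes all processes arrive at time 0 and never block for I/O; the burst times and the quantum are hypothetical.

```python
from collections import deque


def round_robin(processes, quantum):
    """Round Robin for processes that all arrive at time 0 (no I/O modelled).

    processes: list of (name, burst_time).
    Returns {name: completion_time}.
    """
    queue = deque(processes)           # ready queue in FCFS order
    time, done = 0, {}
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)  # run for one quantum or until finished
        time += run
        if remaining > run:
            queue.append((name, remaining - run))   # preempted: back to the tail
        else:
            done[name] = time
    return done


print(round_robin([("p1", 24), ("p2", 3), ("p3", 3)], quantum=4))
# {'p2': 7, 'p3': 10, 'p1': 30}
```

Compare this with FCFS on the same jobs: the short processes p2 and p3 finish at times 7 and 10 instead of waiting for p1's full 24-unit burst. The price is extra context switches, so the quantum should not be too small.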

Priority Based Scheduling

Priority scheduling is one of the most commonly used scheduling methods. Here a priority is assigned to each process, and the highest-priority process is executed first. Processes with the same priority are executed on a first-come first-serve basis. Note: Priority can be determined by memory limitations, time constraints, or other resource constraints.

Some important points:

- Low-priority processes can suffer indefinite blocking (starvation).

- We can use aging to prevent starvation: the priority of a low-priority process is gradually increased the longer it waits.

It is also categorized into two types:

- Preemptive priority scheduling: When a higher-priority process arrives, the running process is preempted and moved back to the ready queue, and the new process executes.

- Non-preemptive priority scheduling: The running process is not disturbed by the arrival of a higher-priority process; the new process waits in the ready queue.

A simple example: Similar to the FCFS example, processes are inserted into the ready queue, but they are now ordered by priority, which can be based on CPU burst time, memory constraints, etc.
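Here is a sketch of non-preemptive priority scheduling with optional aging. Two conventions are assumptions of this sketch: a lower number means higher priority, and aging is modelled as a linear bonus (`aging_rate` per time unit waited) subtracted from the priority number at each scheduling decision. Ties fall back to arrival order, i.e., first come first served. The process data is hypothetical.

```python
def priority_schedule(processes, aging_rate=0.0):
    """Non-preemptive priority scheduling with optional linear aging.

    processes: list of (name, arrival_time, burst_time, priority);
    a LOWER number means HIGHER priority (an assumption; conventions vary).
    aging_rate: how much a waiting process's priority number drops per time unit waited.
    Returns the execution order as a list of names.
    """
    pending = list(processes)
    time, order = 0, []
    while pending:
        arrived = [p for p in pending if p[1] <= time]
        if not arrived:                        # CPU idle until the next arrival
            time = min(p[1] for p in pending)
            continue
        # Effective priority = base priority - aging bonus; ties broken by arrival time.
        chosen = min(
            arrived,
            key=lambda p: (p[3] - aging_rate * (time - p[1]), p[1]),
        )
        pending.remove(chosen)
        order.append(chosen[0])
        time += chosen[2]                      # runs to completion
    return order


# Hypothetical example data: (name, arrival, burst, priority)
procs = [("a", 0, 5, 1), ("low", 0, 2, 9), ("b", 1, 5, 1), ("c", 6, 5, 1)]
print(priority_schedule(procs))                # ['a', 'b', 'c', 'low']
print(priority_schedule(procs, aging_rate=2))  # ['a', 'b', 'low', 'c']
```

Without aging, the low-priority process keeps losing to newly arriving high-priority work. With aging, its 10 time units of waiting at time 10 outweigh the priority gap, so it runs before c.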

Reference: Operating System Concepts by Abraham Silberschatz, Peter Baer Galvin, and Greg Gagne

Thanks to Vishal Das for his contribution to creating the first version of the content. Enjoy learning, Enjoy algorithms!


©2023 Code Algorithms Pvt. Ltd.

All rights reserved.