Top Misconceptions in Machine Learning

Data Science and Machine Learning are among the hottest topics in technology today. Almost every company, from tech giants like Google, Microsoft, and Apple to younger companies like Uber and Swiggy, has started building infrastructure to support Machine Learning.

Machine Learning has shown tremendous potential, but it has also generated hype and rumors. We sometimes overestimate what a technology can do, and Machine Learning is no exception. In this article, we discuss the most common misconceptions, ones so popular that nearly every one of us has come across them at least once in our Machine Learning journey.

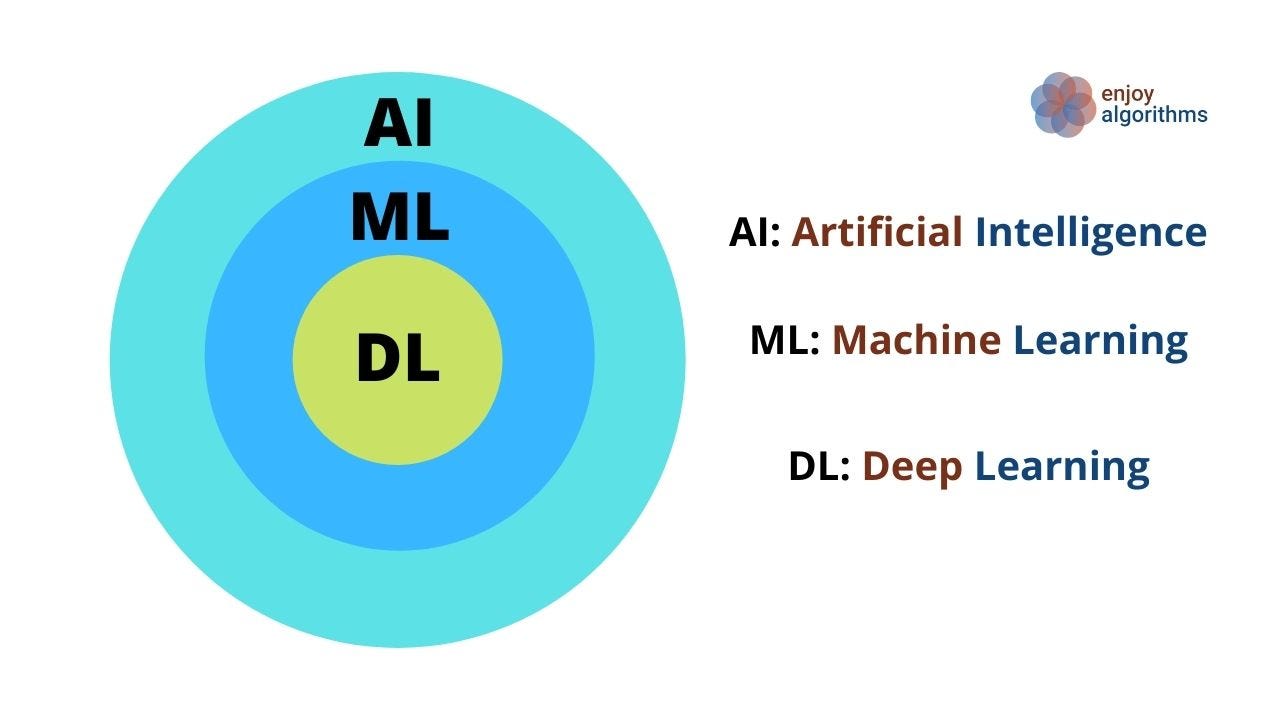

Machine Learning, Deep Learning, and Artificial Intelligence are all the same

This is one of the most famous misconceptions, and almost every early-stage learner has heard it. In reality, Deep Learning is a subset of Machine Learning, and Machine Learning is a subset of Artificial Intelligence. This relationship can also be represented as a Venn diagram of nested sets. The difference, with examples, is covered here.

Machine Learning can predict the future

Machine Learning models can predict the future, but this statement is only half true. It comes with a condition that we generally ignore: Machine Learning models can predict the future only if future events resemble past events. More formally, they work only if history repeats itself.

For example, machine learning-based forecasting models can predict stock prices from previous days' prices, weather conditions from similar prior dates, and production estimates from the output of prior years. There are many more examples. But if we ask these models to predict patterns that were not present in the data used to build them, they will fail.
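To make this concrete, here is a minimal sketch in plain Python. The data, the `fit_line` helper, and the "shock" scenario are all hypothetical and chosen only for illustration. A simple trend model is fitted on 30 days of history and then evaluated on two possible futures: one where the old pattern continues and one where it changes.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical "history": a quantity that grows by 2 units per day for 30 days.
past_days = list(range(30))
past_values = [100 + 2 * day for day in past_days]

slope, intercept = fit_line(past_days, past_values)


def predict(day):
    """Forecast made by the model trained on the past pattern."""
    return slope * day + intercept


def actual_if_trend_continues(day):
    """Future A: history repeats itself."""
    return 100 + 2 * day


def actual_after_shock(day):
    """Future B: an unseen regime change halves the value (assumed scenario)."""
    return 0.5 * (100 + 2 * day)


future_day = 35
error_same_pattern = abs(predict(future_day) - actual_if_trend_continues(future_day))
error_new_pattern = abs(predict(future_day) - actual_after_shock(future_day))

print("error when the past pattern continues:", error_same_pattern)
print("error when the pattern changes:", error_new_pattern)
```

The model is accurate only in the first future. Nothing in its training data hinted at the second one, so its forecast there is far off.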

Machine Learning technology can solve any problem

Machine Learning models cannot solve every problem in the world. One of the most famous quotes about machine learning says: “A baby learns to crawl, walk and then run. We are in the crawling stage when it comes to applying machine learning.” ~Dave Waters

Algorithms developed so far are each designed to solve a particular problem statement. Companies like Facebook and Google have started looking at Machine Learning from a “Non-Supervised Learning” (unsupervised) perspective, where no labeled data is available and machines learn on their own. They argue that Supervised Learning alone cannot be the future because it depends on annotating or labeling massive datasets.

One Machine Learning algorithm will be sufficient

This is another common misconception in Machine Learning and Deep Learning. People think that one machine learning algorithm is sufficient to solve all the problems in this domain. The reasoning is that algorithms have evolved, so newer algorithms must be powerful enough to solve everything that earlier algorithms could. But this is not right.

Logistic Regression, for example, cannot solve regression problems: despite its name, it is a classification algorithm. It is true that algorithms have evolved, but this evolution has focused on solving entirely different or more advanced problems. So the choice of algorithm depends on the problem statement that we want to solve.
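The Logistic Regression point can be illustrated with a small sketch. The weight and bias below are hypothetical values, not the result of any real training run. The model passes a linear score through the logistic (sigmoid) function, so its raw output is always a probability between 0 and 1, which suits a yes/no decision but not a continuous target such as a price.

```python
import math


def sigmoid(z):
    """Logistic function: squashes any real-valued score into a probability."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical parameters of an already-fitted one-feature model.
weight, bias = 0.8, -2.0


def predict_probability(x):
    """Probability that the input belongs to class 1."""
    return sigmoid(weight * x + bias)


def predict_class(x, threshold=0.5):
    """Turn the probability into a class label (0 or 1)."""
    return 1 if predict_probability(x) >= threshold else 0


inputs = [-10.0, 0.0, 2.5, 10.0, 20.0]
probabilities = [predict_probability(x) for x in inputs]
labels = [predict_class(x) for x in inputs]

for x, p, label in zip(inputs, probabilities, labels):
    print(f"x={x:6.1f}  probability={p:.4f}  class={label}")
```

However large the input gets, the output never leaves the 0 to 1 range, so a different algorithm (for example, linear regression) is needed when the target is a continuous quantity.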

With the help of Machine Learning, robots will have human-level intelligence

This misconception is widely spread by sci-fi movies. The Machine Learning algorithms developed so far cannot even make decisions on their own, yet we imagine that robots will achieve human-level intelligence. In other words, we can say with confidence that humans still control them.

Many scientists are working on Artificial General Intelligence (AGI) to make machines capable of making decisions independently. Only once this technology is developed will we be able to say that humans will soon build super-intelligent robots.

There will always be human involvement in the process of Machine Learning

This is one of the most interesting misconceptions among readers. Earlier, we said that ML has not evolved that much, but in this respect, Machine Learning has evolved to the point where some tasks no longer require human intervention. Machine Learning or Deep Learning algorithms can now automatically generate short news items or articles from data available on the internet, with zero intervention from humans.

So, with advanced algorithms, we can remove human involvement altogether once a particular machine learning model has been trained. For example, videos can be generated automatically with “deep fake” technology, which can place a fake face of any human being into footage. They look so real that some people believe the person shown actually appeared in the video. So, human intervention may be reduced to zero in the case of machine learning.

The more features in the data, the better the Machine Learning model

In Deep Learning, it is often said that a model's accuracy depends on the amount of data we feed it. But the capacity of our machines, hardware, and computation power will be the biggest bottleneck here: we will not be able to provide all the data to our machine learning model at once.

Sometimes, mining more features from the same dataset may not be helpful. Models can overfit or become biased towards one or more features because a newly extracted feature is highly correlated with existing features.
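One simple way to spot such a redundant feature is to measure its correlation with the features we already have. The sketch below uses a tiny made-up dataset and a hand-written `pearson` helper (both are assumptions for illustration): a "new" feature derived from an existing one carries almost no new information.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (std_x * std_y)


# Hypothetical dataset with two original features.
height_cm = [150.0, 160.0, 165.0, 172.0, 180.0, 191.0]
shoe_size = [36.0, 41.0, 38.0, 43.0, 40.0, 45.0]

# A "new" feature mined from the same data: height converted to inches.
height_in = [h / 2.54 for h in height_cm]

corr_derived = pearson(height_cm, height_in)
corr_other = pearson(height_cm, shoe_size)

print("height_cm vs height_in:", round(corr_derived, 4))
print("height_cm vs shoe_size:", round(corr_other, 4))
```

A correlation very close to 1 suggests that the extracted feature mostly duplicates an existing one, so adding it increases the feature count without adding information.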

The purpose of Machine Learning Models is to deliver high accuracy

This is also one of the most prevalent misconceptions, and it persists even among many advanced learners. The role of Machine Learning algorithms is to provide "higher modularity" rather than "higher accuracy." There may be situations where no ML algorithm beats the existing traditional algorithm in terms of accuracy. Still, an ML model can win if it performs better in unexpected scenarios or has to handle exceptional cases.

For example, suppose a traditional algorithm calculates the target variable for our supervised learning model. Our machine learning model will try to reach 100% accuracy with respect to that conventional algorithm. Since it will never reach exactly 100%, our Machine Learning model will never beat the conventional approach on accuracy. So why use ML at all? Because the overall complexity and computation power required by the conventional algorithm may be higher than what the machine learning model needs. In such a case, replacing the traditional algorithm with a machine learning model will be beneficial.
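The trade-off can be sketched as follows. Everything here is an assumption made for illustration: the "conventional algorithm" is a deliberately slow numerical integration, and the "machine learning model" is just a straight line fitted to its outputs. The fitted model never matches the conventional algorithm exactly, but each prediction costs one multiplication and one addition instead of thousands of loop iterations.

```python
def conventional_algorithm(x, steps=10_000):
    """Hypothetical expensive rule: midpoint-rule integral of 1 / (1 + t) from 0 to x."""
    width = x / steps
    return sum(width / (1.0 + (k + 0.5) * width) for k in range(steps))


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# The conventional algorithm provides the training labels.
train_x = [i / 20 for i in range(21)]
train_y = [conventional_algorithm(x) for x in train_x]
slope, intercept = fit_line(train_x, train_y)


def surrogate_model(x):
    """Cheap learned approximation of the conventional algorithm."""
    return slope * x + intercept


# Evaluate on inputs that were not used for fitting.
test_x = [0.025 + i / 10 for i in range(10)]
max_error = max(abs(surrogate_model(x) - conventional_algorithm(x)) for x in test_x)

print("largest disagreement with the conventional algorithm:", max_error)
```

The error is small but never zero, which matches the argument above: the model cannot beat its own teacher on accuracy, so the case for using it rests on cost and flexibility.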

Conclusion

Being surrounded by myths is a natural stage of learning, and we encourage it because it shows that you are exploring the field. But as true learners, it is our responsibility to find the facts behind every tale in any domain.

We hope this article has cleared up the misconceptions that are most popular among early learners.

Enjoy Learning, Enjoy Algorithms!

©2023 Code Algorithms Pvt. Ltd.

All rights reserved.