Probability For Machine Learning

Data is one of the most important ingredients for building good machine learning models. The better you understand your data, the better your model is likely to be, because you will be able to explain why it performs the way it does. Probability is one of the most important mathematical tools for understanding patterns in data.

In this article, we will not only describe the theoretical aspects of probability but also give you a sense of where they are used in ML.

So, let’s start without any further delay.

Having originated in the study of “games of chance”, probability is a branch of mathematics concerned with how likely it is that a proposition is true.

If we look carefully, everyday phenomena fall into two types:

- Deterministic: Phenomena whose outcome is certain. For example, picking a white ball from a bag that contains only white balls.

- Indeterministic: Phenomena whose outcome we cannot be sure of. For example, when a fair die is rolled, we cannot know in advance whether it will show a 1.

Mathematically, probability can be defined as follows:

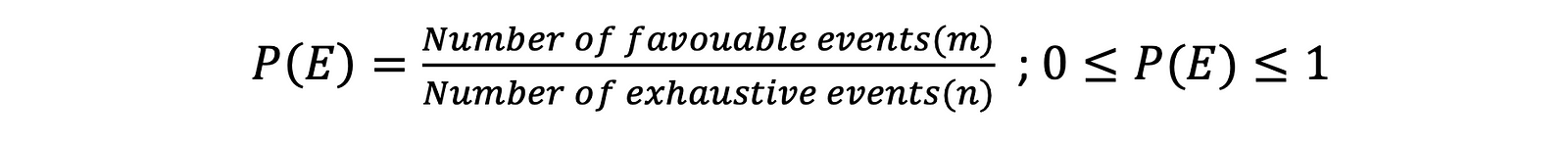

If a random experiment has n > 0 mutually exclusive, exhaustive, and equally likely outcomes, and m of them (0 ≤ m ≤ n) are favorable to an event E, then the probability of occurrence of E is

$$P(E) = \frac{m}{n}$$

so that 0 ≤ P(E) ≤ 1.
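A minimal sketch of the classical definition, assuming a finite sample space of equally likely outcomes. The helper `classical_probability` is a hypothetical name used only for illustration; it counts the m favorable outcomes and divides by n, using `fractions.Fraction` to keep the result exact.

```python
from fractions import Fraction

def classical_probability(outcomes, is_favorable):
    """P(E) = m / n for a finite set of equally likely outcomes."""
    n = len(outcomes)                                 # total number of outcomes
    m = sum(1 for o in outcomes if is_favorable(o))   # favorable outcomes
    return Fraction(m, n)

die = [1, 2, 3, 4, 5, 6]
p_even = classical_probability(die, lambda face: face % 2 == 0)
p_one = classical_probability(die, lambda face: face == 1)
print(p_even, p_one)  # 1/2 1/6
```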

Some common terms:

- Random experiment: An experiment or process whose outcome cannot be predicted with certainty, such as throwing a die.

- Trials and Events: Performing a random experiment once is called a trial, and its outcomes are called events. Throwing a die is a trial, and the number it shows (any number from 1 to 6) is an event.

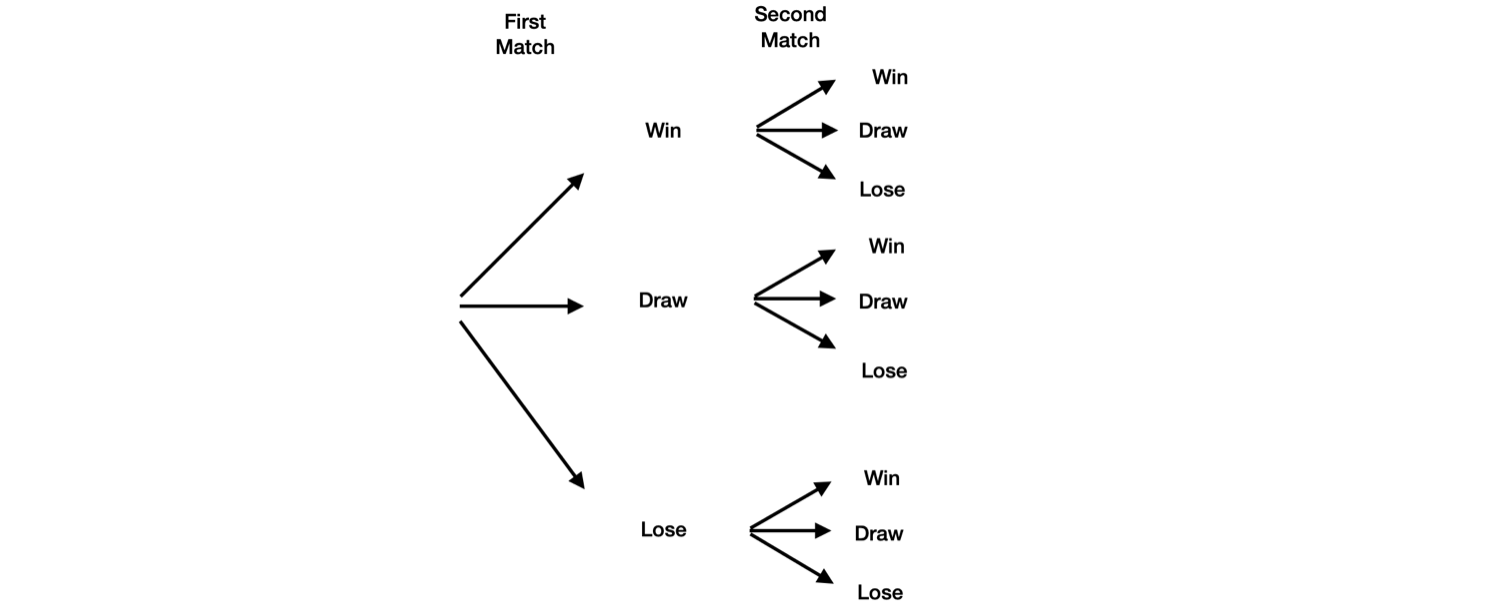

- Multiplication Rule: If an experiment has r components, where the 1st component has K₁ possible outcomes, the 2nd has K₂ possible outcomes, …, and the r-th has Kᵣ possible outcomes, then the whole experiment has K₁ × K₂ × … × Kᵣ possible outcomes.

- Sampling: A technique for choosing a sample from a larger population. A sample is a probability sample if its members are chosen by random selection.
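The multiplication rule and random sampling can both be checked with Python's standard library. This is an illustrative sketch: the three components (a coin, a die, and a card suit) and the population of 100 numbered items are made up for the example.

```python
import itertools
import random

# Multiplication rule: a coin (2 outcomes), a die (6) and a card suit (4)
coin = ["H", "T"]
die = [1, 2, 3, 4, 5, 6]
suit = ["clubs", "diamonds", "hearts", "spades"]

all_outcomes = list(itertools.product(coin, die, suit))
print(len(all_outcomes), 2 * 6 * 4)  # 48 48

# Probability sampling: simple random sampling without replacement,
# where every member of the population has the same chance of selection
population = list(range(1, 101))
rng = random.Random(0)               # seeded so the run is reproducible
sample = rng.sample(population, k=10)
print(sample)
```

Because the generator is seeded, the same sample is drawn on every run; drop the seed to get a fresh random sample.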

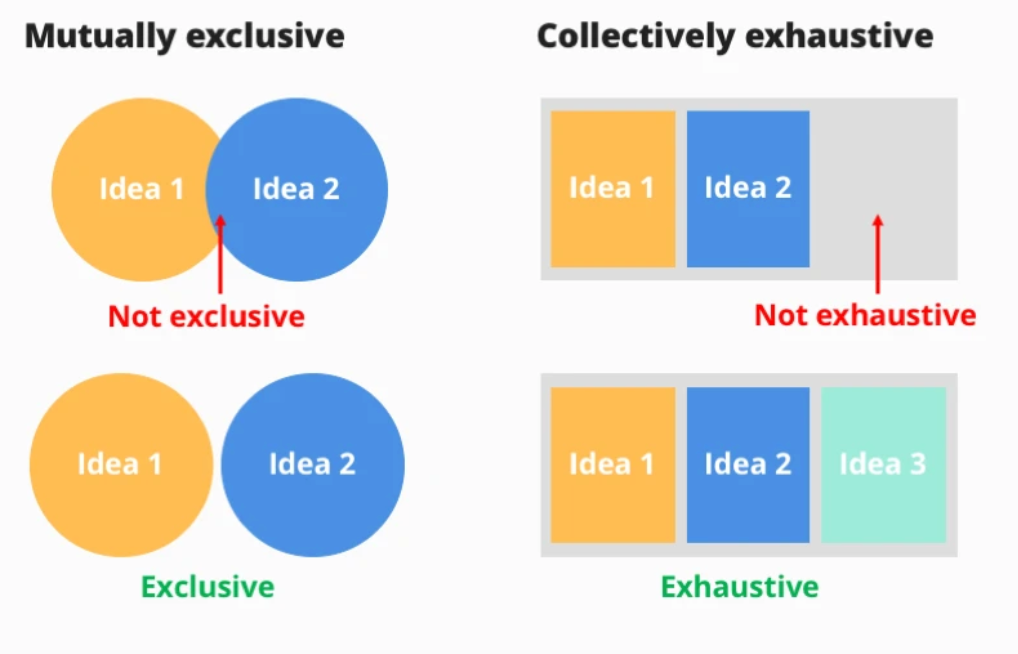

- Exhaustive Events: The set of all possible outcomes of a random experiment. Rolling a die once has 6 exhaustive events; rolling it n times has 6ⁿ exhaustive events.

- Mutually Exclusive Events: Events that cannot occur at the same time, so that P(A ∩ B) = 0. For example, getting a 5 and getting a 6 on a single roll of a die.

- Equally Likely Events: Events that have the same chance of occurring, so that none can be expected in preference to another. For example, each of the six faces is equally likely to come up when a fair die is rolled.

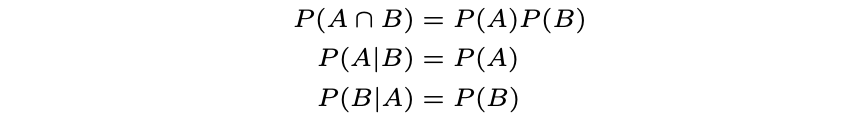

- Independent Events: Events A and B are independent if the occurrence of one does not change the probability of occurrence of the other. Mathematically, independent events must satisfy

  $$P(A \cap B) = P(A)\,P(B)$$

- Conditionally Independent Events: Given an event C, events A and B are conditionally independent if P(A ∩ B | C) = P(A | C) P(B | C). Conditional independence does not imply independence, and independence does not imply conditional independence.
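One way to check the independence condition P(A ∩ B) = P(A) P(B) is to enumerate the 36 equally likely outcomes of rolling two fair dice. The events and the `prob` and `independent` helpers below are hypothetical examples for illustration, not a general-purpose library.

```python
from fractions import Fraction
from itertools import product

# Sample space of rolling two fair dice: 36 equally likely ordered pairs
space = list(product(range(1, 7), repeat=2))

def prob(event):
    """Classical probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for s in space if event(s)), len(space))

def independent(a, b):
    """Check the definition P(A ∩ B) == P(A) * P(B)."""
    return prob(lambda s: a(s) and b(s)) == prob(a) * prob(b)

first_even = lambda s: s[0] % 2 == 0     # A: first die is even
second_high = lambda s: s[1] >= 5        # B: second die shows 5 or 6
double_six = lambda s: s == (6, 6)       # C: both dice show 6

print(independent(first_even, second_high))  # True
print(independent(first_even, double_six))   # False
```

Events about different dice come out independent, while "double six" depends on the first die being even, so that pair fails the test.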

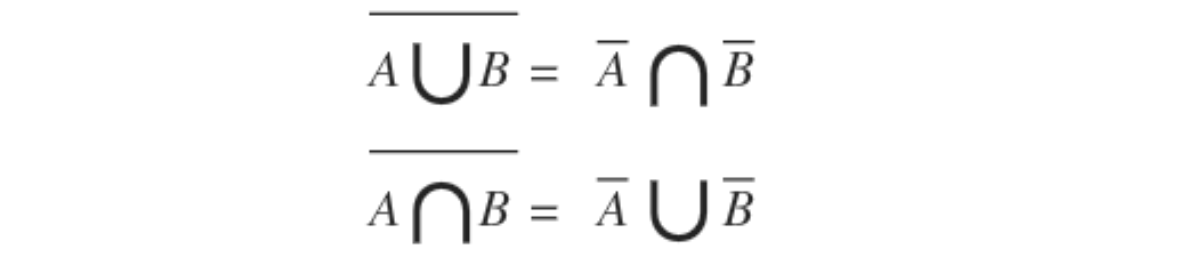

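- De Morgan’s Laws: If A̅ denotes the complement of A, then

  $$\overline{A \cup B} = \bar{A} \cap \bar{B}, \qquad \overline{A \cap B} = \bar{A} \cup \bar{B}$$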

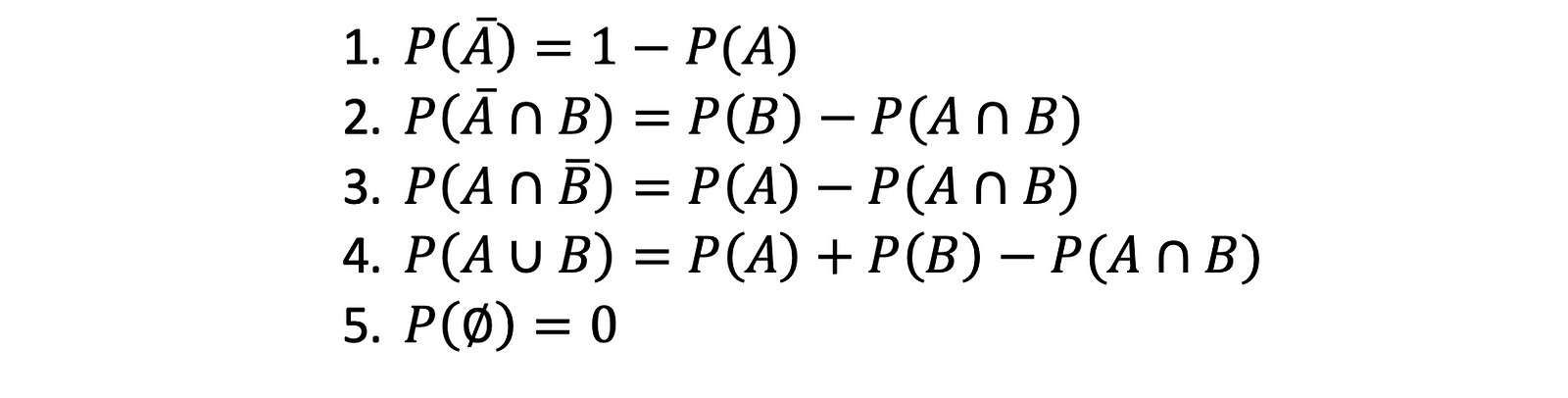
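De Morgan's laws can be verified on a small example with Python sets, where `|` is union, `&` is intersection, and subtraction from the universal set gives the complement. The events A and B are arbitrary choices for illustration.

```python
# Universal set: outcomes of one roll of a die
S = set(range(1, 7))
A = {2, 4, 6}        # even number
B = {4, 5, 6}        # number greater than 3

def complement(event):
    """Complement relative to the universal set S."""
    return S - event

# De Morgan's laws
law1 = complement(A | B) == complement(A) & complement(B)
law2 = complement(A & B) == complement(A) | complement(B)
print(law1, law2)  # True True
```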

Some basic results:

Let A and B be two events and A̅ the complement of A. Then:

- P(A̅) = 1 − P(A)

- P(A ∪ B) = P(A) + P(B) − P(A ∩ B)

- P(A ∩ B̅) = P(A) − P(A ∩ B)
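A short sketch that checks three standard identities on one roll of a fair die: the complement rule P(A̅) = 1 − P(A), the addition rule P(A ∪ B) = P(A) + P(B) − P(A ∩ B), and P(A ∩ B̅) = P(A) − P(A ∩ B). The events chosen are illustrative assumptions.

```python
from fractions import Fraction

S = set(range(1, 7))          # one roll of a fair die
A = {2, 4, 6}                 # even
B = {4, 5, 6}                 # greater than 3

def P(event):
    """Classical probability: favorable outcomes / all outcomes."""
    return Fraction(len(event), len(S))

complement_rule = P(S - A) == 1 - P(A)
addition_rule = P(A | B) == P(A) + P(B) - P(A & B)
difference_rule = P(A - B) == P(A) - P(A & B)   # A - B is A ∩ B̅
print(complement_rule, addition_rule, difference_rule)  # True True True
```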

Probability Under Statistical Independence:

Two events are statistically independent when the occurrence of one does not affect the probability of the other. Under independence, marginal, joint, and conditional probabilities take particularly simple forms.

Suppose two fair coins are tossed. The probabilities of getting heads or tails can then be classified as:

- Marginal Probability: The simple probability of getting a head (or a tail) when a coin is tossed, e.g., P(H) = 1/2. (The unconditional probability of a single event.)

- Joint Probability: The probability of getting a head with the first coin and a tail with the second when both coins are tossed simultaneously, or, when a single coin is tossed twice, the probability of getting a tail on the first toss and a head on the second. (The probability of events occurring together or in succession.) Under independence, P(A ∩ B) = P(A) P(B), e.g., 1/2 × 1/2 = 1/4.

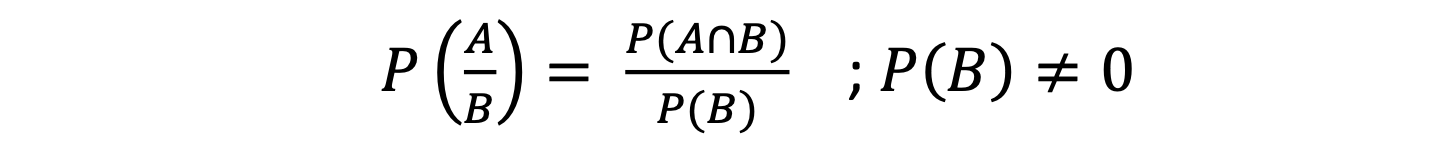

- Conditional Probability: The probability of getting a head on a toss when a tail has already occurred. (The probability of event A given that event B has already occurred.) Under independence, P(A | B) = P(A), so this is still 1/2.
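The two-coin example can be made concrete by enumerating the four equally likely outcomes. This sketch assumes fair, independent coins and shows that the joint probability equals the product of the marginals, and that conditioning on the first coin leaves the probability for the second coin unchanged.

```python
from fractions import Fraction
from itertools import product

# Two fair coins tossed together: 4 equally likely outcomes
space = list(product("HT", repeat=2))   # ('H', 'H'), ('H', 'T'), ...

def P(event):
    """Classical probability of an event given as a predicate."""
    return Fraction(sum(1 for s in space if event(s)), len(space))

p_first_head = P(lambda s: s[0] == "H")              # marginal
p_second_tail = P(lambda s: s[1] == "T")             # marginal
p_head_then_tail = P(lambda s: s == ("H", "T"))      # joint

# Conditional: P(second is H | first is T) = P(T then H) / P(first is T)
p_first_tail = P(lambda s: s[0] == "T")
p_cond = P(lambda s: s == ("T", "H")) / p_first_tail

print(p_first_head, p_head_then_tail, p_cond)  # 1/2 1/4 1/2
assert p_head_then_tail == p_first_head * p_second_tail  # joint = product
```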

Probability under Statistical Dependence:

When the probability of one event's occurrence depends on the occurrence of another event, the events are statistically dependent.

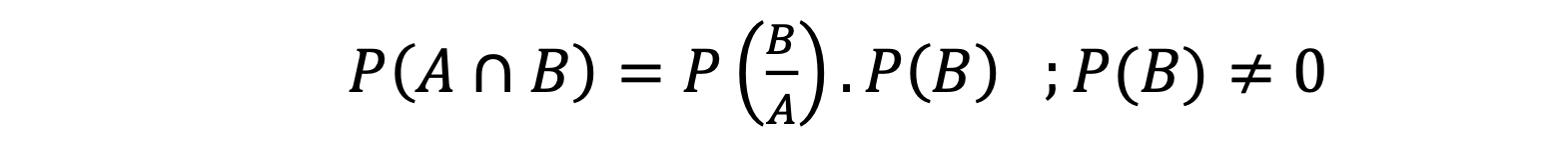

If we have two events, A and B, then:

1. Conditional Probability is the probability of occurrence of an event A given that event B has already occurred: P(A | B) = P(A ∩ B) / P(B), provided P(B) > 0.

2. Joint Probability measures two or more events happening at the same time, i.e., the probability that events A and B both occur. It applies to situations in which more than one event can occur simultaneously, and under dependence it is computed as P(A ∩ B) = P(A | B) P(B).

3. Marginal Probability of an event is obtained by summing the probabilities of all the joint events in which it is involved, e.g., P(A) = P(A ∩ B) + P(A ∩ B̅).
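To illustrate dependence, the sketch below starts from a small joint probability table and derives marginal and conditional probabilities from it. The weather/umbrella events and all the numbers in the table are hypothetical.

```python
from fractions import Fraction

# Hypothetical joint probabilities P(weather, umbrella)
joint = {
    ("rain", "umbrella"): Fraction(25, 100),
    ("rain", "no umbrella"): Fraction(5, 100),
    ("dry", "umbrella"): Fraction(10, 100),
    ("dry", "no umbrella"): Fraction(60, 100),
}

# Marginal probability: sum the joint probabilities involving the event
p_rain = sum(p for (w, _), p in joint.items() if w == "rain")
p_umbrella = sum(p for (_, u), p in joint.items() if u == "umbrella")

# Conditional probability: P(rain | umbrella) = P(rain ∩ umbrella) / P(umbrella)
p_rain_given_umbrella = joint[("rain", "umbrella")] / p_umbrella

print(p_rain, p_umbrella, p_rain_given_umbrella)  # 3/10 7/20 5/7
# Dependent events: the joint probability is not the product of the marginals
print(joint[("rain", "umbrella")] == p_rain * p_umbrella)  # False
```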

Law of Total Probability

If B₁, B₂, …, Bₙ are disjoint events whose union is the entire sample space, then the probability of an event A is

$$P(A) = P(A \cap B_1) + P(A \cap B_2) + \cdots + P(A \cap B_n) = \sum_{i=1}^{n} P(A \mid B_i)\,P(B_i)$$
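A minimal numerical sketch of the law of total probability, using the equivalent form P(A ∩ Bᵢ) = P(A | Bᵢ) P(Bᵢ). The three-machine partition and its defect rates are hypothetical numbers chosen for the example.

```python
from fractions import Fraction

# Hypothetical partition: three machines B1, B2, B3 share the output
prior = {"B1": Fraction(50, 100), "B2": Fraction(30, 100), "B3": Fraction(20, 100)}
# Hypothetical defect rates P(A | Bi), where A = "item is defective"
likelihood = {"B1": Fraction(1, 100), "B2": Fraction(2, 100), "B3": Fraction(3, 100)}

assert sum(prior.values()) == 1   # the Bi must cover the whole sample space

# P(A) = sum_i P(A ∩ Bi) = sum_i P(A | Bi) P(Bi)
p_defective = sum(likelihood[b] * prior[b] for b in prior)
print(p_defective)  # 17/1000
```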

Bayes’ Theorem:

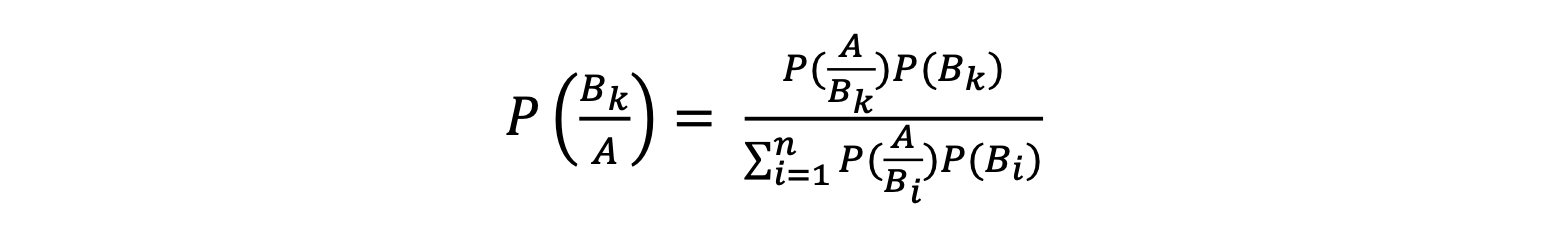

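Let S be a sample space such that B₁, B₂, …, Bₙ form a partition of S, and let A be an arbitrary event with P(A) > 0. Then, for each k = 1, 2, …, n,

$$P(B_k \mid A) = \frac{P(A \mid B_k)\,P(B_k)}{\sum_{i=1}^{n} P(A \mid B_i)\,P(B_i)}$$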

𝑃(𝐵𝑖), 𝑖 = 1, 2, …, 𝑛, are called the prior probabilities of the events 𝐵𝑖.

𝑃(𝐵𝑘 | 𝐴) is the posterior probability of 𝐵𝑘 given that 𝐴 has occurred.
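The sketch below applies Bayes' theorem to a hypothetical factory with three machines B₁, B₂, B₃ (assumed output shares and defect rates), turning the priors P(Bᵢ) into posteriors P(Bᵢ | A) for A = "item is defective". `bayes_posterior` is an illustrative helper, not a library function.

```python
from fractions import Fraction

def bayes_posterior(prior, likelihood):
    """Return P(Bk | A) for every Bk in a partition, via Bayes' theorem."""
    evidence = sum(likelihood[b] * prior[b] for b in prior)   # P(A), total probability
    return {b: likelihood[b] * prior[b] / evidence for b in prior}

# Hypothetical numbers: machine shares P(Bi) and defect rates P(A | Bi)
prior = {"B1": Fraction(1, 2), "B2": Fraction(3, 10), "B3": Fraction(1, 5)}
likelihood = {"B1": Fraction(1, 100), "B2": Fraction(2, 100), "B3": Fraction(3, 100)}

posterior = bayes_posterior(prior, likelihood)
print(posterior)  # {'B1': Fraction(5, 17), 'B2': Fraction(6, 17), 'B3': Fraction(6, 17)}
```

With these assumed numbers, B₁ has the largest prior (1/2) but the smallest posterior (5/17), because its defect rate is the lowest.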

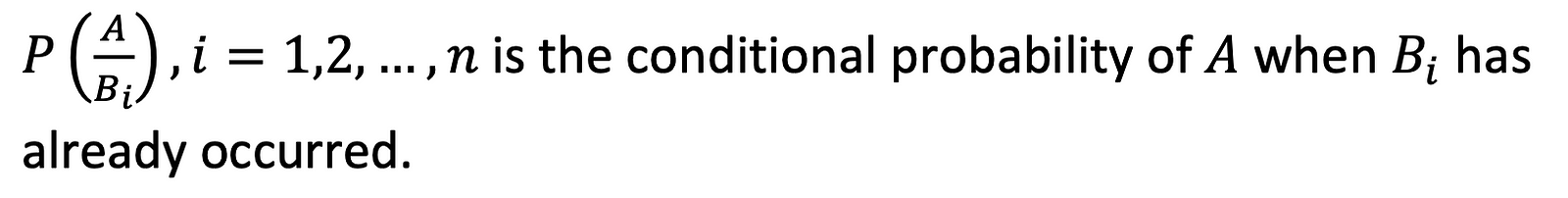

In the context of the above image,

P(chill) := Probability that you are chilling out.

P(Netflix) := Probability that you are watching Netflix.

P(chill | Netflix) := Probability that you are chilling out, given that you are watching Netflix.

P(Netflix | chill) := Probability that you are watching Netflix, given that you are chilling out.

Random Variables:

Unlike a variable in an algebraic equation, whose unknown value is found by solving the equation, a random variable takes different values depending on the outcome of a random experiment. It is simply a rule that assigns a number to each possible outcome of an experiment.

Mathematically, a random variable is a function X (or Y, Z, …) that maps the elements of a sample space S to a measurable space E, usually the real numbers, i.e.,

$$X : S \to E$$

Random variables are of two types:

- Discrete random variables: take a finite (or countably infinite) number of distinct values, typically counts.

  Ex.: The number of times a head occurs when a coin is tossed three times.

- Continuous random variables: take any value within a range.

  Ex.: The amount of sugar in 10 ml of orange juice.
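A random variable is literally a function on the sample space. This illustrative sketch defines X as the number of heads in three coin tosses and derives its distribution by counting outcomes; the names `S` and `X` simply mirror the notation above.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Sample space S: all outcomes of tossing a fair coin three times
S = ["".join(t) for t in product("HT", repeat=3)]   # 'HHH', 'HHT', ...

def X(outcome):
    """Random variable X: S -> {0, 1, 2, 3}, the number of heads."""
    return outcome.count("H")

print({s: X(s) for s in S})

# Each value of X inherits the probability of the outcomes mapped to it
counts = Counter(X(s) for s in S)
dist = {x: Fraction(c, len(S)) for x, c in sorted(counts.items())}
print(dist)
```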

Probability Distribution Function

Just as a frequency distribution lists the observed frequencies of the outcomes of a random experiment, a probability distribution lists the probabilities of all the possible outcomes.

Probability Distribution defines the likelihood of possible values that a random variable can take.

Probability distributions are of two types:

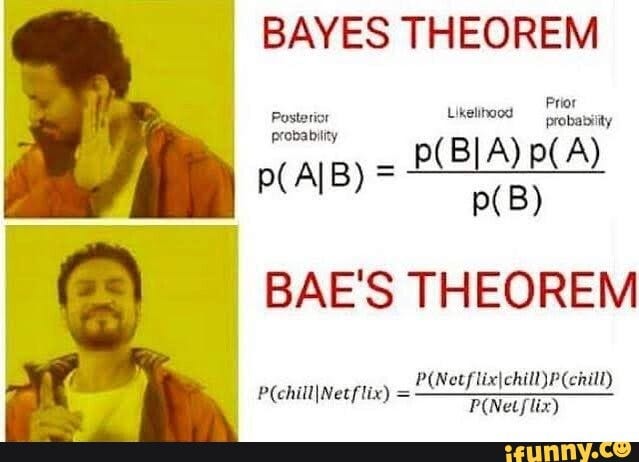

- Discrete Probability Distribution: assigns probabilities to the countable values of a discrete random variable.

Ex.: The number of heads when a fair coin is tossed three times.

- Continuous Probability Distribution: In this distribution, the variable can take any value in a given interval.

Ex.: The probability of picking a real number between 0 and 1.

Functions related to probability distributions:

For Discrete Probability Distribution

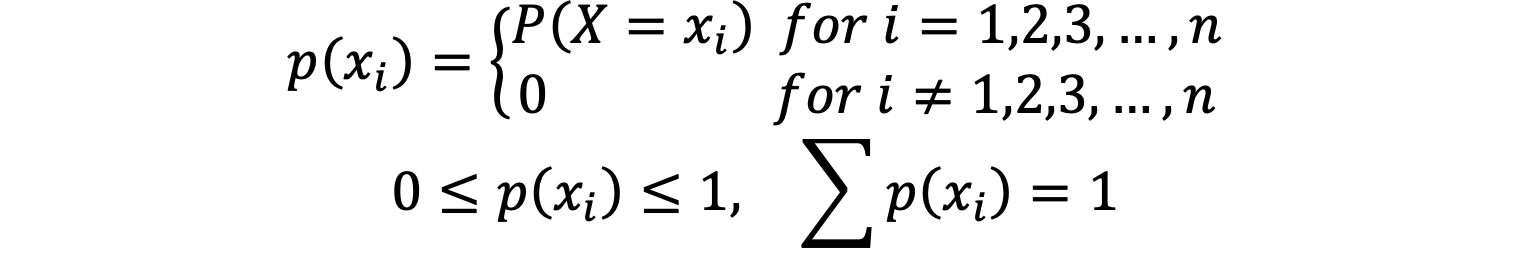

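- Probability Mass Function (P.M.F.): The PMF assigns a probability to every possible value of a discrete random variable. If X is a discrete random variable taking the values x₁, x₂, …, xₙ with probabilities p₁, p₂, …, pₙ, then the PMF of X is given by

  $$P(X = x_i) = p_i, \qquad p_i \ge 0, \qquad \sum_{i=1}^{n} p_i = 1$$

- Cumulative Distribution Function (C.D.F.): The CDF gives the probability that X takes a value less than or equal to x:

  $$F(x) = P(X \le x) = \sum_{x_i \le x} P(X = x_i)$$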
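A minimal sketch of a PMF and its CDF for the number of heads in three fair coin tosses, assuming the counts come from the binomial coefficient C(3, x). The CDF is a step function, so it is also defined for non-integer x.

```python
from fractions import Fraction
from math import comb

# PMF of X = number of heads in three tosses of a fair coin
pmf = {x: Fraction(comb(3, x), 2**3) for x in range(4)}

def cdf(x):
    """F(x) = P(X <= x): sum the PMF over all values not exceeding x."""
    return sum((p for xi, p in pmf.items() if xi <= x), Fraction(0))

# A valid PMF is non-negative and sums to 1
assert all(p >= 0 for p in pmf.values()) and sum(pmf.values()) == 1
print([cdf(x) for x in (-1, 0, 1, 2, 3)])
```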

For Continuous Probability Distribution:

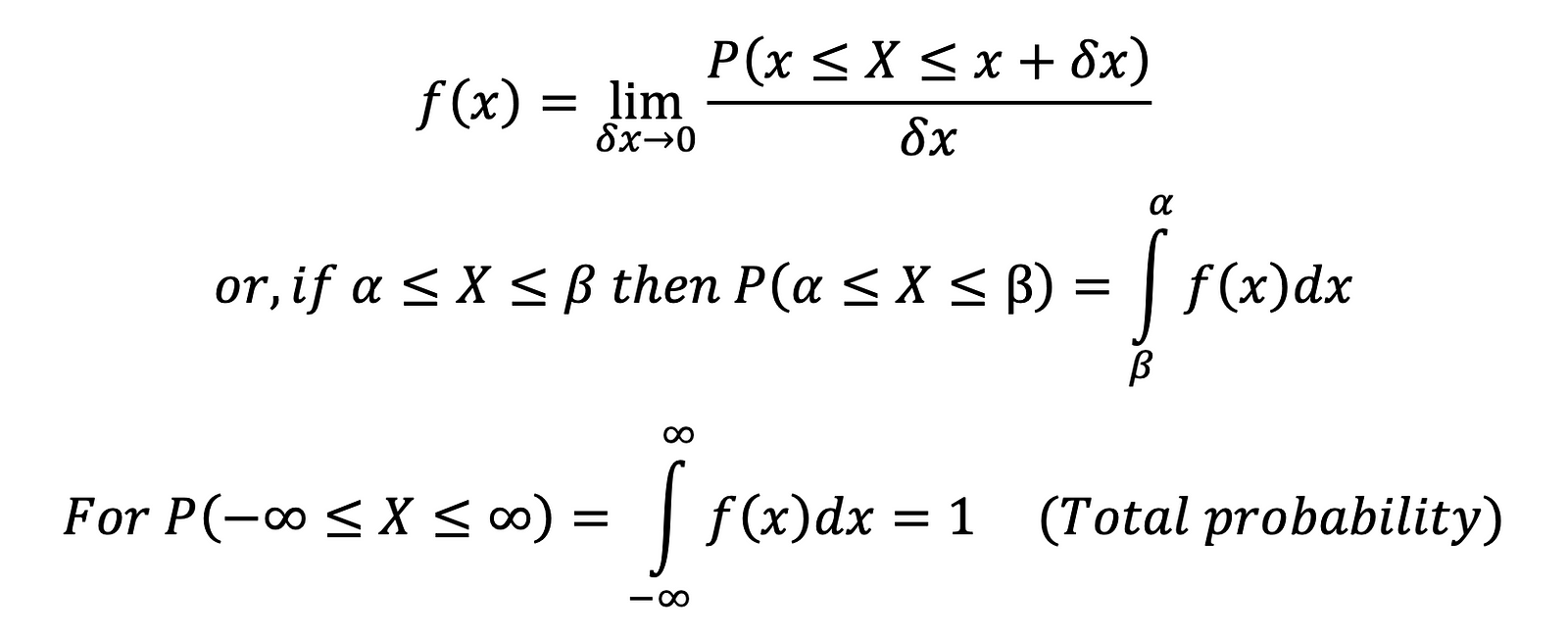

- Probability Density Function (P.D.F.): Probabilities of continuous random variables are defined through the PDF. The density f(x) must be greater than or equal to zero everywhere but, unlike a probability, it need not be less than or equal to 1; what must hold is that the total area under f equals 1.

Let 𝑋 be a continuous random variable. Then its probability density function f satisfies

$$P(a \le X \le b) = \int_a^b f(x)\,dx, \qquad f(x) \ge 0, \qquad \int_{-\infty}^{\infty} f(x)\,dx = 1$$

Note: In the case of a discrete random variable, the probability at a point can be nonzero, but in the case of a continuous random variable, the probability at any single point is always zero, i.e., 𝑃(𝑋 = 𝑐) = 0. Thus, for a continuous random variable,

𝑃(𝑎 ≤ 𝑋 ≤ 𝑏) = 𝑃(𝑎 < 𝑋 < 𝑏) = 𝑃(𝑎 ≤ 𝑋 < 𝑏) = 𝑃(𝑎 < 𝑋 ≤ 𝑏)
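These properties can be checked numerically. This sketch assumes a uniform density on [0, 0.5], whose value 2 is greater than 1 even though every probability stays at most 1, and approximates integrals with a simple midpoint rule (`integrate` is a hypothetical helper, not a library routine).

```python
def f(x):
    """PDF of a uniform random variable on [0, 0.5]: the density is 2 there."""
    return 2.0 if 0.0 <= x <= 0.5 else 0.0

def integrate(func, a, b, n=100_000):
    """Midpoint-rule approximation of the integral of func over [a, b]."""
    h = (b - a) / n
    return h * sum(func(a + (i + 0.5) * h) for i in range(n))

total_area = integrate(f, -1.0, 1.0)     # should be close to 1
p_between = integrate(f, 0.1, 0.3)       # P(0.1 <= X <= 0.3), close to 0.4
point_mass = integrate(f, 0.2, 0.2)      # zero-width interval: P(X = 0.2) = 0
print(round(total_area, 4), round(p_between, 4), point_mass)
```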

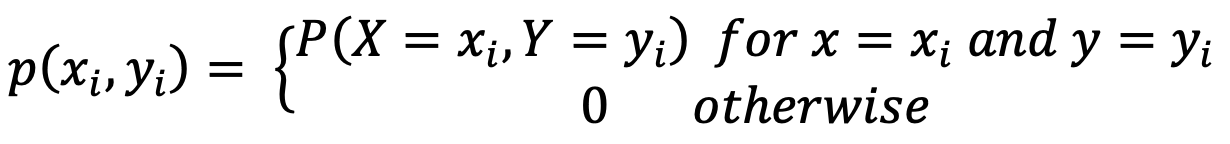

When we want the probability distribution of two or more random variables considered together, we use the joint probability distribution.

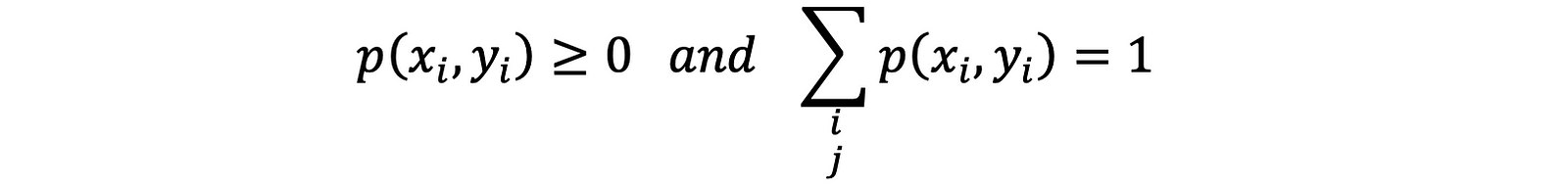

For discrete random variables, the joint PMF is given by

$$p(x, y) = P(X = x, Y = y)$$

where $p(x, y) \ge 0$ and $\sum_x \sum_y p(x, y) = 1$.

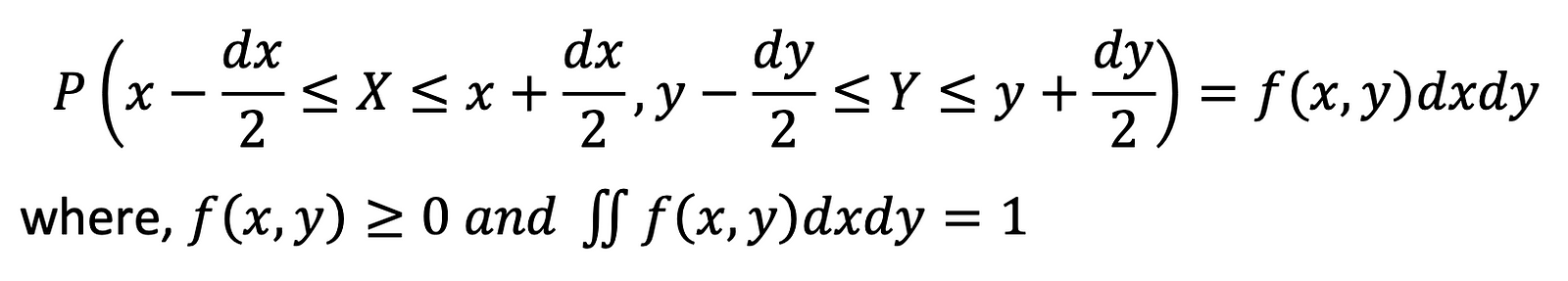

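For continuous random variables, the joint PDF f(x, y) is defined by

$$P(a \le X \le b,\; c \le Y \le d) = \int_a^b \int_c^d f(x, y)\,dy\,dx$$

with $f(x, y) \ge 0$ and a total integral of 1 over the whole plane.

If the probability distribution is needed only for a subset of the variables, the marginal probability distribution is used. It is useful when we want the probabilities of a specific set of input variables regardless of the values taken by the other inputs.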

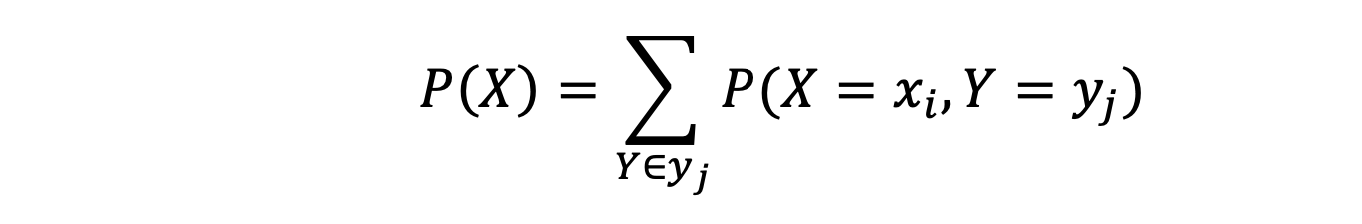

For discrete variables, the marginal distribution of X is

$$p_X(x) = \sum_y p(x, y)$$

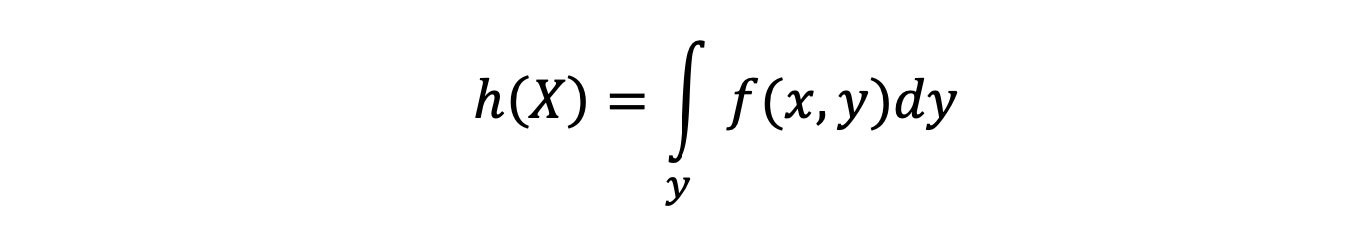

For continuous variables, the marginal distribution of X is

$$f_X(x) = \int_{-\infty}^{\infty} f(x, y)\,dy$$

Sometimes we have to compute the probability of an event given that a different event has occurred. The corresponding distribution is called the conditional probability distribution.
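As an illustrative sketch, take two fair coins with X = 1 if the first coin shows heads (else 0) and Y = the total number of heads. The code builds the joint PMF by enumeration and then sums out the other variable to get each marginal, following the discrete marginal formula.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

# Two fair coins; X = 1 if the first coin is heads, Y = total number of heads
joint = defaultdict(Fraction)
for toss in product("HT", repeat=2):
    x = int(toss[0] == "H")
    y = toss.count("H")
    joint[(x, y)] += Fraction(1, 4)      # each toss sequence has probability 1/4

# Marginals: sum the joint PMF over the other variable
p_x = defaultdict(Fraction)
p_y = defaultdict(Fraction)
for (x, y), p in joint.items():
    p_x[x] += p
    p_y[y] += p

print(dict(joint))
print(dict(p_x), dict(p_y))
```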

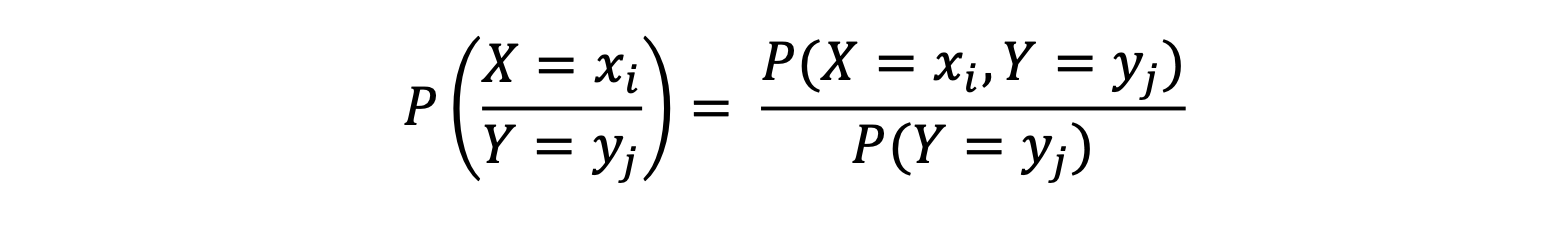

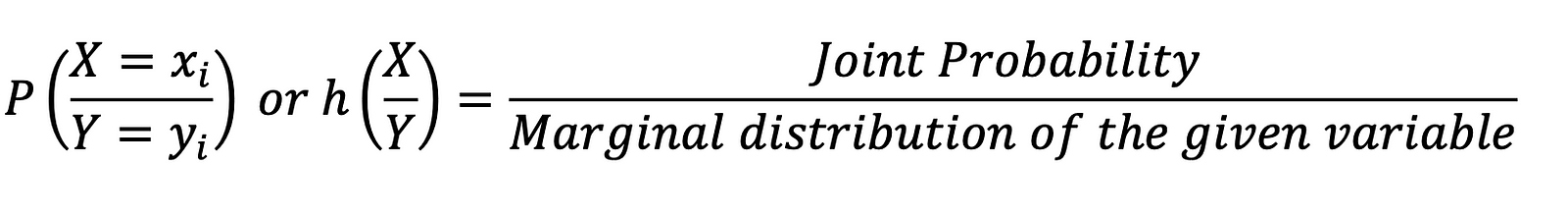

For discrete random variables, the conditional distribution of X given Y = y is

$$p_{X \mid Y}(x \mid y) = \frac{p(x, y)}{p_Y(y)}, \qquad p_Y(y) > 0$$

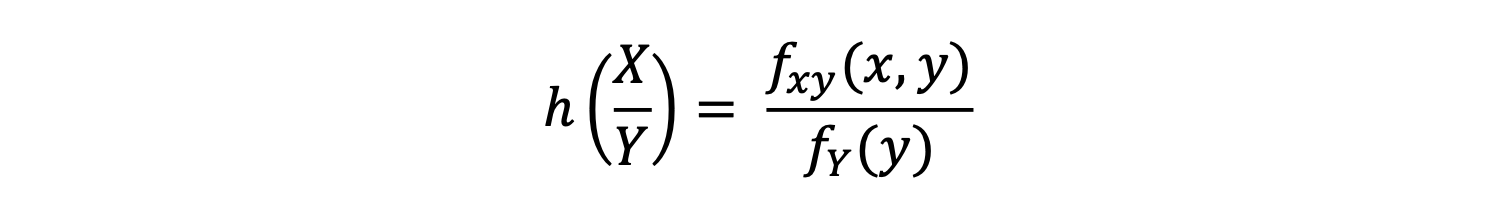

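For continuous variables, the conditional density of X given Y = y is

$$f_{X \mid Y}(x \mid y) = \frac{f(x, y)}{f_Y(y)}, \qquad f_Y(y) > 0$$

Thus, the conditional distribution of one variable given another equals their joint distribution divided by the marginal distribution of the conditioning variable:

$$\text{conditional} = \frac{\text{joint}}{\text{marginal}}$$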
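The rule "conditional = joint / marginal" can be sketched for discrete variables. The setup is an illustrative assumption: two fair coins, X = 1 if the first coin shows heads, and Y = the total number of heads. `conditional_x_given_y` is a hypothetical helper name.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

# Joint PMF of X (first coin is heads) and Y (total heads) for two fair coins
joint = defaultdict(Fraction)
for toss in product("HT", repeat=2):
    joint[(int(toss[0] == "H"), toss.count("H"))] += Fraction(1, 4)

def conditional_x_given_y(y):
    """p(x | y) = p(x, y) / p_Y(y), defined only when p_Y(y) > 0."""
    p_y = sum(p for (_, yy), p in joint.items() if yy == y)
    if p_y == 0:
        raise ValueError(f"P(Y = {y}) is zero; the conditional is undefined")
    return {x: p / p_y for (x, yy), p in joint.items() if yy == y}

print(conditional_x_given_y(1))   # first coin is H or T equally often
print(conditional_x_given_y(2))   # two heads forces the first coin to be H
```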

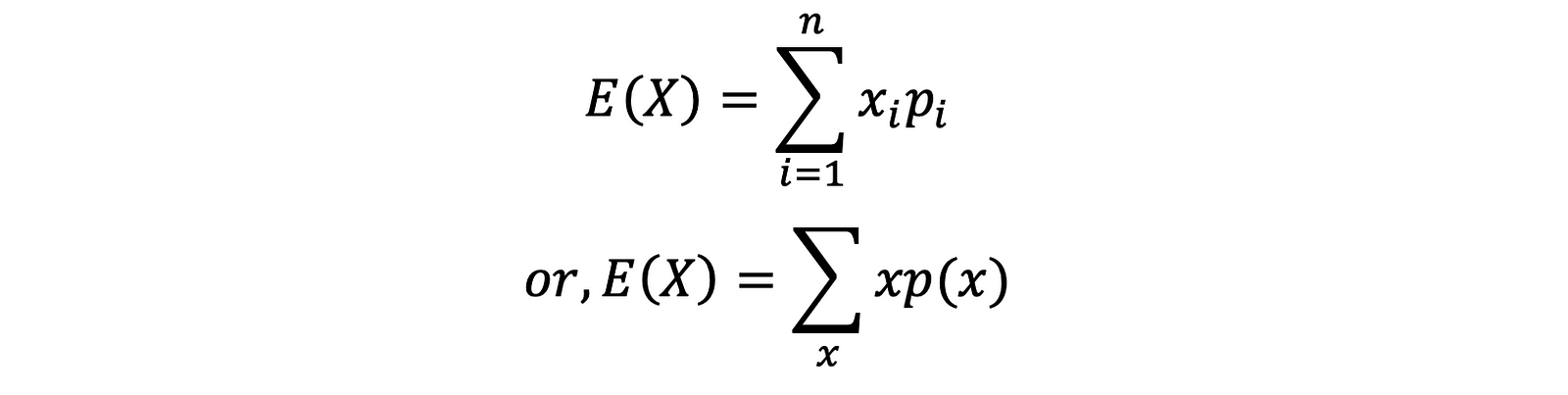

Mathematical Expectation:

The expectation of a random variable is the probability-weighted average of the values it can take.

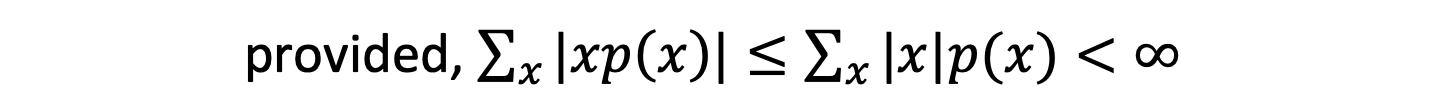

If X is a discrete random variable taking the values 𝑥1, 𝑥2, …, 𝑥𝑛 with corresponding probabilities 𝑝1, 𝑝2, …, 𝑝𝑛, then the expectation (expected value) of X is

$$E[X] = \sum_{i=1}^{n} x_i\,p_i$$

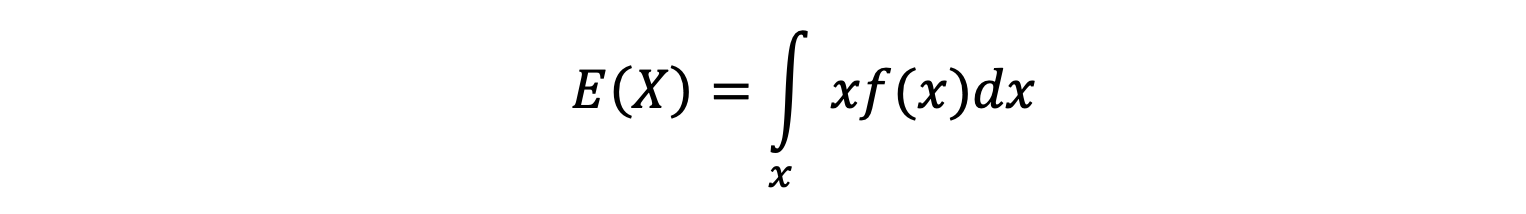

If X is a continuous random variable with probability density function 𝑓(𝑥), then the expected value of X is

$$E[X] = \int_{-\infty}^{\infty} x\,f(x)\,dx$$
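Both definitions of expectation can be evaluated directly: an exact sum for a fair die, and a midpoint Riemann sum standing in for the integral when X is uniform on [0, 1]. The uniform example and the number of steps are assumptions made for illustration.

```python
from fractions import Fraction

# Discrete: a fair die, E[X] = sum of x_i * p_i
expected_die = sum(x * Fraction(1, 6) for x in range(1, 7))
print(expected_die)  # 7/2

def f(x):
    """PDF of Uniform(0, 1)."""
    return 1.0 if 0.0 <= x <= 1.0 else 0.0

# Continuous: E[X] = integral of x f(x) dx, approximated with a midpoint sum
n = 100_000
h = 1.0 / n
midpoints = ((i + 0.5) * h for i in range(n))
expected_uniform = sum(x * f(x) * h for x in midpoints)
print(round(expected_uniform, 6))  # 0.5
```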

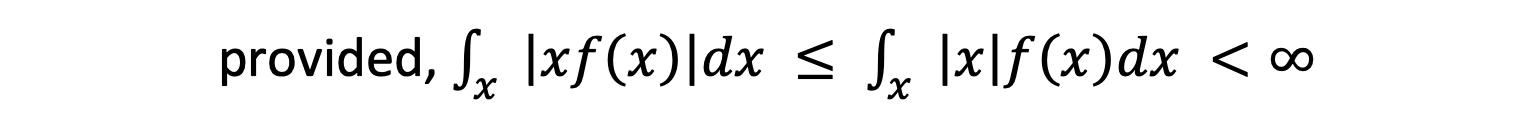

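Variance: Variance measures the spread of a random variable: the average squared distance of its values from the mean.

Mathematically, let X be a random variable (discrete or continuous) with mean 𝜇 and PMF or PDF 𝑓(𝑥). Then the variance of X [denoted var(X) or 𝜎²] is

$$\operatorname{var}(X) = E\big[(X - \mu)^2\big] = E[X^2] - \mu^2,$$

which is $\sum_x (x - \mu)^2 f(x)$ in the discrete case and $\int_{-\infty}^{\infty} (x - \mu)^2 f(x)\,dx$ in the continuous case.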

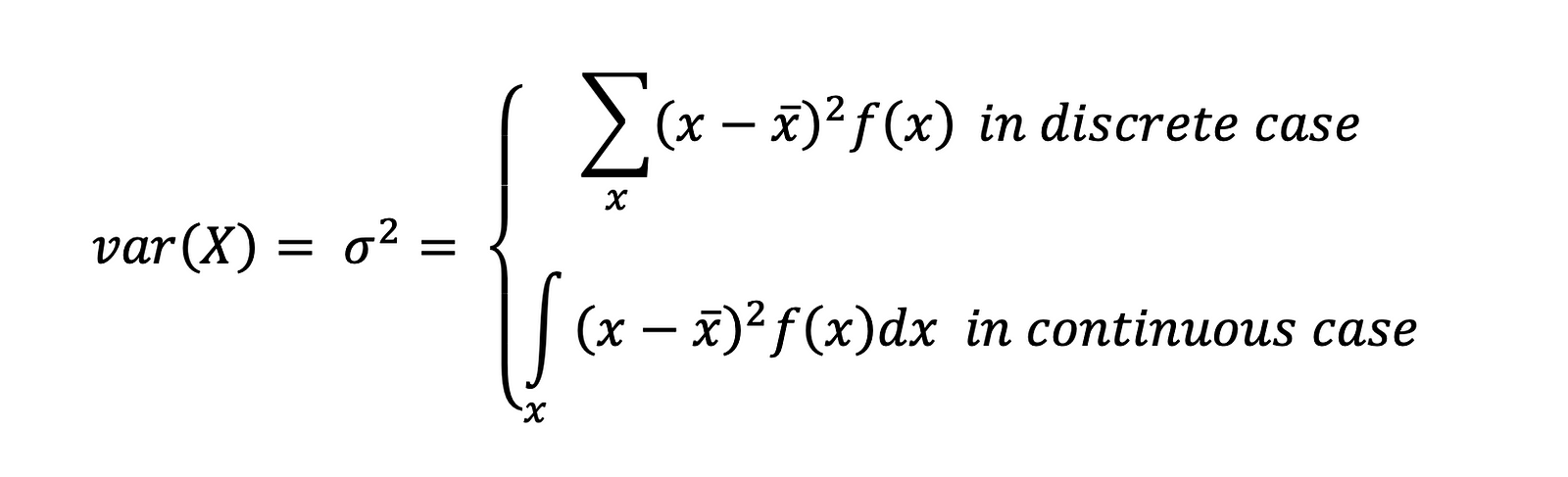

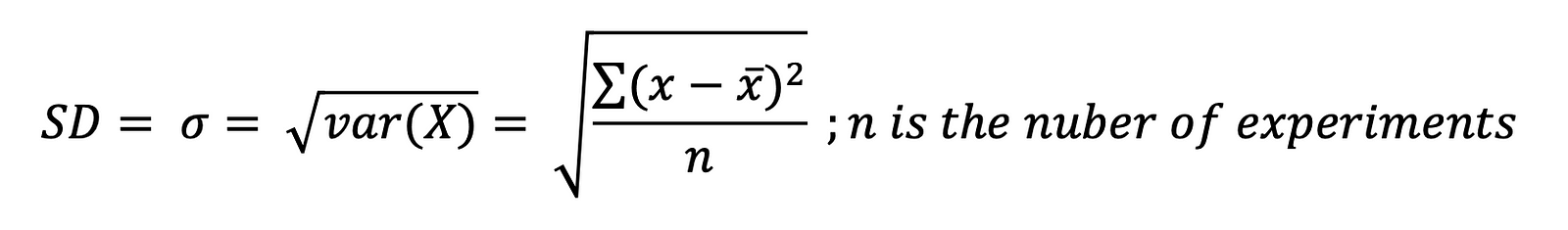

Standard Deviation

Standard deviation [denoted SD or 𝜎] is the square root of the variance, 𝜎 = √var(X). It measures how far values typically lie from the mean, in the same units as the data.
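For a fair die, the variance can be computed both from the definition E[(X − μ)²] and from the shortcut E[X²] − μ², and the standard deviation is its square root. A minimal sketch using exact fractions:

```python
import math
from fractions import Fraction

# Fair die: values 1..6, each with probability 1/6
pmf = {x: Fraction(1, 6) for x in range(1, 7)}

mu = sum(x * p for x, p in pmf.items())                       # E[X]
var_def = sum((x - mu) ** 2 * p for x, p in pmf.items())      # E[(X - mu)^2]
var_short = sum(x**2 * p for x, p in pmf.items()) - mu**2     # E[X^2] - mu^2
sd = math.sqrt(var_def)                                       # sigma

print(mu, var_def, var_short)  # 7/2 35/12 35/12
print(round(sd, 4))            # 1.7078
```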

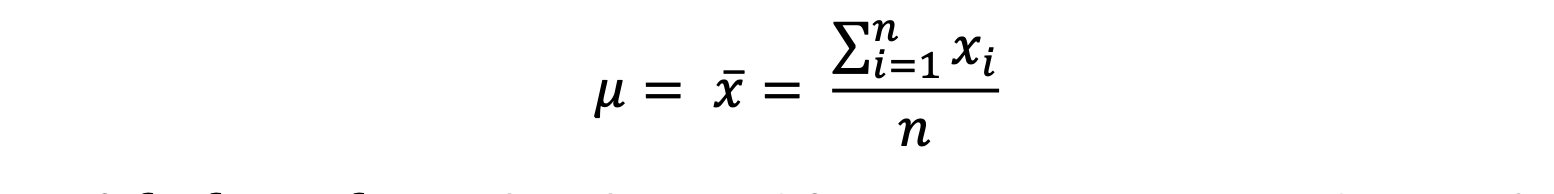

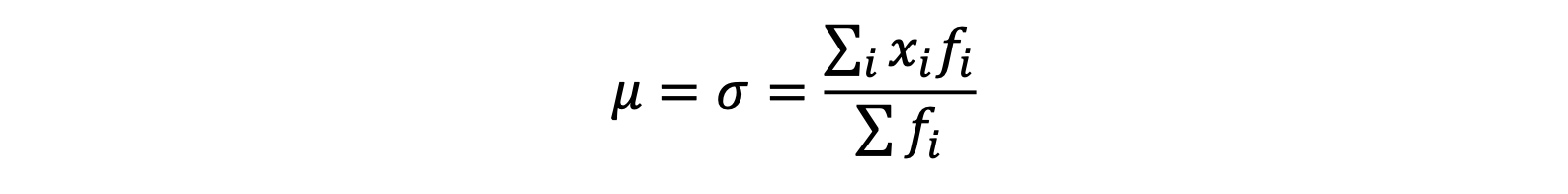

Mean (𝜇 or x̄)

If 𝑥1, 𝑥2, …, 𝑥𝑛 are 𝑛 observations, then

$$\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i$$

Or, if 𝑓1, 𝑓2, …, 𝑓𝑛 are the observed frequencies of the values 𝑥1, 𝑥2, …, 𝑥𝑛, respectively, then

$$\bar{x} = \frac{\sum_{i=1}^{n} f_i x_i}{\sum_{i=1}^{n} f_i}$$
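Both mean formulas give the same answer when the frequency table is built from the raw data. The observations below are hypothetical.

```python
from fractions import Fraction

# Hypothetical raw observations x_1, ..., x_n
data = [4, 8, 6, 5, 3, 6, 6]
mean_raw = Fraction(sum(data), len(data))

# The same data as a frequency table: value x_i observed f_i times
freq = {3: 1, 4: 1, 5: 1, 6: 3, 8: 1}
mean_freq = Fraction(sum(x * f for x, f in freq.items()), sum(freq.values()))

print(mean_raw, mean_freq)  # 38/7 38/7
```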

Probability Distribution:

We know that a probability distribution lists the probabilities of all the possible outcomes of a random experiment. Probability distributions are of two types: i) discrete and ii) continuous. These can be further divided as follows:

- Discrete Probability Distribution

  i) Bernoulli Distribution

  ii) Binomial Distribution

  iii) Poisson Distribution

- Continuous Probability Distribution

  i) Normal or Gaussian Distribution

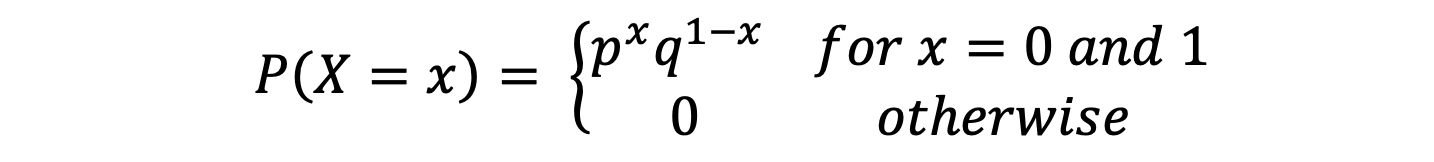

Bernoulli Distribution: The Bernoulli distribution is a discrete distribution in which the random variable 𝑋 takes only the two values 1 and 0, with probabilities 𝑝 and 𝑞 respectively, where 𝑝 + 𝑞 = 1. A discrete random variable 𝑋 is said to have a Bernoulli distribution if

$$P(X = x) = p^x q^{1-x}, \qquad x \in \{0, 1\}$$

Here 𝑋 = 0 stands for failure and 𝑋 = 1 for success.
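A minimal sketch of the Bernoulli PMF with a hypothetical success probability p = 0.3. It also checks the standard results E[X] = p and var(X) = pq numerically.

```python
def bernoulli_pmf(x, p):
    """P(X = x) = p**x * q**(1 - x) with q = 1 - p, for x in {0, 1}."""
    if x not in (0, 1):
        return 0.0
    return p**x * (1 - p) ** (1 - x)

p = 0.3                                    # hypothetical success probability
probs = {x: bernoulli_pmf(x, p) for x in (0, 1)}
mean = sum(x * px for x, px in probs.items())                     # E[X] = p
variance = sum((x - mean) ** 2 * px for x, px in probs.items())   # var(X) = p * q
print(probs, mean, variance)
```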

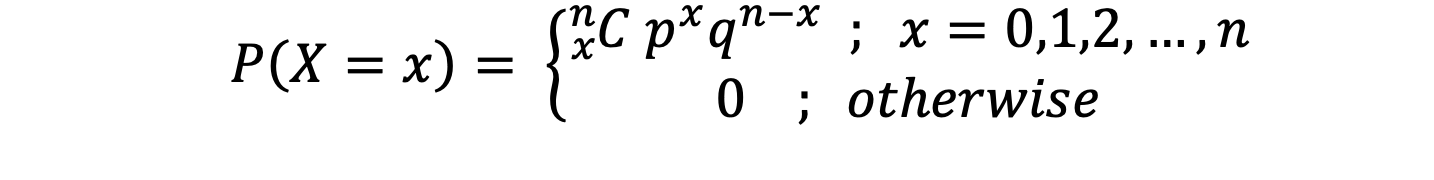

Binomial Distribution: The binomial distribution is an extension of the Bernoulli distribution. There is a finite number 𝑛 of trials, each with only two possible outcomes: success with probability 𝑝 and failure with probability 𝑞 = 1 − 𝑝. The probability of success does not change from trial to trial, and the trials are statistically independent. Mathematically, a discrete random variable 𝑋 is said to follow a binomial distribution if

$$P(X = x) = \binom{n}{x} p^x q^{n-x}, \qquad x = 0, 1, \dots, n$$
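The binomial PMF translates directly into Python with `math.comb` (Python 3.8+). This sketch reproduces the three-coin example from earlier with n = 3 and p = 0.5; setting n = 1 recovers the Bernoulli distribution.

```python
from math import comb

def binomial_pmf(x, n, p):
    """P(X = x) = C(n, x) * p**x * q**(n - x), with q = 1 - p."""
    if not 0 <= x <= n:
        return 0.0
    return comb(n, x) * p**x * (1 - p) ** (n - x)

# Number of heads in 3 tosses of a fair coin
pmf = [binomial_pmf(x, 3, 0.5) for x in range(4)]
print(pmf)  # [0.125, 0.375, 0.375, 0.125]
```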

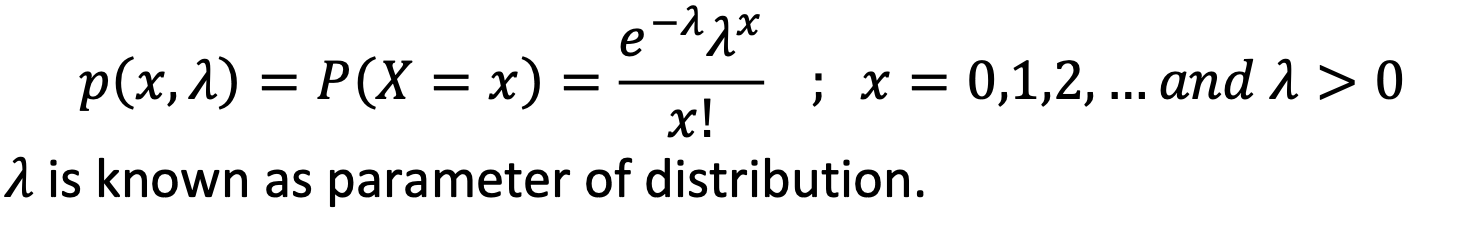

Poisson Distribution: The Poisson distribution is the limiting case of the binomial distribution when the number of trials is infinitely large (𝑛 → ∞), the constant probability of success in each trial is indefinitely small (𝑝 → 0), and the mean of the binomial distribution, 𝑛𝑝 = 𝜆, remains finite.

Mathematically, a random variable 𝑋 is said to follow a Poisson distribution if it takes only non-negative integer values and its PMF is given by

$$P(X = x) = \frac{e^{-\lambda} \lambda^x}{x!}, \qquad x = 0, 1, 2, \dots$$
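The limiting relationship can be observed numerically: keep λ = np fixed (λ = 3 here, an arbitrary choice) and let n grow, and the binomial probabilities approach the Poisson ones. A minimal sketch:

```python
from math import comb, exp, factorial

def poisson_pmf(x, lam):
    """P(X = x) = e**(-lam) * lam**x / x!  for x = 0, 1, 2, ..."""
    return exp(-lam) * lam**x / factorial(x)

def binomial_pmf(x, n, p):
    """Binomial PMF C(n, x) p**x (1 - p)**(n - x)."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)

lam = 3.0
for n in (10, 100, 10_000):            # n grows while n * p stays equal to lam
    p = lam / n
    gap = max(abs(binomial_pmf(x, n, p) - poisson_pmf(x, lam)) for x in range(10))
    print(n, round(gap, 5))            # the gap shrinks as n increases
```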

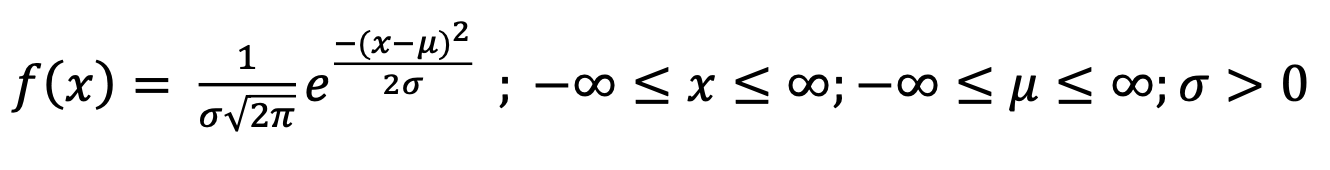

Normal Distribution: The normal probability distribution is one of the most important probability distributions, mainly for two reasons:

a) It is used as a sampling distribution.

b) It fits many natural phenomena, including human characteristics such as height and weight.

The normal distribution describes how the values of a variable are distributed. It is a symmetric distribution in which most observations cluster around the central peak, and the probabilities of values further away from the mean taper off equally in both directions.

Mathematically, if 𝑋 is a continuous random variable, then 𝑋 is said to have a normal probability distribution if its PDF is given by

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\!\left(-\frac{(x - \mu)^2}{2\sigma^2}\right), \qquad -\infty < x < \infty$$

This is denoted by 𝑁(𝜇, 𝜎²).

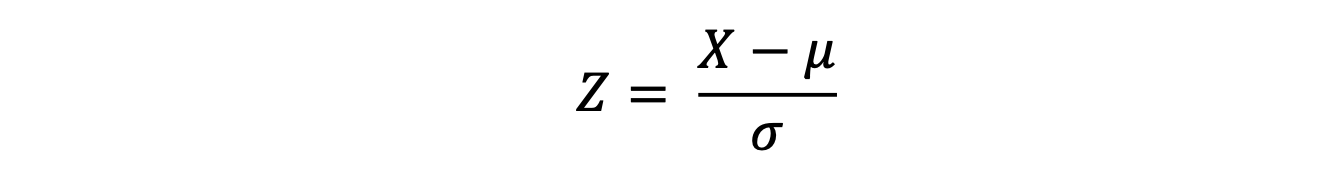

When 𝜇 = 0 and 𝜎 = 1, the distribution becomes a special case called Standard Normal Distribution.

Any normal distribution can be converted into the standard normal distribution using the formula

$$Z = \frac{X - \mu}{\sigma}$$

𝑍 is called the standard normal variate, and its distribution 𝑁(0, 1) is known as the Z-distribution.
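A short sketch of standardization using `statistics.NormalDist` from the Python standard library (available since Python 3.8). The height model N(170, 10²) is a hypothetical example; the hand-written `normal_pdf` follows the PDF formula for N(μ, σ²) and is compared against the library value.

```python
import math
from statistics import NormalDist

def normal_pdf(x, mu, sigma):
    """PDF of N(mu, sigma^2), written out from the formula."""
    coeff = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    return coeff * math.exp(-((x - mu) ** 2) / (2.0 * sigma**2))

# Hypothetical model of adult heights in cm: N(mu = 170, sigma = 10)
mu, sigma = 170.0, 10.0
heights = NormalDist(mu, sigma)
standard = NormalDist(0.0, 1.0)          # standard normal N(0, 1)

x = 185.0
z = (x - mu) / sigma                     # standard normal variate
print(z)                                 # 1.5

# The hand-written PDF agrees with the library's
print(math.isclose(normal_pdf(x, mu, sigma), heights.pdf(x)))   # True
# Standardizing preserves probabilities: P(X <= x) == P(Z <= z)
print(math.isclose(heights.cdf(x), standard.cdf(z)))            # True
```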


©2023 Code Algorithms Pvt. Ltd.

All rights reserved.