Which Problems can be Solved Using Dynamic Programming?

There are a lot of variations available to dynamic programming problems. Sometimes, programmers face challenges in verifying whether the given problem is a dynamic programming problem or not. Even sometimes it may take significant time also.

So understanding this idea is important because once we decide that the given problem can be solved using dynamic programming, it will take a totally different solution path. In practice, there are two popular categories of problems that can be solved using dynamic programming: 1) Optimization problems and 2) Counting problems.

1. Optimization problem

In an optimization problem, we need to find an optimal solution (minimum, maximum, longest, shortest, etc.) from a large solution space, where each solution has a value. A brute force solution idea is to explore every possible solution using recursion and identify optimal solutions among them. But unfortunately, the total number of subproblems may be more than expected due to repeated occurrences of the same sub-problem.

Optimization problem example 1: The longest common subsequence problem

Given two array strings X[] and Y[] of size m and n, write a code to find the length of the longest common sequence. A common subsequence of two strings is a subsequence that is common to both strings. If there is no common subsequence, return 0.

There can be multiple possible common subsequences of the longest length but need to just return the length of the longest common subsequence. So this is an optimization problem where the solution space is very large. If string X has size m, then there will be 2^m — 1 possible subsequence. How? The idea is simple!

There would be two choices for each character in a string: Either it is part of the subsequence or not part of the subsequence. To find the total number of subsequences, we need to multiply the possibility for all m characters, which is 2^m (multiply 2 m number of times). If we ignore the possibility of empty subsequences, the total number of subsequences = 2^m — 1. Similarly, string Y of size n has 2^n — 1 subsequence.

Let's understand this with an example.

Suppose X[] = "ABC" which has three characters.

If we take 1 when character is part of the

subsequence and take 0 not part of the subsequence.

Then we can generate all 8 subsequences by masking

the input string with the bits of all 3 size bianry numbers.

A B C

0 0 0 => Empty

0 0 1 => C

0 1 0 => B

0 1 1 => BC

1 0 0 => A

1 0 1 => AC

1 1 0 => AB

1 1 1 => ABC

If we have m size string then total count

of m size bianry numbers will be 2^m.One basic idea would be to explore all possible subsequences of both strings, compare each subsequence of X with each subsequence of Y and find the common and longest among them. So this solution would be exponential time and highly inefficient.

We can also see the pattern of overlapping and repeated sub-problems when we design a recursive solution. This is also one of the reasons that our solution space is exponential time. So we can use the top-down or bottom-up approach of DP and optimize the time complexity to O(mn) time using O(mn) space. Even we can move one step further and solve this problem in O(n) space.

Optimization problem example 2: The max subarray sum problem

Given an array A of size n containing positive and negative integers, the goal is to determine indices i and j, 1 <= i <= j <= n, such that A[i] + A[i + 1] + … + A[j] is a maximum. In other words, the goal is to find contiguous array values A[i], A[i +1], . . . , A[j] such that their sum A[i] + A[i + 1] + … + A[j] is maximized.

This is an optimization problem since we want to optimize, i.e., maximize the sum from all possible solution space. The solution space for this is the set of all pairs of indices i and j, 1 <= i <= j <= n, with each pair giving rise to a sum. Thus there are n² such sums.

Note that all these sums are solution candidates for the problem (the optimum is the one which is the maximum sum). These are called feasible solutions. Feasible solutions are those that satisfy the constraints of the problem. Here the constraint is that the array sum has to be over a contiguous subarray, i.e., it includes all array values between indices i and j (including A[i] and A[j]).

It is easy to see that the problem can be solved by calculating all the possible n² array sums and taking the maximum among those. This takes at least n² operations; actually, even more since to compute a particular sum A[i] + · · · + A[j] requires j − i addition operations. It is not difficult to calculate that the total number of addition operations over all the O(n²) array sums is O(n³). Using divide and conquer, one can design O(nlog n), but using DP, we can develop a simple and elegant O(n) time algorithm that is also easy to implement.

Other examples of optimization problems

- Longest Increasing subsequence

- Longest Palindromic Substring

- Max Product Subarray

- Maximal square

- Minimum coin change problem

- Minimum number of jumps to reach the end

2. Counting Problem

In this type of problem, we need to count all occurrences of a typical pattern or constraint. So divide and conquer solutions need to explore all possible ways recursively, which could lead to many recursive calls due to repeated subproblems.

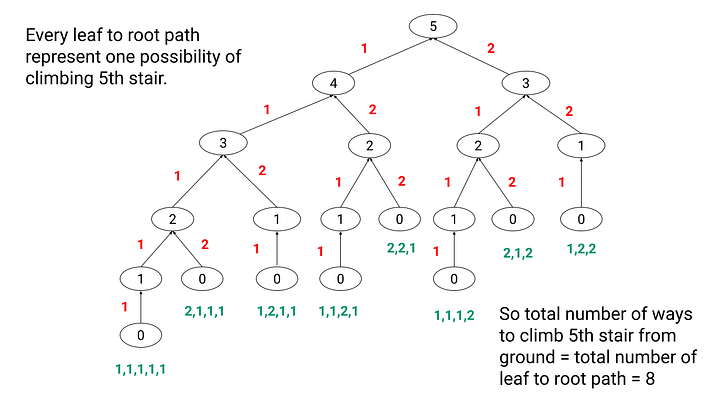

Counting problems Example 1: Count the number of ways to reach the nth stair

There is a staircase of n steps and you can climb either 1 or 2 steps at a time. We need to count and return the total number of unique ways to climb the top of the staircase. The order of steps matters.

This is a counting problem and there are several ways to reach the nth stair. If we think recursively, there are two ways to reach the nth stair: Either from (n-1)th stair by taking 1 step or from (n-2)th stair by taking 2 steps. So total number of ways to reach nth stair = Total number of ways to reach (n-1)th stair + Total number of ways to reach (n-2)th stair.

So recurrence relation is: climb(n) = climb(n-1) + climb(n-2), where climb(1) = 1 and climb(2) = 2. This looks similar to recurrence relation of the Fibonacci series so we can apply the idea of dynamic programming.

Counting problems Example 1: Count paths from the top left to the bottom right of an MxN matrix

You have given an NxM grid, and you are at the starting point i.e. 1st row and 1st column or (0,0). You can move either downward(D) or rightward(R). So problem is to find the total number of paths starting from the first cell to the last cell i.e. (N, M).

Solution via Combinatorics

We can observe one thing clearly: if we have to reach form (0,0) to (N, M), we have to move N steps downward, and M steps rightward. So, how do we formulate the solution and count the total number of paths? If we look carefully at every cell, we have two choices: either move downward or rightward. But there is one constraint: These choices must have N downward and M rightward choices as then only we can reach the last cell. For example, we can reach the last cell if we go RRDRDRDDRD…….up to N D’s and M R’s (here R represents right and D down).

The problem can be solved by simple combinatorics. Basically, every path is made up of N downward movement and M rightward movement. As we need only N step to reach the bottom out of those (N+M) steps, the paths differ from each other in the choice of the N downward movement out of the (N+M) possibilities. So, the total number of paths can be obtained as the number of ways to choose N out of (N+M) different things, that is, as C(M+N, N) = (M + N)!/(N!)(M!).

We can also think in another way! We can reach the right end using M steps, out of (N+M) steps. So, the total number of paths can be obtained as the number of ways to choose M out of (N+M) different things, that is, as C(M+N, M) = (M + N)!/(N!)(M!). In both cases, the answer is the same i.e. (M + N)!/(N!)(M!).

Recursive solution: We can reach the cell (M, N) from (N-1, M) and (N, M-1) cells. So the total number of ways to reach (M, N)th cell = total number of ways to reach (N-1, M) th cell + the total number of ways to reach (N-1, M)th cell => pathCount(N,M) = pathCount(N-1,M)+pathCount(N,M-1)

As we can observe, the total number of paths can be large in numbers. If we design a recursive solution, a lot of sub-problems will be repeated and we can apply a dynamic programming strategy.

Other examples of counting problems

- Count ways to make the change

- Count of possible Binary Search Trees with n keys

- Count possible decodings of a given digit sequence

- Count the number of binary strings without consecutive 1’s

- Count all palindromic subsequences in a string

- Count of strings that can be formed using a, b and c under given constraints

- Count of different ways to express n as the sum of 1, 3 and 4

A critical note to identify a dynamic programming problem

If we have an optimization or counting problem, then one of the simple ideas would be to design a recursive solution, draw the recursion tree for some sample input and identify the repeated sub-problems scenario. If subproblems are repeated then the given problem can be solved using dynamic programming.

But the above approach may take little time because we have to go through two critical processes: designing the recursive solution and drawing a recursion tree! A critical question is: Is there a way to quickly identify such problems? Here is one idea using some math or combinatorics that can work efficiently in most scenarios.

When the given problem is an optimization or counting problem, we can apply combinatorics to count all the possible solution candidates. If the solution space is very large or exponential, that problem can be solved using dynamic programming. The reason is simple: recursion will have to explore all possible solution candidates, which is exponential in the count. Any such recursive solution may lead to repeated sub-problems scenarios.

Enjoy learning, Enjoy algorithms!

Share Your Insights

More from EnjoyAlgorithms

Self-paced Courses and Blogs

Coding Interview

OOP Concepts

Our Newsletter

Subscribe to get well designed content on data structure and algorithms, machine learning, system design, object orientd programming and math.

©2023 Code Algorithms Pvt. Ltd.

All rights reserved.